Tom Costello

@tomcostello.bsky.social

research psychologist. beliefs, AI, computational social science. prof at american university.

Maybe you see this as all too rosy, which is fair and maybe even true, but warnings and dismissals (alone) are bad tools, if nothing else. future isn't set. So yes, I believe we should actively articulate and defend a positive vision in order to reduce harms + capture gains.

July 24, 2025 at 4:16 PM

Also, incentives are not static; if revenue continues to come from usage fees (rather than ads), maybe helping users reach reliable answers is indeed a profitable/competitive approach. open question. plus i don't imagine these big companies want to replay social media era mistakes

July 24, 2025 at 4:01 PM

So the problem is incentives. I agree. The incentives are aligned with building the models in the first place, too (hence my first sentence in that quote). Should we not try to identify and bolster a positive vision that underscores potential returns to cooperation, democracy, etc?

July 24, 2025 at 3:51 PM

Thanks for sharing!

July 24, 2025 at 3:37 PM

More on that front soon, actually...

July 9, 2025 at 9:25 PM

I think this is interesting, and it would be worthwhile to convene a group and expose them to this chatbot interaction (perhaps it would be much less effective when social dynamics are involved), but I think the active ingredient is strong arguments + evidence. LLMs can surface good arguments.

July 9, 2025 at 8:35 PM

You mean given everything with the Epstein files?

July 9, 2025 at 7:04 PM

yeah, I think that talking to an LLM that is prompted to behave like ChatGPT is likely to amplify whichever tendencies already exist in a person (so it can reinforce weird beliefs, as has been reported). But our studies give the LLM a very specific goal (e.g., debunking), so it is not 1:1 in a meaningful way

July 9, 2025 at 6:46 PM

🚨New WP🚨

Using GPT4 to persuade participants significantly reduces climate skepticism and inaction

-Sig more effective than consensus messaging

-Works for Republicans

-Evidence of persistence @ 1mo

-Scalable!

PDF: osf.io/preprints/ps...

Try the bot: www.debunkbot.com/climate-change

Here’s how 👇

July 9, 2025 at 4:34 PM

Do these effects extend to non-conspiracy beliefs, like climate attitudes? yes!

bsky.app/profile/dgra...

July 9, 2025 at 4:34 PM

Second, why are debunking dialogues so effective? Good arguments and evidence! (and, for unfolding conspiracies, saying "no one knows what's going on, you should be epistemically cautious" may be a strong argument)

bsky.app/profile/tomc...

Last year, we published a paper showing that AI models can "debunk" conspiracy theories via personalized conversations. That paper raised a major question: WHY are the human<>AI convos so effective? In a new working paper, we have some answers.

TLDR: facts

osf.io/preprints/ps...

July 9, 2025 at 4:34 PM

Some other recent papers from our group on AI debunking:

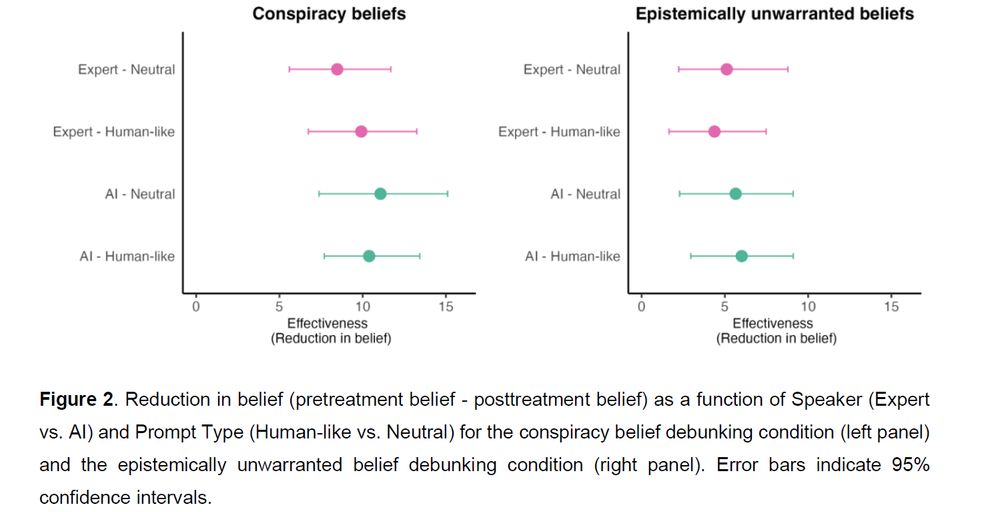

First, does this work if people think they're talking to a human being? yes!

bsky.app/profile/gord...

Recent research shows that AI can durably reduce belief in conspiracies. But does this work b/c the AI is good at producing evidence, or b/c ppl really trust AI?

In a new working paper, we show that the effect persists even if the person thinks they're talking to a human: osf.io/preprints/ps...

🧵

July 9, 2025 at 4:34 PM

Huge thanks to my brilliant co-authors: Nathaniel Rabb (who split the work with me and is co-first author), Nick Stagnaro, @gordpennycook.bsky.social, and @dgrand.bsky.social

We're eager to hear your thoughts and feedback!

July 9, 2025 at 4:34 PM

Also, the treatment succeeded for both Democrats and Republicans, who endorsed slightly different conspiratorial explanations of the assassination attempts (see figure below for a breakdown)

July 9, 2025 at 4:34 PM

(the most notable part?): The effect was durable and preventative. When we recontacted participants 2 months later, after the second assassination attempt, those from the treatment group were ~50% less likely to endorse conspiracies about this new event! The debunking acted as an "inoculation" of sorts.

July 9, 2025 at 4:34 PM

Did this work? Yes. The Gemini dialogues significantly reduced conspiracy beliefs compared to controls who chatted about an irrelevant topic or just read a fact sheet (d = .38). The effect was robust across multiple measures.

Key figure attached

July 9, 2025 at 4:34 PM
