Tom Costello

@tomcostello.bsky.social

research psychologist. beliefs, AI, computational social science. prof at american university.

Targeted ads have gone too far

July 24, 2025 at 4:10 PM

Is there a strong case for AI helping, rather than harming, the accuracy of people's beliefs about contentious topics? In this @nature.com Nature Medicine piece (focusing on vaccination), I argue the answer is YES. And it boils down to how LLMs differ from other sources of information.

July 17, 2025 at 8:38 PM

Also, the treatment succeeded for both Democrats and Republicans, who endorsed slightly different conspiratorial explanations of the assassination attempts (see figure below for a breakdown)

July 9, 2025 at 4:34 PM

(the most notable part?): The effect was durable and preventative. When we recontacted participants 2 months later after the second assassination attempt, those from the tx group were ~50% less likely to endorse conspiracies about this new event! The debunking acted as an "inoculation" of sorts.

July 9, 2025 at 4:34 PM

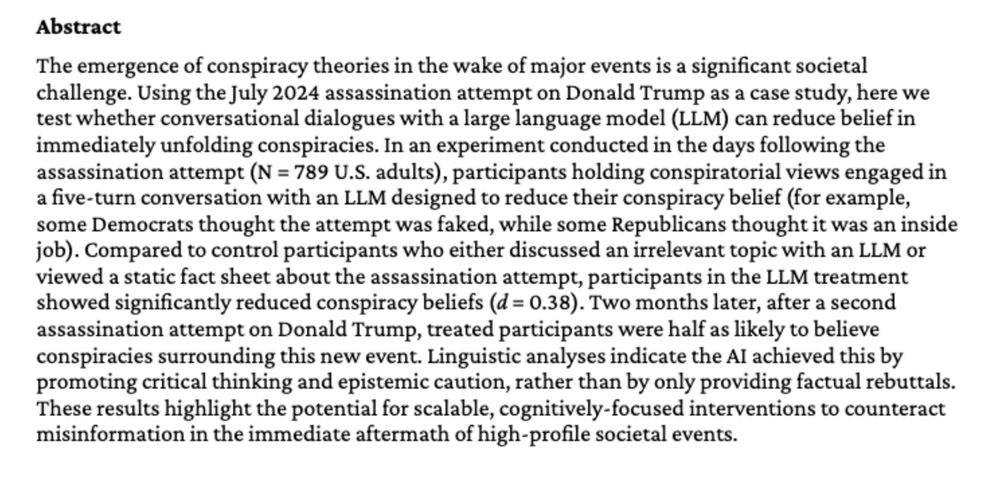

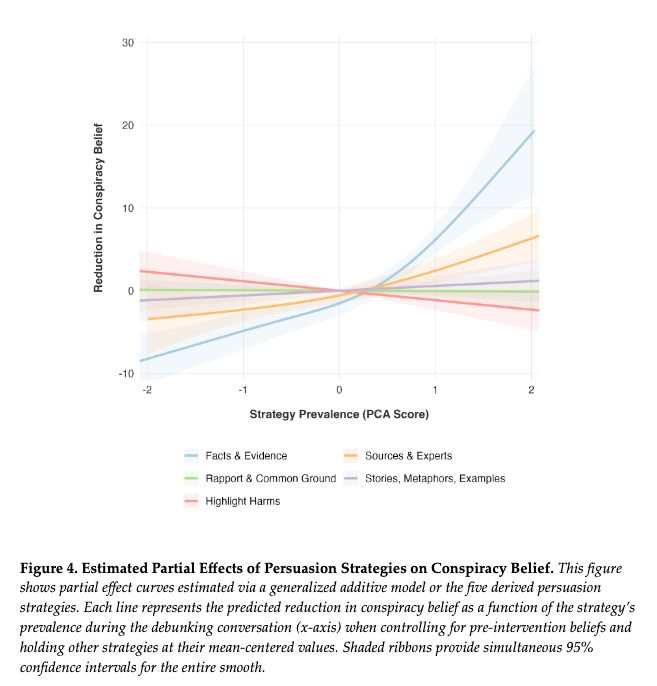

Did this work? Yes. The Gemini dialogues significantly reduced conspiracy beliefs compared to controls who chatted about an irrelevant topic or just read a fact sheet (d = .38). The effect was robust across multiple measures.

Key figure attached

July 9, 2025 at 4:34 PM

Compared to our other studies (where we had an AI debunk established conspiracy theories), here the LLM used fundamentally different persuasive strategies. Instead of using facts (which aren't available, since no one knew what was going on!), it promoted epistemic caution & critical thinking.

July 9, 2025 at 4:34 PM

Conspiracies emerge in the wake of high-profile events, but you can’t debunk them with evidence because little yet exists. Does this mean LLMs can’t debunk conspiracies during ongoing events? No!

We show they can in a new working paper.

PDF: osf.io/preprints/ps...

July 9, 2025 at 4:34 PM

It’s my birthday, so today seems like as good a time as any to share that, this summer, I’ll be joining Carnegie Mellon University as an assistant professor in the Department of Social and Decision Sciences (w/ courtesy in HCI institute).

May 30, 2025 at 4:27 PM

In trying to get purple Connections first, I ended up with a sequence of guesses that was unique across all other players.

I think it's impossible to replicate this while earnestly trying to get the puzzle right?

May 27, 2025 at 8:13 PM

We used AI to examine the text of every transcript from the tx condition using a 28-theme codebook. Among participants unchanged by the convo, the #1 barrier was rigid “law-is-the-law” legalism (44%). This fits with prior research showing starkly diff views on legal vs undocumented immigration.

May 21, 2025 at 4:55 PM

Following the convos, anti-immigrant prejudice fell 0.13 SD and pro-immigration policy support rose 0.15 SD vs. control.

In a follow-up survey 5 weeks later—during the final month of the 2024 election—30-40% of those attitude shifts were still there. That is, the prejudice reduction endured.

May 21, 2025 at 4:55 PM

🚨New WP🚨

Can AI do "deep canvassing"—the time-intensive, empathic persuasive dialogues that durably reduce prejudice when done by humans?

Spoiler: Yes! We find durable reduction in prejudice toward undocumented immigrants

LLMs make deep canvassing possible at massive scale

osf.io/preprints/os...

May 21, 2025 at 4:55 PM

Here’s an example of a CBS anchor not believing it just last week!

May 15, 2025 at 9:26 PM

Had a chat about “debunkbot” and the research supporting it on CBS Mornings yesterday!

www.cbsnews.com/video/ai-cha...

May 8, 2025 at 5:03 PM

Personalized AI dialogues increased parents' vaccination intentions significantly: 28.9% (!!) for their sons and 21.9% for daughters compared to a placebo control.

The AI treatment was twice as effective as an information-packed CDC brochure (i.e., the current scalable standard)!

April 3, 2025 at 2:06 AM

AI lets us query humanity’s collective knowledge (or a subset of it) – rapidly addressing any given parental concern about vaccines in compelling detail.

So perhaps information-focused LLM conversations can shift vaccination intentions. We test this in an RCT of 1,124 HPV vax-hesitant parents.

April 3, 2025 at 2:06 AM

AI lets us query humanity’s collective knowledge (or a subset of it) – thus addressing any given parental concern about vaccines in compelling detail.

So...perhaps information-focused LLM conversations shift vaccination intentions. We test this in an RCT of 1,124 HPV vaccine-hesitant parents.

April 3, 2025 at 1:59 AM

I had a similar concern initially, but looked at this and the tx doesn't just increase variance -- most people are going down (within-participant). e.g., here's a plot from when i first started this work (sorry it is messy)

February 19, 2025 at 3:27 PM

Thanks! Yes, objectivity doesn't seem to be the key factor. Though some people who were not persuaded attributed that to the AI's bias, so perhaps this will work even more effectively if AI is seen as VERY neutral?

February 18, 2025 at 5:37 PM

Many who did change their minds described the AI’s factual, logical approach as the clincher. Those who didn’t highlighted reasons like skepticism of AI, perceived bias, or that the AI’s evidence wasn’t good enough.

February 18, 2025 at 4:30 PM

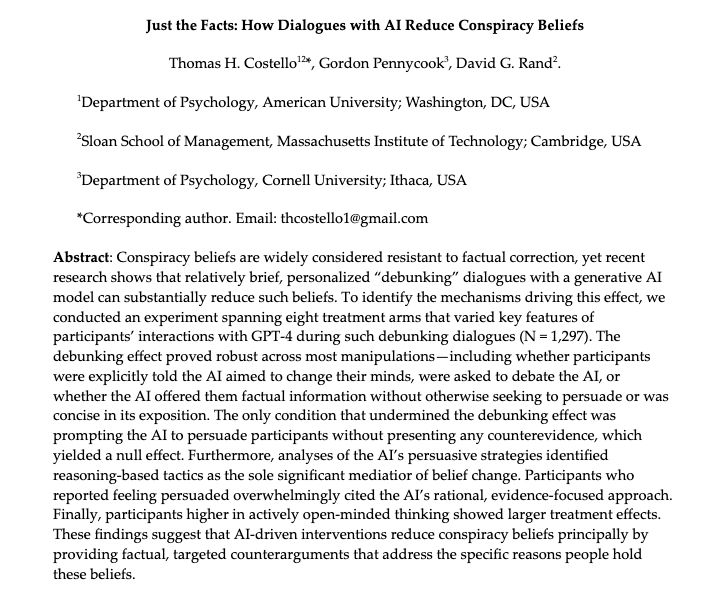

We also analyzed how GPT-4’s persuasion strategies varied across conditions. Reason- and evidence-focused tactics strongly predicted belief change; rhetorical flourishes or emotional appeals mattered less.

February 18, 2025 at 4:30 PM

All other arms yielded conspiracy belief reductions.

This includes:

AI just prompted to provide accurate information (and not to be persuasive)

Participants told the AI wants to change their minds

Much shorter AI msgs

Participants were told their job was to beat the AI in a debate

February 18, 2025 at 4:30 PM

Each experimental arm knocked out one of these explanatory mechanisms.

So, to test whether people are updating their beliefs based on the AI’s targeted counterevidence, we prompted the AI to persuade without using actual facts.

The debunking effect was reduced to zero.

February 18, 2025 at 4:30 PM

Last year, we published a paper showing that AI models can "debunk" conspiracy theories via personalized conversations. That paper raised a major question: WHY are the human<>AI convos so effective? In a new working paper, we have some answers.

TLDR: facts

osf.io/preprints/ps...

February 18, 2025 at 4:30 PM
