🧠🤖

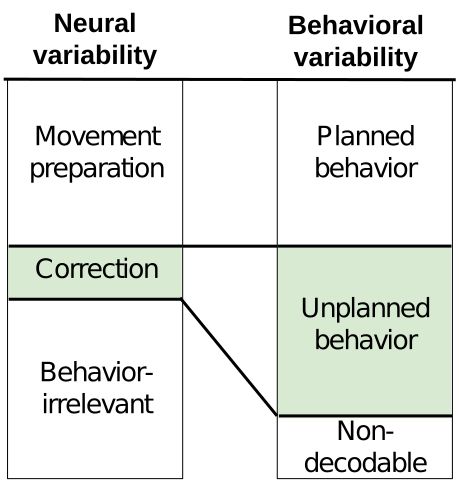

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. Learning curriculum is a determining factor of neural dimensionality - where you start from determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

🧠🤖

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. Learning curriculum is a determining factor of neural dimensionality - where you start from determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

💻🧬 3 weeks of intense coursework & projects with support from expert tutors and faculty

📈Apply until July 1st!

🔗https://imbizo.africa/

💻🧬 3 weeks of intense coursework & projects with support from expert tutors and faculty

📈Apply until July 1st!

🔗https://imbizo.africa/

More info and apply: imbizo.africa/apply/

#Imbizo2026 #CompNeuro

More info and apply: imbizo.africa/apply/

#Imbizo2026 #CompNeuro

Our new paper explores this: elifesciences.org/reviewed-pre...

Our new paper explores this: elifesciences.org/reviewed-pre...

🦖Introducing Reptrix, a #Python library to evaluate representation quality metrics for neural nets: github.com/BARL-SSL/rep...

🧵👇[1/6]

#DeepLearning

🦖Introducing Reptrix, a #Python library to evaluate representation quality metrics for neural nets: github.com/BARL-SSL/rep...

🧵👇[1/6]

#DeepLearning

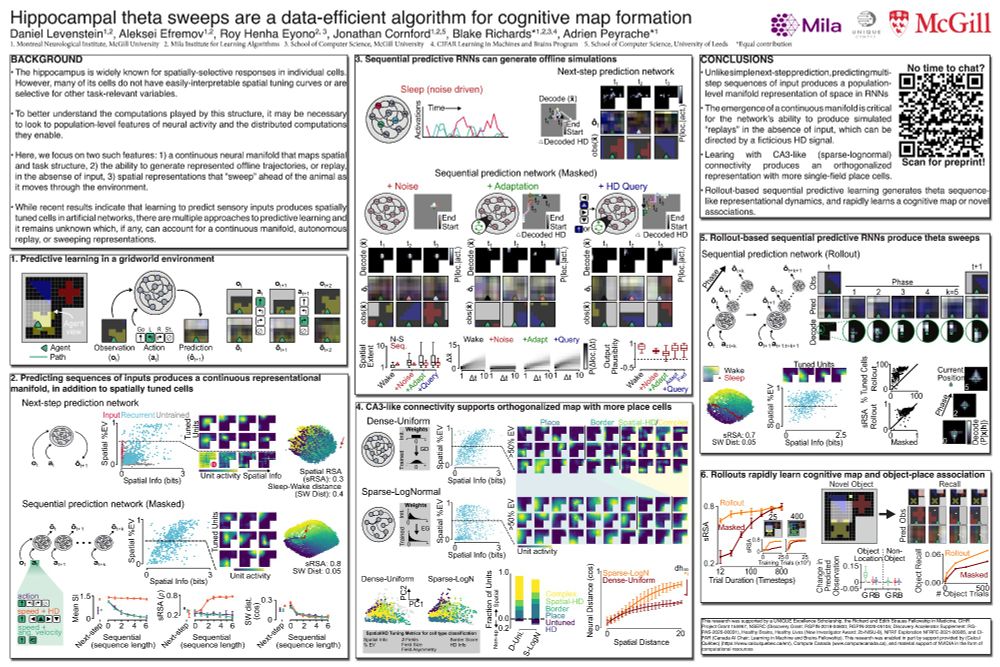

Feel free to drop by! The related pre-print is also out:

www.biorxiv.org/content/10.1...

Feel free to drop by! The related pre-print is also out:

www.biorxiv.org/content/10.1...

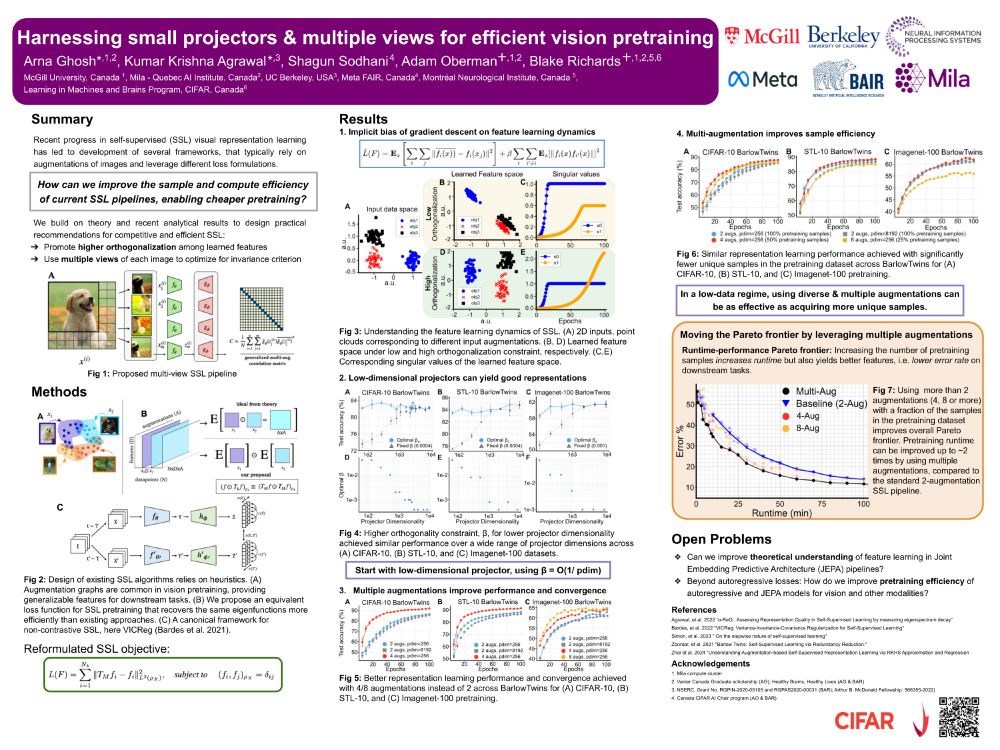

Training time: Weeks

Dataset size: Millions of images

Compute costs: 💸💸💸

Our #NeurIPS2024 poster makes SSL pipelines 2x faster and achieves similar accuracy at 50% pretraining cost! 💪🏼✨

🧵 1/8

Training time: Weeks

Dataset size: Millions of images

Compute costs: 💸💸💸

Our #NeurIPS2024 poster makes SSL pipelines 2x faster and achieves similar accuracy at 50% pretraining cost! 💪🏼✨

🧵 1/8