Sasha Rush

@srushnlp.bsky.social

Professor, Programmer in NYC.

Cornell, Hugging Face 🤗

Reposted by Sasha Rush

If you're in Berkeley or like a nice streamed talk, I'm about to give a talk at the Simons Institute today: "You Know It Or You Don’t: Compositionality and Phase Transitions in LMs". Tune in at 4PM pacific!

simons.berkeley.edu

February 5, 2025 at 7:49 PM

What to know about DeepSeek

youtu.be/0eMzc-WnBfQ?...

In which we attempt to figure out MoE, o1, scaling, tech reporting, modern semiconductors, microeconomics, and international geopolitics.

How DeepSeek Changes the LLM Story

YouTube video by Sasha Rush 🤗

youtu.be

February 4, 2025 at 3:41 PM

For reasons, I find myself thinking a lot about the history of US/USSR Cold War science, particularly in applied math. Does anyone have a recommendation for a good book on this topic?

January 30, 2025 at 5:04 PM

10 short videos about LLM infrastructure to help you appreciate Pages 12-18 of the DeepSeek-v3 paper (arxiv.org/abs/2412.19437)

www.youtube.com/watch?v=76gu...

Flash LLMs: Pipeline Parallel

YouTube video by Sasha Rush 🤗

www.youtube.com

January 7, 2025 at 3:01 PM

I tried reading this book, and I was just shocked at how little insight it had, and its sheer inability to focus. The fact that it is being recommended to policy makers...

www.gatesnotes.com/The-Coming-W...

www.gatesnotes.com

January 6, 2025 at 10:12 PM

I'm going to do a live coding stream for the next couple of hours. We'll start by running through some WebGPU tutorials. Can also talk about some AI stuff.

www.youtube.com/watch?v=sqKq...

Python + WebGPU

YouTube video by Sasha Rush 🤗

www.youtube.com

December 27, 2024 at 7:00 PM

Reposted by Sasha Rush

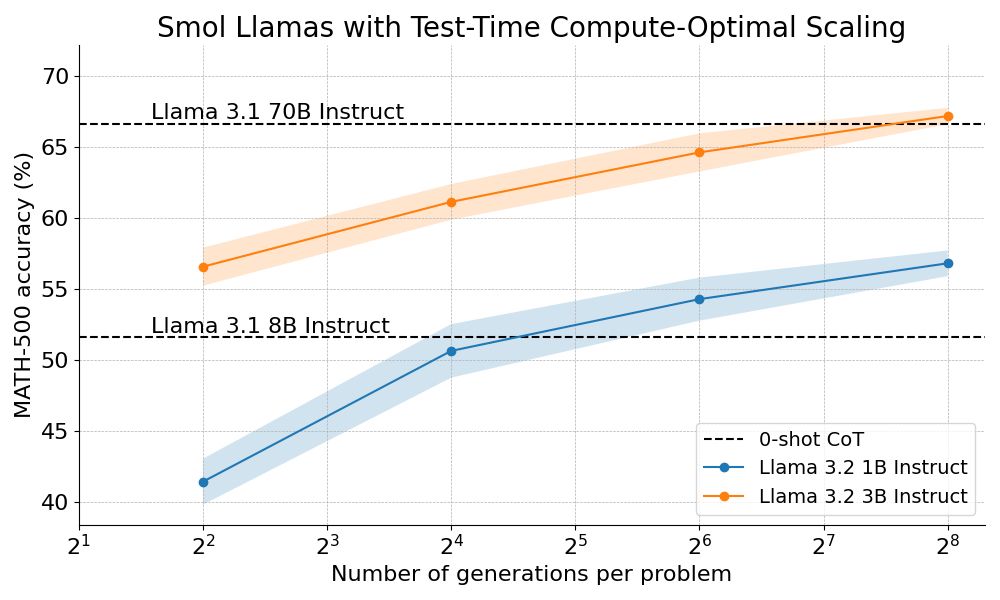

We outperform Llama 70B with Llama 3B on hard math by scaling test-time compute 🔥

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇

December 16, 2024 at 5:08 PM

As coding LLMs get faster at inference, iterating verification-in-the-loop tests becomes the bottleneck for coding agents. Probably need quite different programming systems for these settings, or even things like "batchable" runtimes, whatever that means.

December 11, 2024 at 6:43 PM

Reposted by Sasha Rush

We organised a lively poster fest with many students rehearsing for the upcoming @neuripsconf.bsky.social next week and others discussing their cool works!

Thanks to #GAIL, the #Generative #AI lab in #Edinburgh for sponsoring the event!

December 6, 2024 at 8:43 PM

Reposted by Sasha Rush

I wanted to make my first post about a project close to my heart. Linear algebra is an underappreciated foundation for machine learning. Our new framework CoLA (Compositional Linear Algebra) exploits algebraic structure arising from modelling assumptions for significant computational savings! 1/4

December 5, 2024 at 3:15 PM

Reposted by Sasha Rush

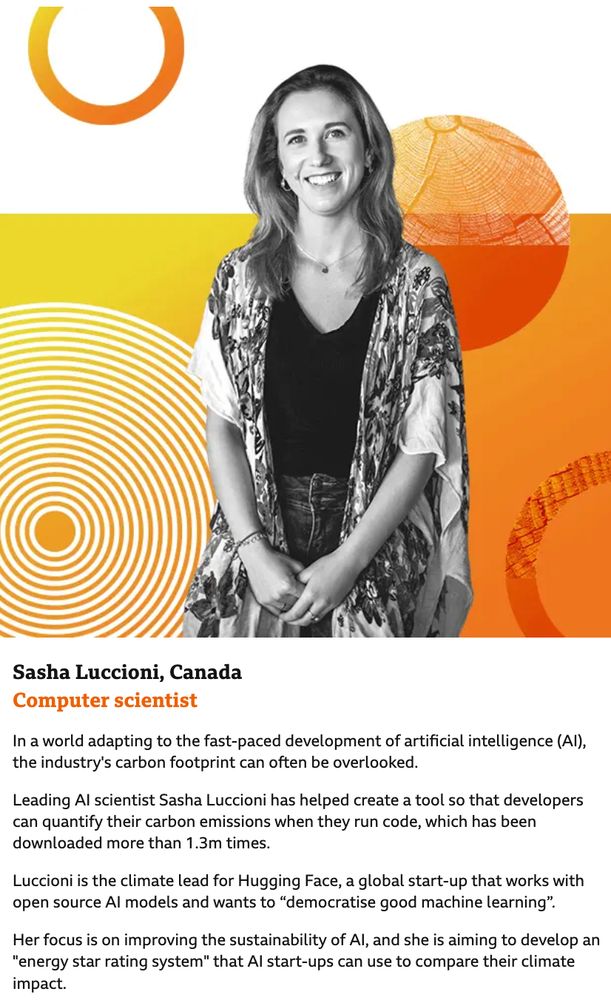

Proud of my amazing colleague @sashamtl.bsky.social for her much deserved recognition on advancing the science of AI energy use.

BBC100: www.bbc.co.uk/news/resourc...

Fast Company: www.fastcompany.com/91233692/why...

Sasha has been working tirelessly moving things fwd--endurance & brilliance in one.

December 3, 2024 at 6:47 PM

Reposted by Sasha Rush

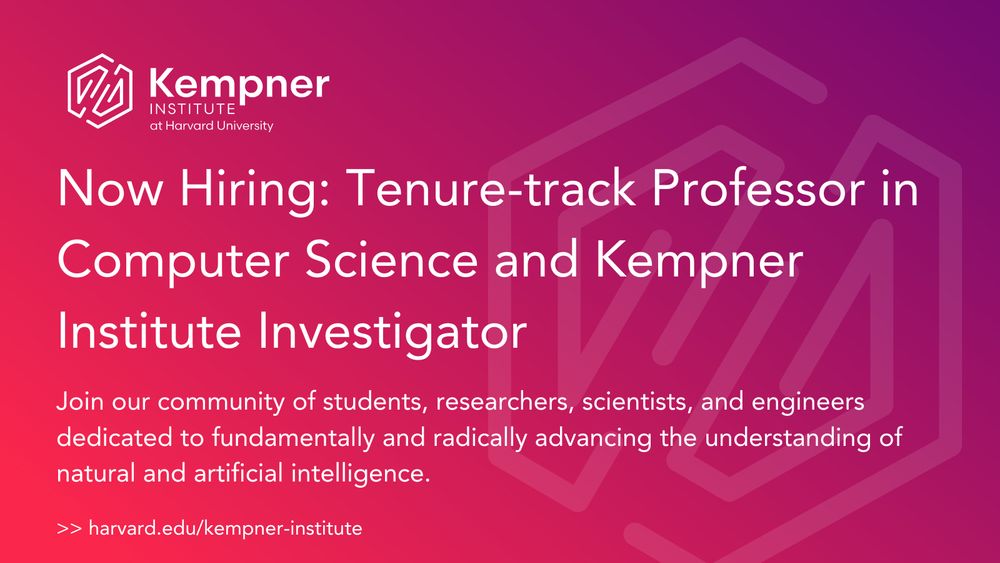

NEW: we have an exciting opportunity for a tenure-track professor at the #KempnerInstitute and the John A. Paulson School of Engineering and Applied Sciences (SEAS). Read the full description & apply today: academicpositions.harvard.edu/postings/14362

#ML #AI

December 3, 2024 at 1:24 AM

Reposted by Sasha Rush

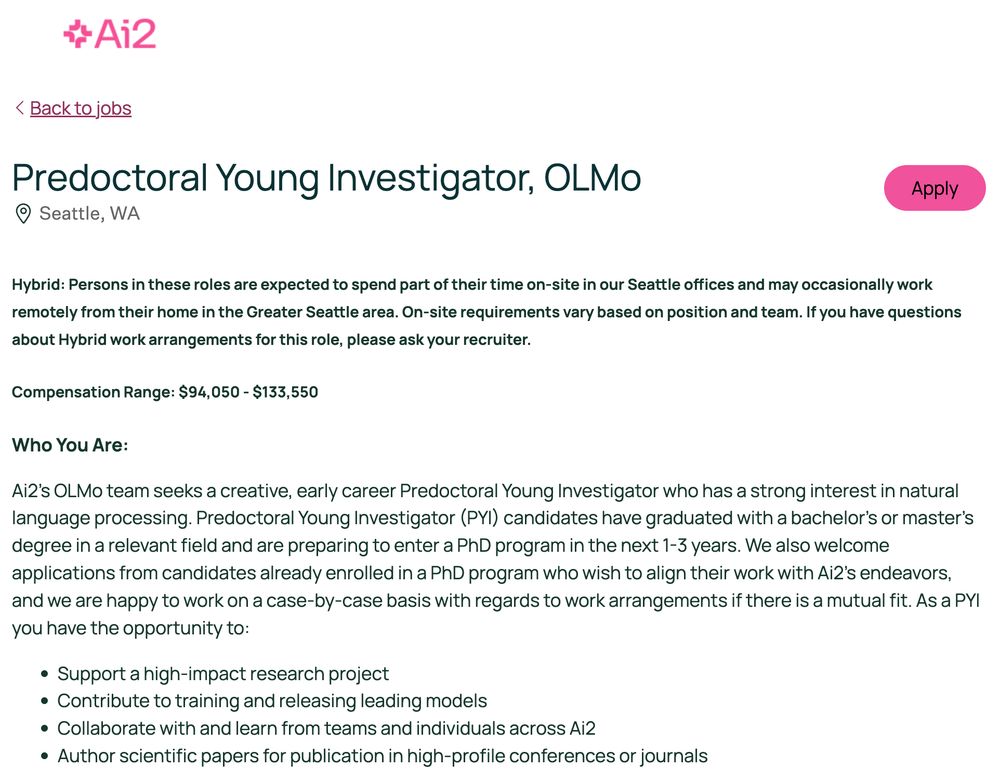

We're hiring another predoctoral researcher for my team at Ai2/OLMo next year. The goal of this position is to mentor and grow future academic stars of NLP/AI over 1-2 years before grad school.

This ends up being people done with BS or MS who want to continue to a PhD soon.

https://buff.ly/49nuggo

December 3, 2024 at 11:52 PM

Is there a community that writes RL-first programming languages? Something like (Num)Pyro that takes seriously the idea of separating the policy specification from the learning process.

December 2, 2024 at 2:24 PM

Reposted by Sasha Rush

ICYMI, @srushnlp.bsky.social recently gave a nice talk speculating about the methods/data used to train OpenAI's o1 model. The key idea seems to be scaling up chain-of-thought (CoT) generation using auxiliary verifier models that can give feedback on the correctness of the generation. 1/N

Talk: Speculations on Test-Time Scaling

A tutorial on the technical aspects behind OpenAI's o1 and open research questions in this space.

youtu.be/6PEJ96k1kiw

Slides+bibliography: github.com/srush/awesom...

Speculations on Test-Time Scaling (o1)

YouTube video by Sasha Rush 🤗

youtu.be

December 1, 2024 at 3:24 AM

Reposted by Sasha Rush

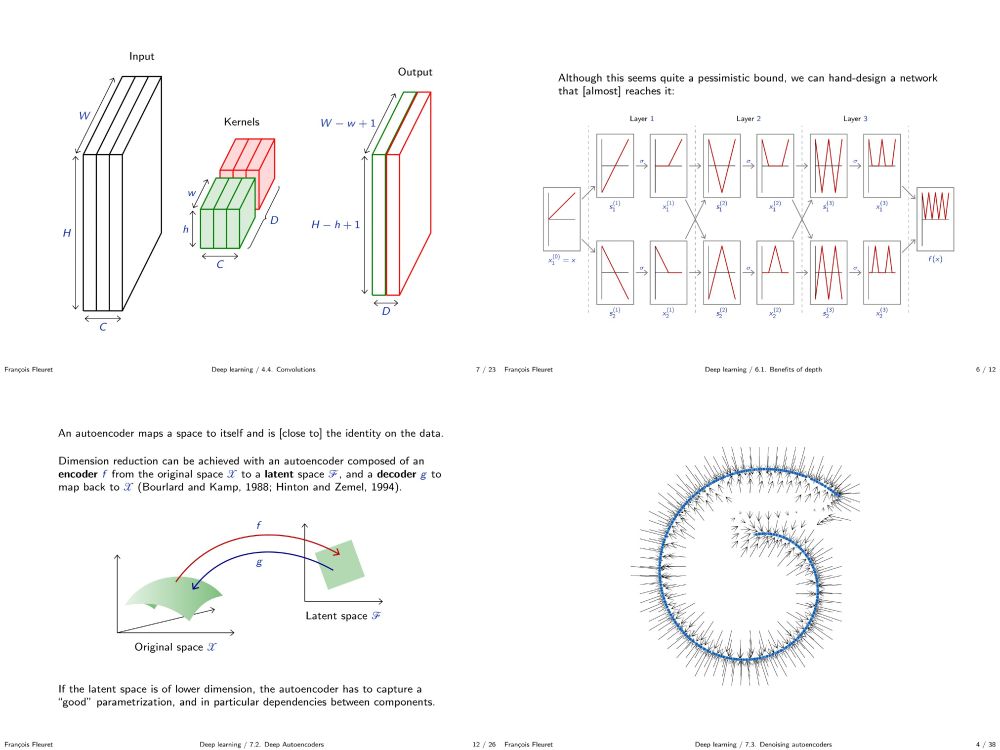

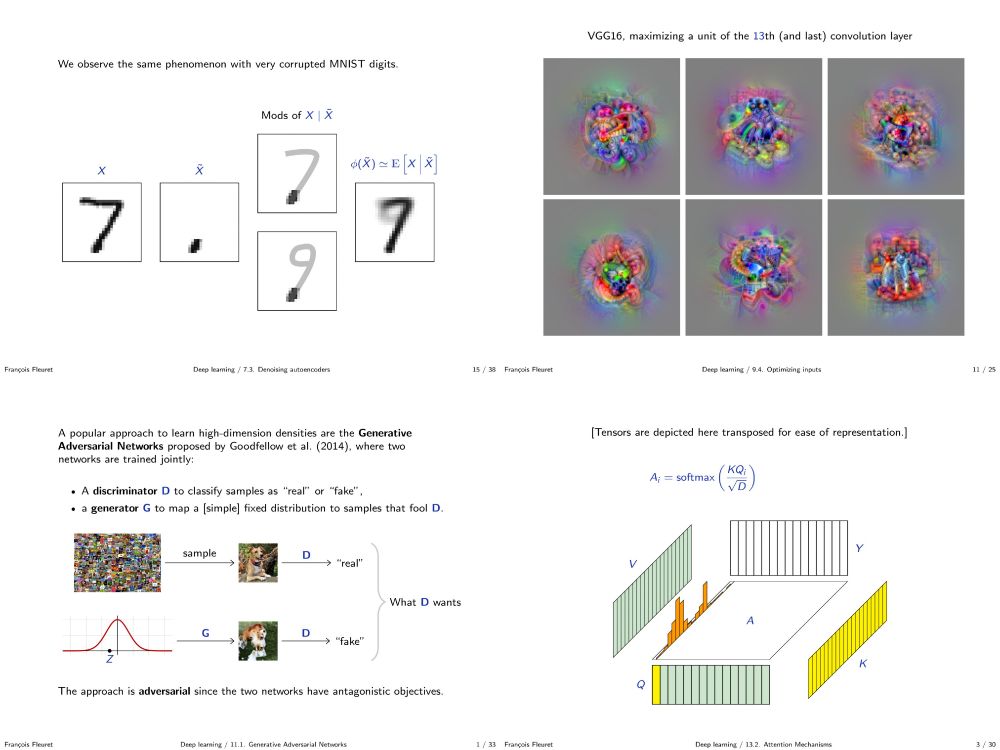

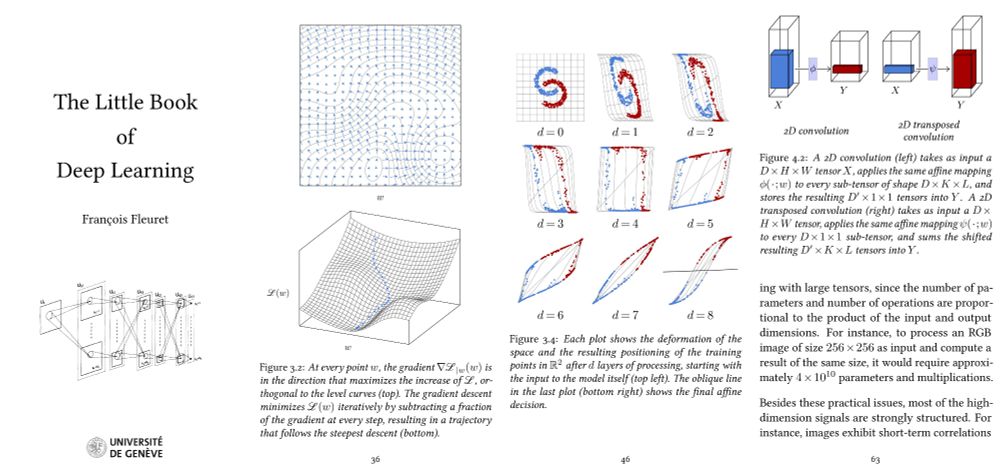

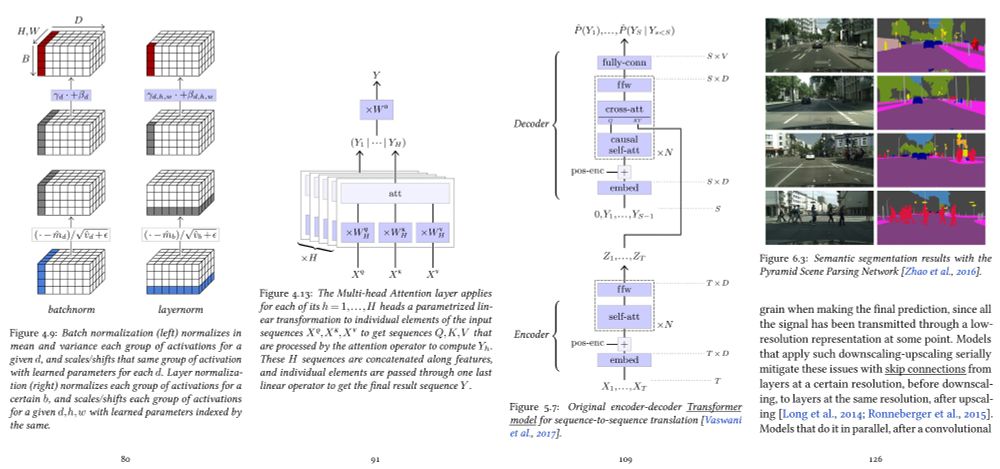

My deep learning course at the University of Geneva is available on-line. 1000+ slides, ~20h of screen-casts. Full of examples in PyTorch.

fleuret.org/dlc/

And my "Little Book of Deep Learning" is available as a phone-formatted pdf (nearing 700k downloads!)

fleuret.org/lbdl/

November 26, 2024 at 6:15 AM

Reposted by Sasha Rush

November 26, 2024 at 4:02 PM

Reposted by Sasha Rush

Hello Bluesky! 🔵 We start our account by having our third guest for Ask Me Anything session #3DV2025AMA!

Noah Snavely @snavely.bsky.social from Cornell & Google DeepMind! 🌟

🕒 You now have 24 HOURS to ask him anything — drop your questions in the comments below!

Keep it engaging but respectful!

November 26, 2024 at 3:33 PM

Rare personal tweet:

Subletting our furnished apartment in Brooklyn for the spring at a significant discount. It's quite nice and in a fun location. Email me if you are interested and I will send pictures.

November 25, 2024 at 8:39 PM

Several incredible NeurIPS tutorials this year. Worth navigating through the Swifties.

Another nano gem from my amazing student

Piotr Nawrot!

A repo & notebook on sparse attention for efficient LLM inference: github.com/PiotrNawrot/...

This will also feature in my #NeurIPS 2024 tutorial "Dynamic Sparsity in ML" with André Martins: dynamic-sparsity.github.io Stay tuned!

November 21, 2024 at 10:01 PM

Reposted by Sasha Rush

Another nano gem from my amazing student

Piotr Nawrot!

A repo & notebook on sparse attention for efficient LLM inference: github.com/PiotrNawrot/...

This will also feature in my #NeurIPS 2024 tutorial "Dynamic Sparsity in ML" with André Martins: dynamic-sparsity.github.io Stay tuned!

November 20, 2024 at 12:51 PM

Reposted by Sasha Rush

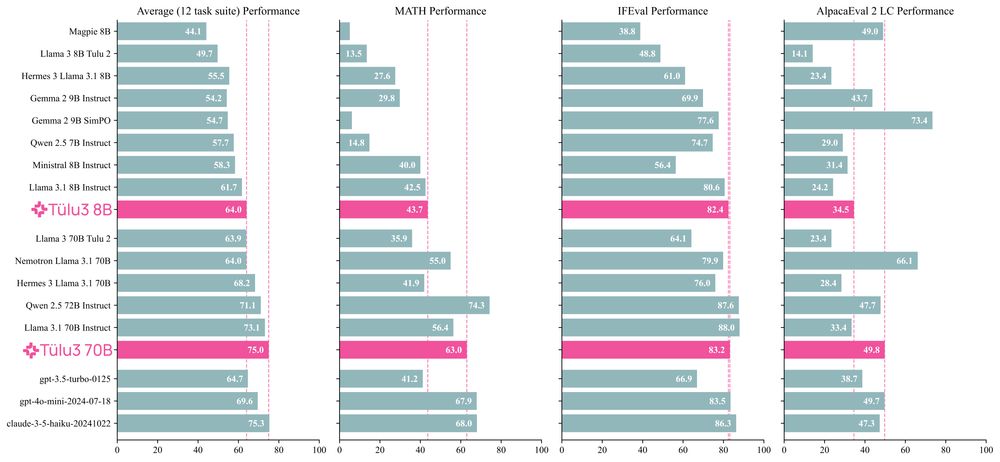

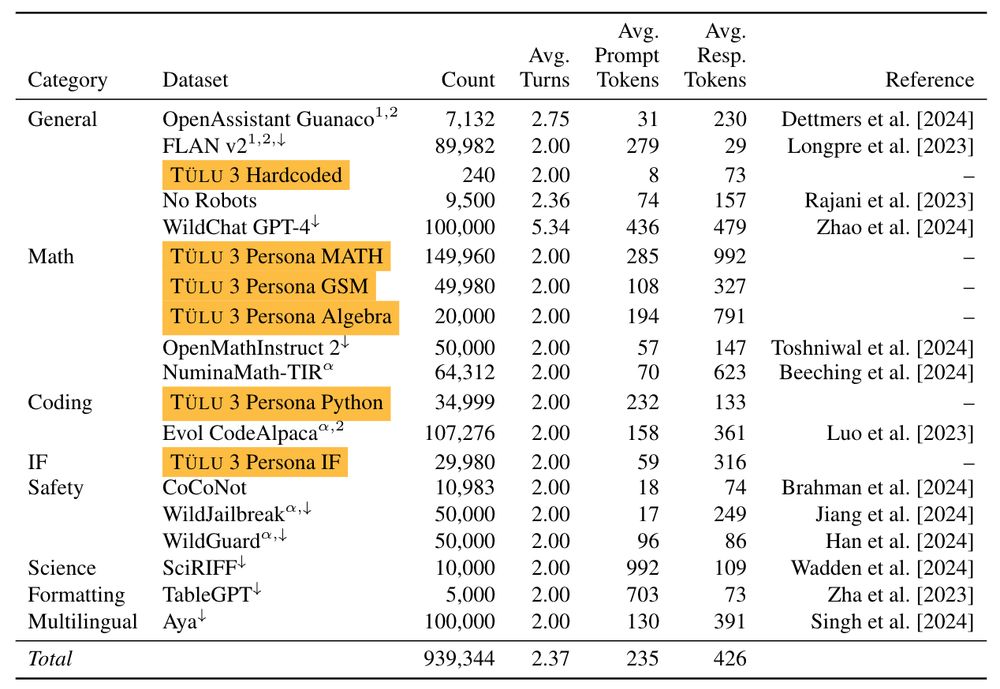

I've spent the last two years scouring all available resources on RLHF specifically and post training broadly. Today, with the help of a totally cracked team, we bring you the fruits of that labor — Tülu 3, an entirely open frontier model post training recipe. We beat Llama 3.1 Instruct.

Thread.

November 21, 2024 at 5:01 PM

Discrete diffusion has become a very hot topic again this year. Dozens of interesting ICLR submissions and some exciting attempts at scaling. Here's a bibliography on the topic from the Kuleshov group (my open office neighbors).

github.com/kuleshov-gro...

GitHub - kuleshov-group/awesome-discrete-diffusion-models: A curated list for awesome discrete diffusion models resources.

A curated list for awesome discrete diffusion models resources. - kuleshov-group/awesome-discrete-diffusion-models

github.com

November 21, 2024 at 6:39 PM

A disproportionate number of successful CS academics have some intense cardio hobby. Took me some years to understand.

Every time I see someone post this image it goes viral

November 21, 2024 at 12:37 AM