https://cims.nyu.edu/~andrewgw

"Small Batch Size Training for Language Models: When Vanilla SGD Works, and Why Gradient Accumulation Is Wasteful" (arxiv.org/abs/2507.07101)

(I can confirm this holds for RLVR, too! I have some experiments to share soon.)

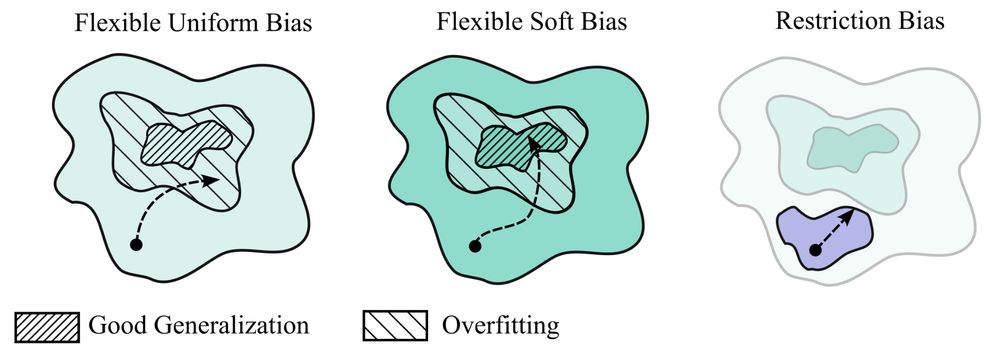

@ualberta.bsky.social + Amii.ca Fellow! 🥳 Recruiting students to develop theories of cognition in natural & artificial systems 🤖💭🧠. Find me at #NeurIPS2025 workshops (speaking coginterp.github.io/neurips2025 & organising @dataonbrainmind.bsky.social)

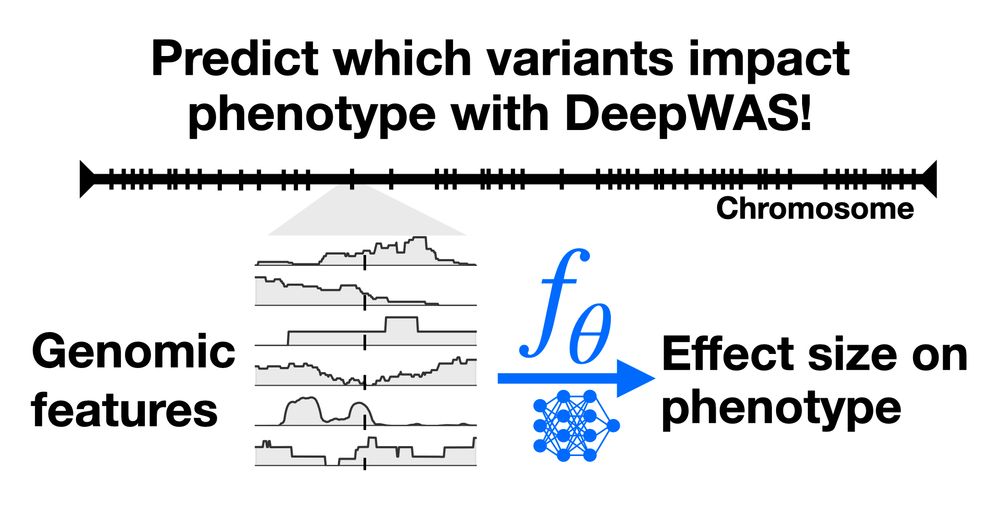

nyudatascience.medium.com/deep-learnin...

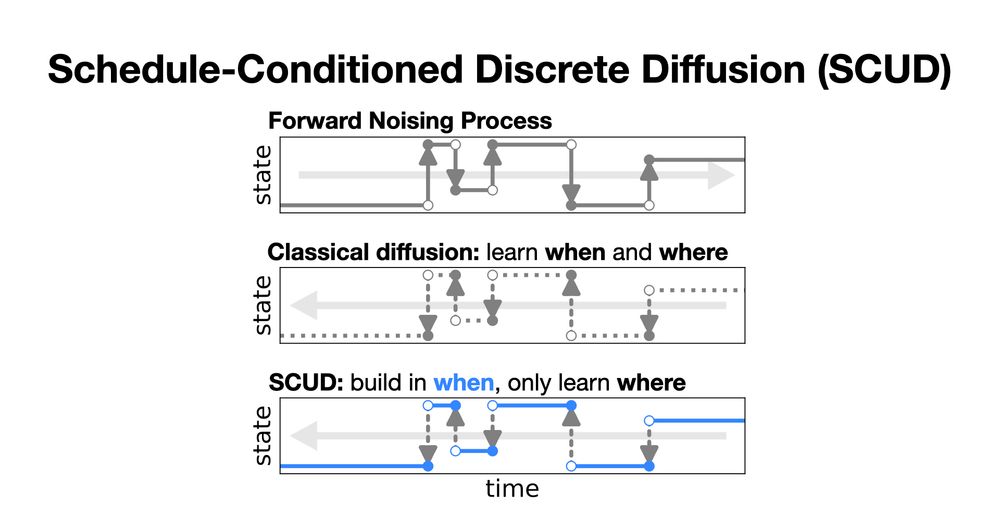

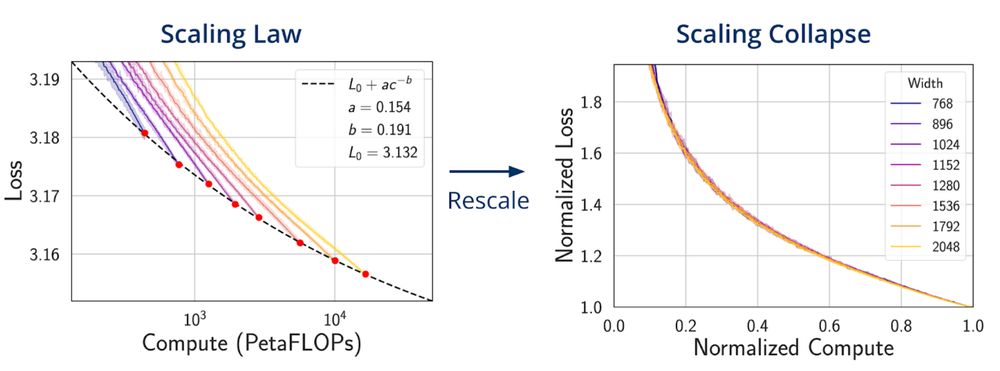

arxiv.org/abs/2507.02119

1/3
