Seth Axen 🪓

@sethaxen.com

Empowering scientists with machine learning @mlcolab.org. Sometimes #Bayesian. Usually #FOSS. Preferably in #JuliaLang.

Expat: 🇺🇸 ➡️ 🇩🇪

💼 On the job market (remote/Stuttgart area)

sethaxen.com

Pinned

Seth Axen 🪓

@sethaxen.com

· Nov 18

Quick intro 👋! I help scientists use ML in their research. My background is in structural biology, but I now mostly post about FOSS topics. I've worked on tools for manifolds and autodiff, but these days I mostly focus on tools for Bayesian analysis, e.g. ArviZ. In my free time, I powerlift.

Reposted by Seth Axen 🪓

We figured out flow matching over states that change dimension. With "Branching Flows", the model decides how big things must be! This works wherever flow matching works, with discrete, continuous, and manifold states. We think this will unlock some genuinely new capabilities.

November 10, 2025 at 9:10 AM

Reposted by Seth Axen 🪓

Fisher meets Feynman! 🤝

We use score matching and a trick from quantum field theory to make a product-of-experts family both expressive and efficient for variational inference.

To appear as a spotlight @ NeurIPS 2025.

#NeurIPS2025 (link below)

October 27, 2025 at 12:51 PM

Yesterday I asked the 7-year-old how he would describe what I do for work.

His response: "You do math all day, get paid for no reason, and print things."

October 24, 2025 at 8:55 AM

"I'm sure I don't have to tell you that #JuliaLang is awesome and Matlab is not" #HeardAtJuliaCon #JuliaCon

October 2, 2025 at 3:13 PM

Reposted by Seth Axen 🪓

MC Stan is here! Follow for the latest Stan news, and tag if you want us to repost your posts about new papers, packages, courses, etc. about Stan

September 17, 2025 at 3:16 PM

Reposted by Seth Axen 🪓

From hackathon to release: sbi v0.25 is here! 🎉

What happens when dozens of SBI researchers and practitioners collaborate for a week? New inference methods, new documentation, lots of new embedding networks, a bridge to pyro and a bridge between flow matching and score-based methods 🤯

1/7 🧵

September 9, 2025 at 3:00 PM

Reposted by Seth Axen 🪓

My paper with Loucas Pillaud-Vivien and Lawrence Saul, “Variational Inference for Uncertainty Quantification: An Analysis of Trade-offs”, has been accepted for publication in the Journal of Machine Learning Research.

📃 arxiv.org/abs/2403.13748

🧵 1/

September 10, 2025 at 12:28 AM

Working from home and overhearing my 7-year-old trying to explain "countable infinity" to my partner using donuts.

September 8, 2025 at 4:38 PM

Reposted by Seth Axen 🪓

Fun read of their amazing contributions to the SBI hackathon! 🥐

The SBI-Pyro bridge that @sethaxen.com built has a lot of potential I believe. I'll actually be presenting this work at @euroscipy.bsky.social this Wednesday - excited to share this with a broader audience.

euroscipy.org/talks/KCYYTF/

August 18, 2025 at 12:15 PM

Reposted by Seth Axen 🪓

All three books I've co-authored are freely available online for non-commercial use:

- #Bayesian Data Analysis, 3rd ed (aka BDA3) at stat.columbia.edu/~gelman/book/

- #Regression and Other Stories at avehtari.github.io/ROS-Examples/

- Active Statistics at avehtari.github.io/ActiveStatis...

August 2, 2024 at 1:35 PM

Pro-tip: don't be the poor sod that adds a daily cron trigger to a GitHub Actions workflow that often fails. Long after you've left the project, you will get *daily* failure notifications, and the only way out is to trick some other poor sod into editing the workflow. docs.github.com/en/actions/c...

August 11, 2025 at 3:02 PM

Like the authors, I also found this result disturbing.

The crux is that *conditioning* a distribution to lie on a manifold is *not* in general the same thing as *restricting* the distribution to the manifold (i.e. constraining the support and re-normalizing).

Nathaël Da Costa, Marvin Pförtner, Jon Cockayne: Constructive Disintegration and Conditional Modes https://arxiv.org/abs/2508.00617 https://arxiv.org/pdf/2508.00617 https://arxiv.org/html/2508.00617

August 8, 2025 at 9:34 AM

For the Bayesians, it's the Monty Hall problem.

August 8, 2025 at 8:29 AM

Finally, a part of my Fairphone 5 stopped working. For any other phone, I'd be dropping >500€ right now to replace it with a marginally better brand new phone that would last me just 2-3 years. Instead, I paid 41€, had the new part in 4 days, replaced it in 5 minutes, and the phone is good as new!

August 5, 2025 at 12:46 PM

Sharing this here a bit late, but @vstaros.bsky.social and I wrote a little something about our experience contributing to the @sbi-devs.bsky.social (simulation-based inference) hackathon. @mlcolab.org @mackelab.bsky.social

We were obviously very hungry while writing.

A retrospective on the 2025 SBI Hackathon

You walk into a bakery, take one bite of a still-warm pastry, and think: “Whoa - there’s rye flour, a hint of orange zest, maybe cardamom… and is that buckwheat honey?” From that single taste you begi...

mlcolab.org

July 31, 2025 at 11:54 AM

Spent a pleasant morning organizing GitHub repos and notifications, responding to issues and PRs, and answering questions on Slack. Reminder that FOSS is often about building a community as much as building software!

July 24, 2025 at 9:20 AM

Reposted by Seth Axen 🪓

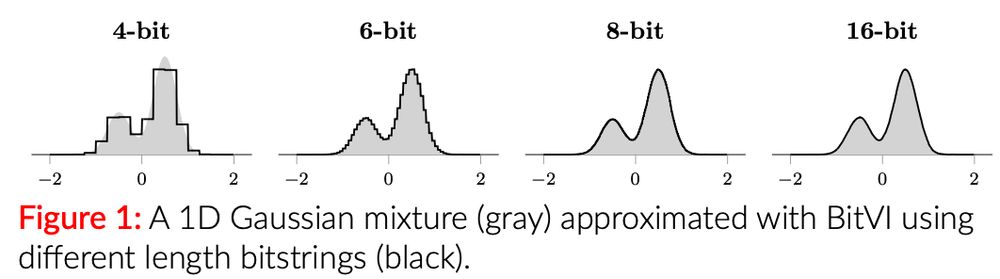

Remember that computers use bitstrings to represent numbers? We exploit this in our recent @auai.org paper and introduce #BitVI.

#BitVI directly learns an approximation in the space of bitstring representations, thus capturing complex distributions under varying numerical precision regimes.

July 21, 2025 at 11:41 AM

If you run a DuckDuckGo search for "qr decomposition", it returns a QR code for "decomposition".

July 15, 2025 at 9:24 AM

Reposted by Seth Axen 🪓

Can LLMs access and describe their own internal distributions? With my colleagues at Apple, I invite you to take a leap forward and make LLM uncertainty quantification what it can be.

📄 arxiv.org/abs/2505.20295

💻 github.com/apple/ml-sel...

🧵1/9

July 3, 2025 at 9:08 AM

This paper is an absolute work of art.

"I’m really sorry, but an unexpected family obligation has come up" - How well can AIs write social excuses? arxiv.org/pdf/2506.13685

June 20, 2025 at 7:42 AM

Normalize v1.0 of software adding no new features. 1.0 comes with an expectation of greater stability than pre-1.0 releases. Debuting new features in that release makes it less stable, not more. Put the new features in an earlier release and let them stabilize *before* the 1.0.

June 7, 2025 at 9:20 AM

I strongly suspect my 4-year-old has figured out how to vomit at will so he can stay home from preschool and "watch TV."

June 2, 2025 at 10:00 AM

Reposted by Seth Axen 🪓

We are incredibly happy to be able to continue our work of developing new #AI4science across a wide range of disciplines with incredible colleagues in #physics, #neuroscience, #cogsci, #geoscience, #linguistics, #economics, #medicine, #philosophy, #law and #anthropology!

@unituebingen.bsky.social

We're super happy: Our Cluster of Excellence will continue to receive funding from the German Research Foundation @dfg.de ! Here’s to 7 more years of exciting research at the intersection of #machinelearning and science! Find out more: uni-tuebingen.de/en/research/... #ExcellenceStrategy

May 23, 2025 at 7:11 AM

I am literally begging you, if in your paper you summarize the features of software *that you do not use*, at the bare minimum have a user of that software (even better, a maintainer) look over that bit of text before submitting.

Sincerely, tired-of-software-I-worked-on-being-misrepresented

May 23, 2025 at 8:04 AM

Reposted by Seth Axen 🪓

Amazing Best Paper award talk on Variational inference theory by Charles Margossian @charlesm993.bsky.social at #aistats

What does VI learn and under which conditions?

-> arxiv.org/pdf/2410.11067

May 4, 2025 at 5:17 AM

![Comic. A snake slithers around a hypercube. No two non-consecutive parts of its coils can be on adjacent corners. [Three small 4-dimensional hypercubes showing disallowed options with one large cube with snake wrapped around it. Dimensions = 4. Max Length - 7.] Snake(N) = Longest snake that can fit in an n-dimensional hypercube. Snake(N=1, 2, 3…8) = 1, 2, 4, 7, 13, 26, 50, 98. Snake(N>8) = UNSOLVED. [caption] It turns out every scientific field has a key thought experiment that involves putting a cute animal in a weird box for no reason. So far, quantum mechanics and graph theory have found theirs, but most other fields are still working on it.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:cz73r7iyiqn26upot4jtjdhk/bafkreicjbjwxfe3ef6styvrvsjtt63znh24tfuqxlceewi3b6t7zfu756i@jpeg)