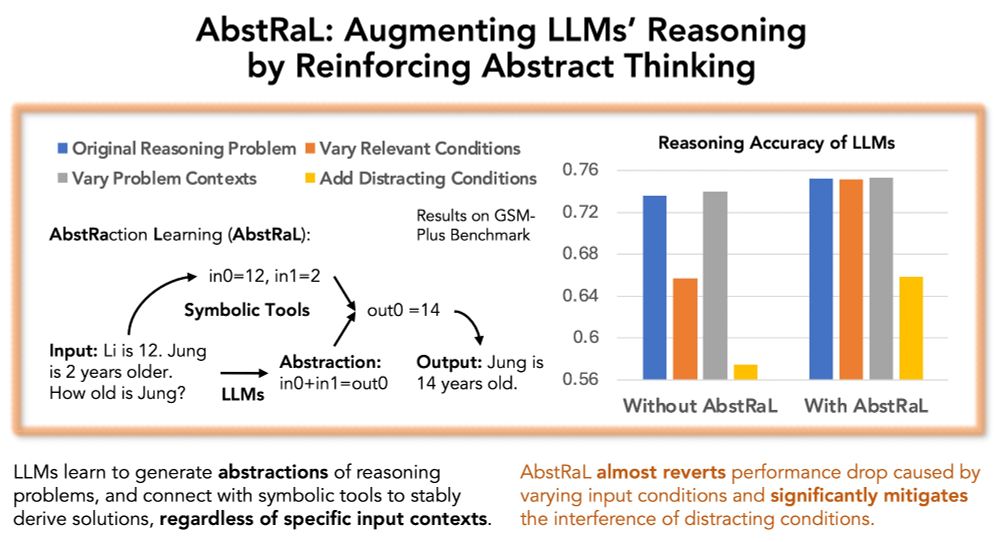

📄 arxiv.org/abs/2505.20295

💻 github.com/apple/ml-sel...

🧵1/9

Details & Application ➡️ jobs.apple.com/en-my/detail...

The tutorial to do so is here: ott-jax.readthedocs.io/tutorials/ne...

The Apple Machine Learning Research (MLR) team in Paris is hiring a few interns to do cool research for ~6 months 🚀🚀 & work towards publications/OSS.

Check requirements and apply: ➡️ jobs.apple.com/en-us/detail...

More❓→ ✉️ mlr_paris_internships@group.apple.com

📑 arxiv.org/abs/2510.02375

[1/10]🧵

Relying on optimal transport couplings (to pick noise and data pairs) should, in principle, be helpful to guide flow matching

🧵
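To make the idea of picking noise and data pairs with an optimal transport coupling concrete, here is a minimal sketch, not the method from the linked paper. It assumes a tiny minibatch, a squared Euclidean cost, and a brute-force search over permutations. The helper names (`ot_pairing`, `flow_matching_targets`) are hypothetical, and a real setup would use a proper solver (for example from OTT-JAX) instead of enumeration.

```python
import itertools
import random


def squared_distance(x, y):
    """Squared Euclidean distance between two points given as lists."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def ot_pairing(noise, data):
    """Return the permutation p minimizing sum_i ||noise[i] - data[p[i]]||^2.

    Assumption: brute force over all n! permutations, so this is only
    usable for very small batches. It is an illustration, not a solver.
    """
    n = len(noise)
    cost = [[squared_distance(x, y) for y in data] for x in noise]
    best_perm, best_cost = None, float("inf")
    for perm in itertools.permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_cost:
            best_perm, best_cost = perm, total
    return list(best_perm), best_cost


def flow_matching_targets(noise, data, perm, t):
    """For each coupled pair (x0, x1), return the linear interpolant
    x_t = (1 - t) * x0 + t * x1 and the velocity target x1 - x0."""
    targets = []
    for i, x0 in enumerate(noise):
        x1 = data[perm[i]]
        x_t = [(1 - t) * a + t * b for a, b in zip(x0, x1)]
        velocity = [b - a for a, b in zip(x0, x1)]
        targets.append((x_t, velocity))
    return targets


# Toy minibatch: 5 noise samples around 0, 5 "data" samples around 3.
rng = random.Random(0)
noise = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(5)]
data = [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(5)]

perm, ot_cost = ot_pairing(noise, data)
# Cost of the default independent pairing (noise[i] with data[i]).
independent_cost = sum(squared_distance(noise[i], data[i]) for i in range(5))
targets = flow_matching_targets(noise, data, perm, t=0.5)
```

Comparing `ot_cost` with `independent_cost` shows how much transport cost the coupling saves on this batch. The coupled pairs are then what a flow matching loss would regress on.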

arxiv.org/pdf/2505.20295

Here's everything from me and other folks at Apple: machinelearning.apple.com/updates/appl...

Sign-up and join our Interspeech tutorial: Speech Technology Meets Early Language Acquisition: How Interdisciplinary Efforts Benefit Both Fields. 🗣️👶

www.interspeech2025.org/tutorials

⬇️ (1/2)

www.nature.com/articles/s41...

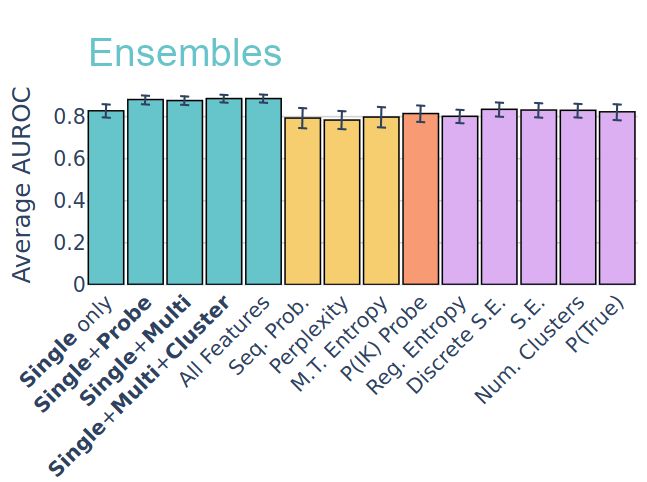

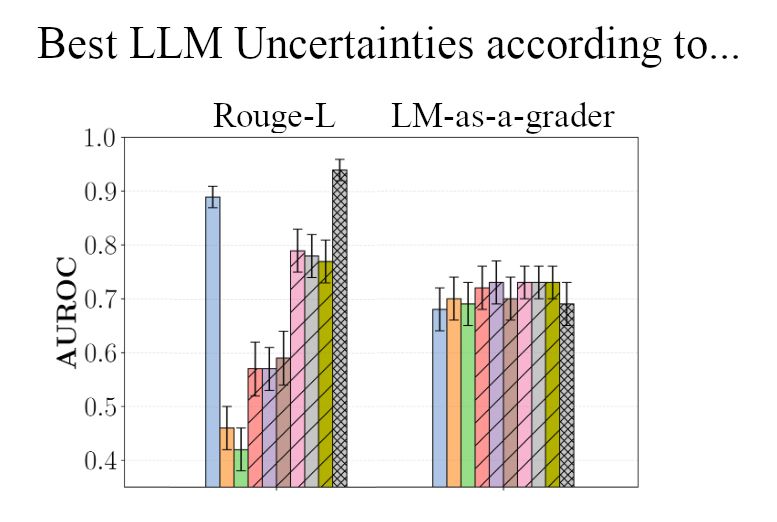

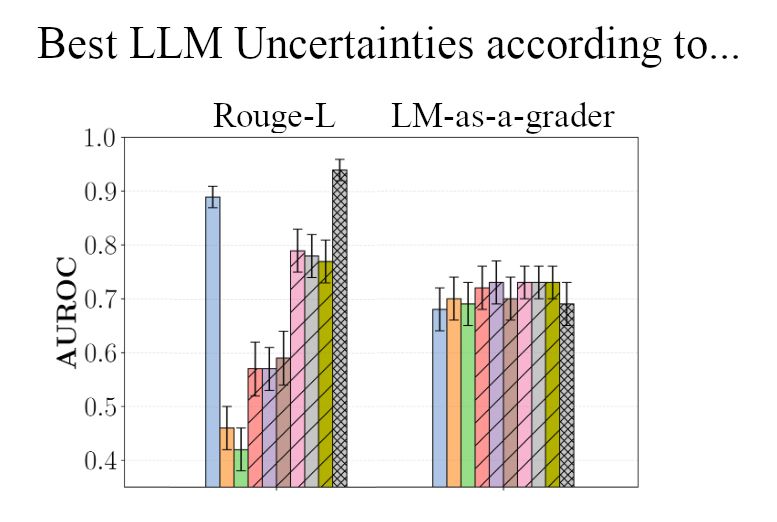

📄 openreview.net/forum?id=QKR...

NeurIPS: Sunday, East Exhibition Hall A, Safe Gen AI workshop

Check out our work at NeurIPS!

Feel free to reach out for a chat!

📄 openreview.net/forum?id=jGt...

NeurIPS: Sunday, East Exhibition Hall A, Safe Gen AI workshop

After 7 amazing years at Google Brain/DM, I am joining OpenAI. Together with @xzhai.bsky.social and @giffmana.ai, we will establish the OpenAI Zurich office. Proud of our past work and looking forward to the future.
