Anchor model: 0.8GB @ RAM

Level 1: 39GB @ Flash

Level 2: 155GB @ External Disk

Level 3: 618GB @ Cloud

Total fetch time: 38ms (vs. 198ms for a single-level flat memory bank). [9/10]
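The post gives tier sizes and measured fetch times, but not per-tier latencies or block sizes. As a back-of-the-envelope sketch, assume each memory level contributes one block read from its own tier, and model each read as latency plus size over bandwidth. All tier numbers in the usage example are hypothetical placeholders. They do not reproduce the 38ms or 198ms figures and are not the paper's measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """A storage tier. Latency and bandwidth values are hypothetical placeholders."""
    name: str
    latency_ms: float      # fixed cost per read request
    bandwidth_gb_s: float  # sustained read bandwidth in GB/s


def fetch_time_ms(block_gb: float, tier: Tier) -> float:
    """Time to read one memory block of `block_gb` gigabytes from `tier`."""
    return tier.latency_ms + block_gb / tier.bandwidth_gb_s * 1000.0


def total_fetch_ms(plan: list[tuple[float, Tier]], parallel: bool = False) -> float:
    """Combine per-level reads: sequential reads add up, parallel reads cost the slowest one."""
    times = [fetch_time_ms(gb, tier) for gb, tier in plan]
    return max(times) if parallel else sum(times)


if __name__ == "__main__":
    # Hypothetical tiers; the anchor model stays resident in RAM and is not fetched.
    flash = Tier("flash", latency_ms=0.1, bandwidth_gb_s=2.0)
    disk = Tier("external disk", latency_ms=1.0, bandwidth_gb_s=0.5)
    cloud = Tier("cloud", latency_ms=20.0, bandwidth_gb_s=0.1)
    plan = [(0.004, flash), (0.002, disk), (0.001, cloud)]
    print(f"sequential: {total_fetch_ms(plan):.1f} ms")
    print(f"parallel:   {total_fetch_ms(plan, parallel=True):.1f} ms")
```

Whether levels are read one after another or concurrently is itself an assumption here, which is why the sketch exposes both options.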

Unlike typical architectures, the proposed memory bank setup enables controlled access to parametric knowledge (e.g., for training data privacy). The accompanying figure shows the impact of memory bank blocking on performance. [7/10]
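A minimal sketch of what blocking could look like, assuming memories are stored per cluster in a hierarchy and a context fetches the blocks along its cluster path. The tuple cluster ids, the `bank` dict, and the rule that blocking a cluster also blocks all of its descendants are hypothetical choices for illustration, not the paper's implementation.

```python
def is_blocked(cluster: tuple, blocked: set) -> bool:
    """A cluster is blocked if it, or any ancestor (a prefix of its id), is in `blocked`."""
    return any(cluster[: len(b)] == b for b in blocked)


def fetch_memories(cluster_path: list, bank: dict, blocked: set) -> list:
    """Collect memory blocks along a context's cluster path, skipping blocked clusters.

    cluster_path: cluster ids from level 1 down to the deepest level (hypothetical layout)
    bank: maps cluster id -> memory block (any payload, e.g. weight tensors)
    blocked: cluster ids whose knowledge must not be used at inference time
    """
    return [bank[c] for c in cluster_path if not is_blocked(c, blocked)]


if __name__ == "__main__":
    bank = {(3,): "m3", (3, 7): "m37", (3, 7, 2): "m372"}
    path = [(3,), (3, 7), (3, 7, 2)]
    print(fetch_memories(path, bank, blocked={(3, 7)}))  # only the level-1 block survives
```

Because the anchor model never holds the blocked knowledge in its own weights, dropping a cluster's blocks at fetch time is enough to withhold it in this setup.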

For the text completion task "Atomic number of [element-name] is...", the baseline model (purple) has 17% accuracy for the least frequent elements in DCLM (last bucket). With only 10% added memory, accuracy improves to 83%. [6/10]
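The per-bucket numbers come from grouping elements by how often they appear in the pretraining data. A minimal sketch of that kind of evaluation, assuming equal-size buckets ordered from most to least frequent; the paper may bucket differently (e.g. by frequency ranges), and `bucket_accuracy` is a hypothetical helper.

```python
import math


def bucket_accuracy(records: list[tuple[int, bool]], n_buckets: int) -> list[float]:
    """Accuracy per frequency bucket, from most frequent to least frequent.

    records: (frequency in the pretraining corpus, answered correctly?) per element
    n_buckets: requested number of equal-size buckets (the last bucket may be smaller,
               and fewer buckets are returned if the records run out early)
    """
    ordered = sorted(records, key=lambda r: r[0], reverse=True)
    size = math.ceil(len(ordered) / n_buckets)
    buckets = [ordered[i : i + size] for i in range(0, len(ordered), size)]
    return [sum(ok for _, ok in b) / len(b) for b in buckets]


if __name__ == "__main__":
    # Hypothetical frequencies and outcomes, only to show the shape of the computation.
    records = [(1200, True), (900, True), (40, False), (7, True), (3, False), (1, False)]
    print(bucket_accuracy(records, n_buckets=3))
```

The "last bucket" in the post corresponds to the final entry of the returned list, i.e. the rarest elements.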

💡 Memories help most on tasks requiring specific knowledge, like ARC and TriviaQA. Below, common pretraining benchmarks are categorized by their knowledge specificity and by the accuracy improvement when a 410M model is augmented with 10% memory. [5/10]

👇 A 160M anchor model augmented with memories of 1M to 300M parameters gains more than 10 points in accuracy. The two curves correspond to memory bank sizes of 4.6B and 18.7B parameters. [4/10]

💡 We evaluate 1) FFN-memories (extending SwiGLU's internal dimension), 2) LoRA applied to various layers, and 3) Learnable KV. Larger memories perform better, with FFN-memories significantly outperforming others of the same size. [3/10]
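A minimal pure-Python sketch of what "extending SwiGLU's internal dimension" means, assuming the standard SwiGLU FFN y = W_down(silu(W_gate x) ⊙ W_up x). The memory appends extra rows to the gate and up projections and extra columns to the down projection, so its contribution simply adds to the anchor FFN's output. Helper names such as `attach_ffn_memory` are hypothetical. The per-layer parameter counts for LoRA and learnable KV use common textbook formulas (LoRA of rank r on a d_in×d_out matrix; one key and one value vector of size d_model per KV slot), not figures from the paper.

```python
import math


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def matvec(w: list[list[float]], x: list[float]) -> list[float]:
    """w has shape (out_dim, in_dim) as a list of rows."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """y = W_down (silu(W_gate x) * (W_up x)), elementwise product over hidden units."""
    g = matvec(w_gate, x)
    u = matvec(w_up, x)
    h = [silu(gi) * ui for gi, ui in zip(g, u)]
    return matvec(w_down, h)


def attach_ffn_memory(w_gate, w_up, w_down, mem_gate, mem_up, mem_down):
    """Widen the hidden dimension by m units without mutating the anchor weights.

    mem_gate, mem_up: m new rows of length d_model
    mem_down: d_model rows of length m (new columns of the down projection)
    """
    new_gate = w_gate + mem_gate
    new_up = w_up + mem_up
    new_down = [row + extra for row, extra in zip(w_down, mem_down)]
    return new_gate, new_up, new_down


def ffn_memory_params(d_model: int, m: int) -> int:
    return 3 * d_model * m  # one gate row, one up row, one down column per extra unit


def lora_params(d_in: int, d_out: int, r: int) -> int:
    return r * (d_in + d_out)  # A: r x d_in, B: d_out x r


def learnable_kv_params(d_model: int, n_slots: int) -> int:
    return 2 * n_slots * d_model  # one key and one value vector per slot (assumed)


if __name__ == "__main__":
    x = [1.0, -0.5]
    w_gate = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
    w_up = [[0.3, 0.1], [-0.2, 0.4], [0.6, -0.1]]
    w_down = [[0.2, -0.3, 0.5], [0.1, 0.4, -0.2]]
    mem = ([[0.7, 0.1], [-0.3, 0.5]], [[0.2, -0.6], [0.4, 0.3]], [[0.3, -0.1], [0.2, 0.5]])
    print(swiglu_ffn(x, *attach_ffn_memory(w_gate, w_up, w_down, *mem)))
```

With zero-initialized down-projection columns, the widened FFN reproduces the anchor output exactly, which is a common way to attach new capacity without disturbing the base model. The size helpers make "of the same size" concrete: each memory type's hyperparameter (m, r, or slot count) can be chosen so the budgets match.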

📑 arxiv.org/abs/2510.02375

[1/10]🧵

arxiv.org/pdf/2505.20295

Here's everything from me and other folks at Apple: machinelearning.apple.com/updates/appl...

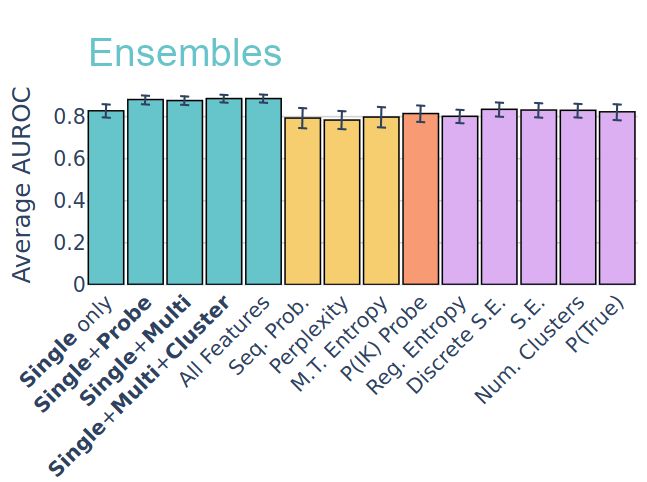

📄 arxiv.org/abs/2505.20295

💻 github.com/apple/ml-sel...

🧵1/9

📄 openreview.net/forum?id=QKR...

NeurIPS: Sunday, East Exhibition Hall A, Safe Gen AI workshop