NLP, with a healthy dose of MT

Based in 🇮🇩, worked in 🇹🇱 🇵🇬, from 🇫🇷

🌍 Is scaling different by language?

🧙♂️ Can we model the curse of multilinguality?

⚖️ Pretrain vs finetune from checkpoint?

🔀 X-lingual transfer scores across langs?

1/🧵

Tuesday @ 4pm

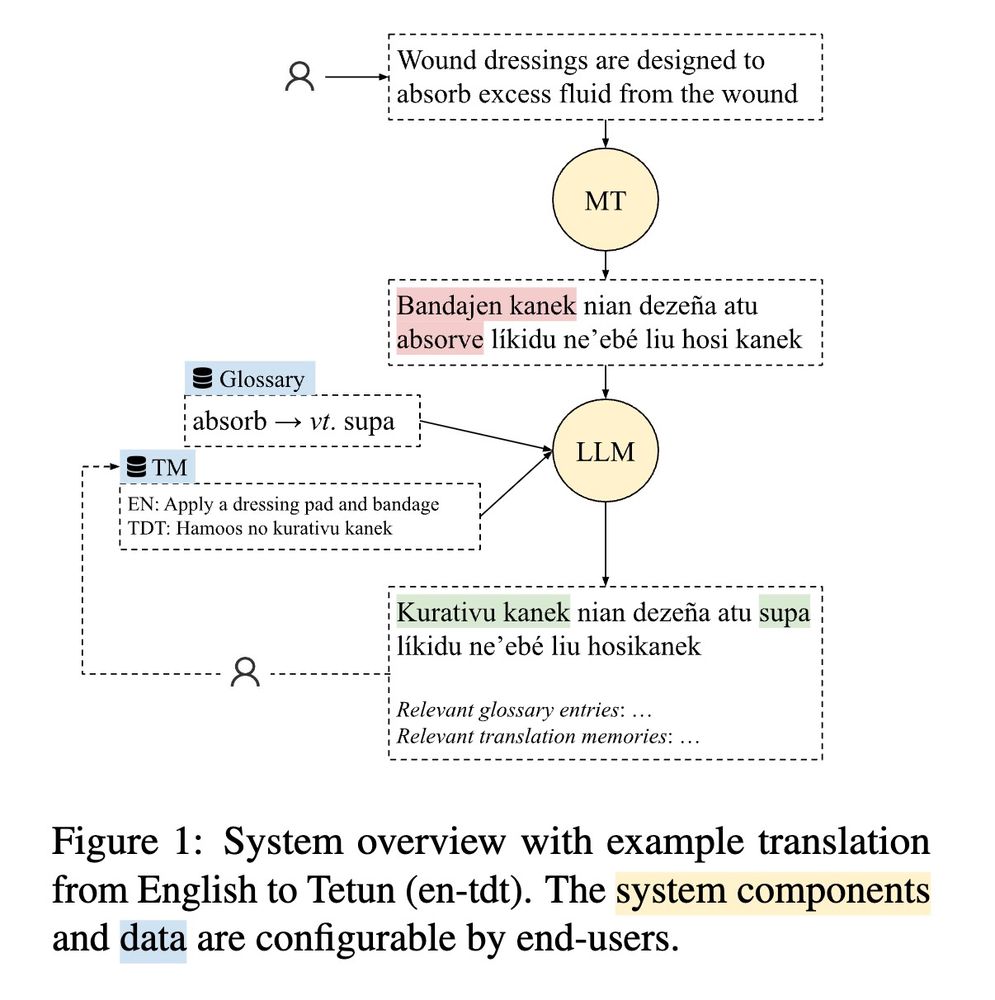

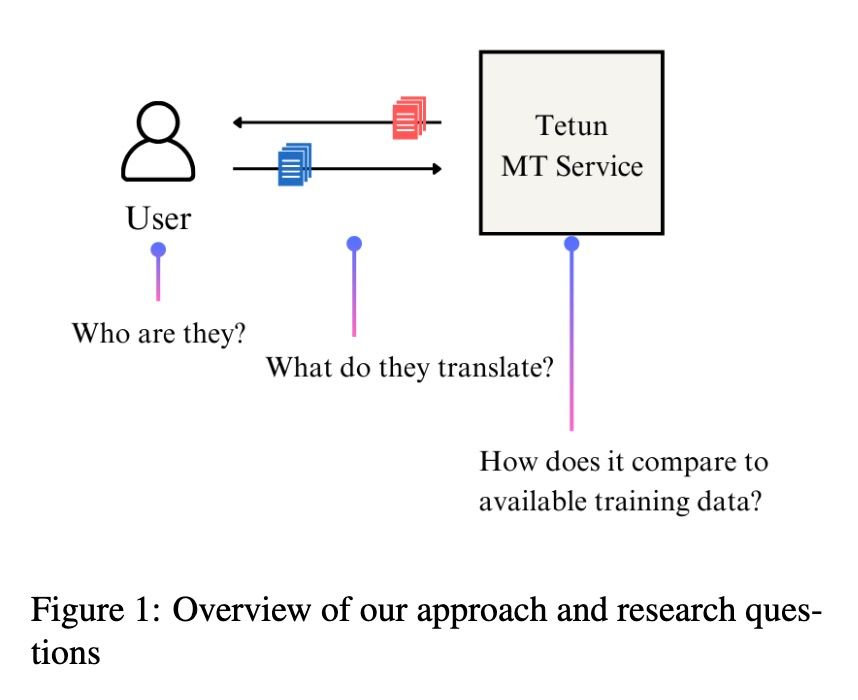

Working with 2 real use cases: medical translation into Tetun 🇹🇱 & disaster relief speech translation in Bislama 🇻🇺

I like that they use linguistic datasets for their experiments, then get results that can contribute to linguistics as a field too! (on structural priming vs L1/L2)

In particular Fig. 2 + this discussion point:

The experiments they run to show this are pretty cool too!

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

If you're doubtful of all these non-reproducible evals on translated multiple choice questions, this paper is for you

arxiv.org/abs/2504.11829

🌍 It reflects experiences from my personal research journey: coming from MT into multilingual LLM research, I missed reliable evaluations and evaluation research…

Just wish it supported eval of closed models (e.g. through LiteLLM?)

github.com/MaLA-LM/Glot...

We’ve just touched down and we’re excited to be here 🌤️🐍

This is the official PyCon AU account, your go-to space for updates, announcements, and all things Python in Australia ✨

Hit that follow button and stay tuned because we’ve got some awesome things coming your way!

#PyConAU

Huggingface: huggingface.co/datasets/goo...

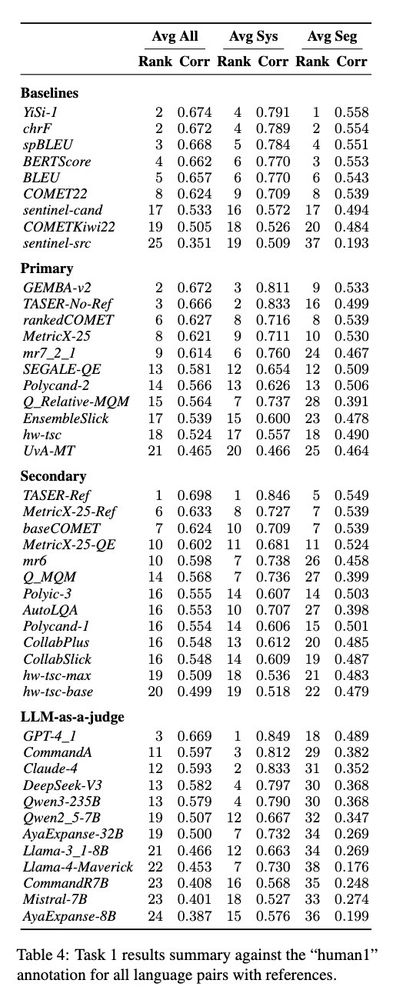

arxiv.org/abs/2410.03182

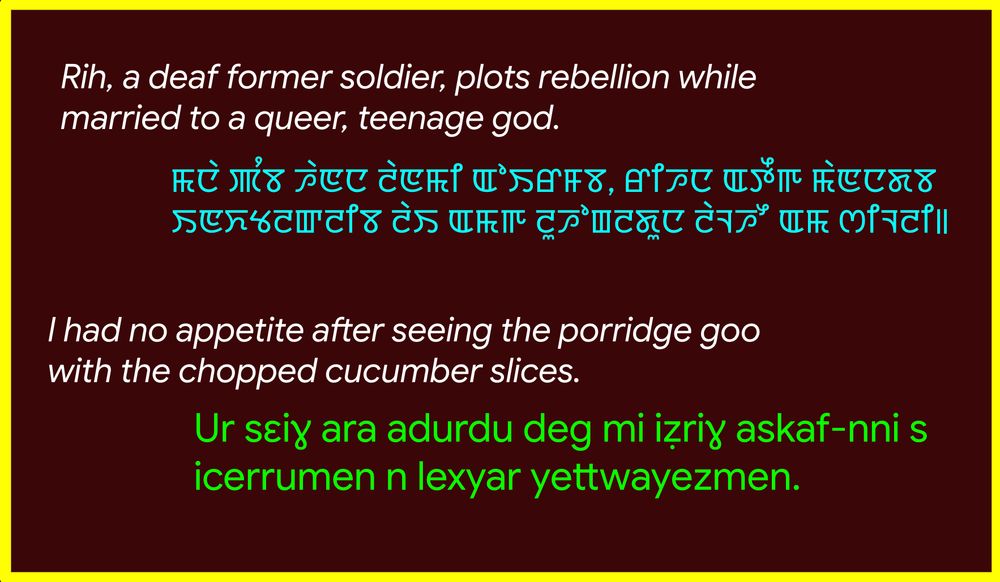

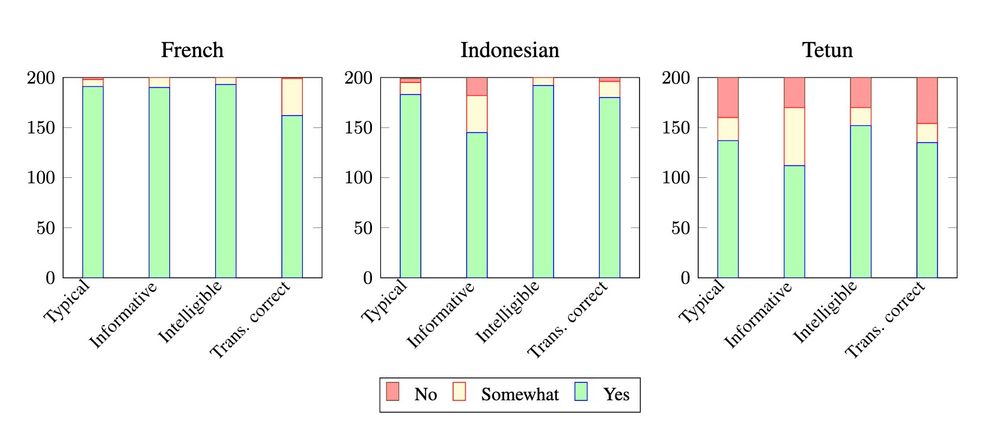

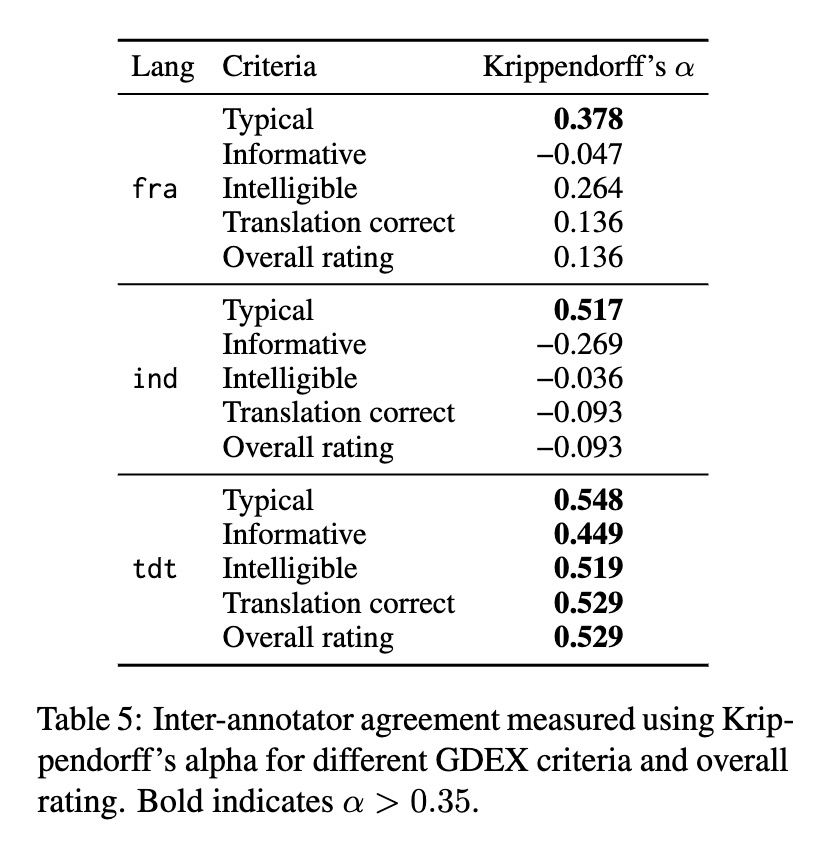

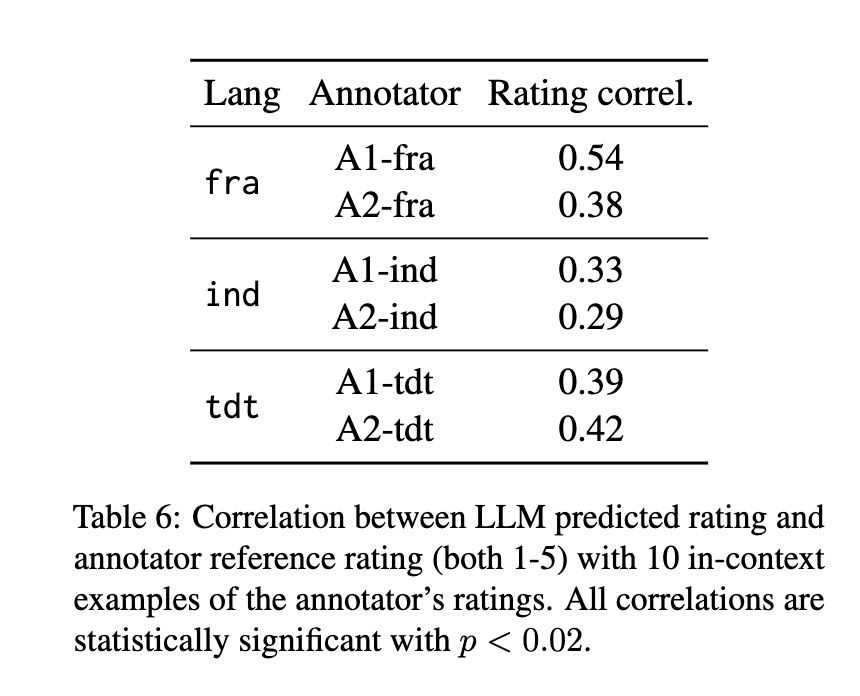

We work with French, Indonesian, and Tetun, and find that annotators don't agree on what makes a "good example", but that LLMs can align with a specific annotator.

slator.com/openais-whis...

aclanthology.org/2024.emnlp-m...

Slightly pretentious but enjoyable read: www.generalist.com/briefing/the...
