NLP, with a healthy dose of MT

Based in 🇮🇩, worked in 🇹🇱 🇵🇬, from 🇫🇷

Paper at aclanthology.org/2025.loresmt..., video presentation at youtu.be/8zenieJWRyg

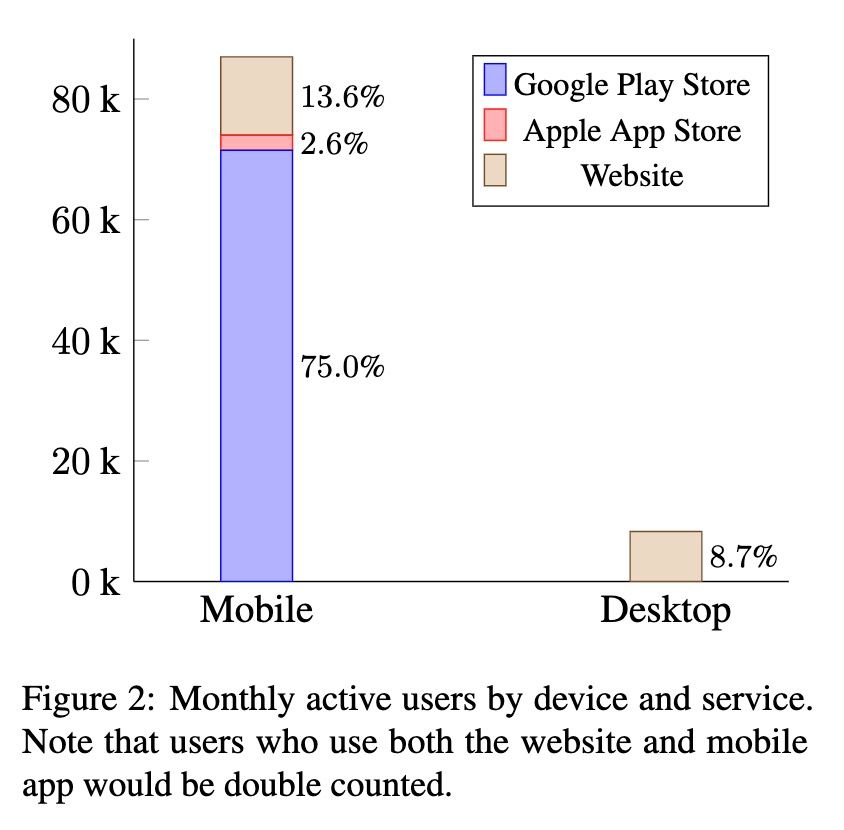

Takeaway: publishing MT models in mobile apps is probably more impactful than setting up a website or a Hugging Face Space.

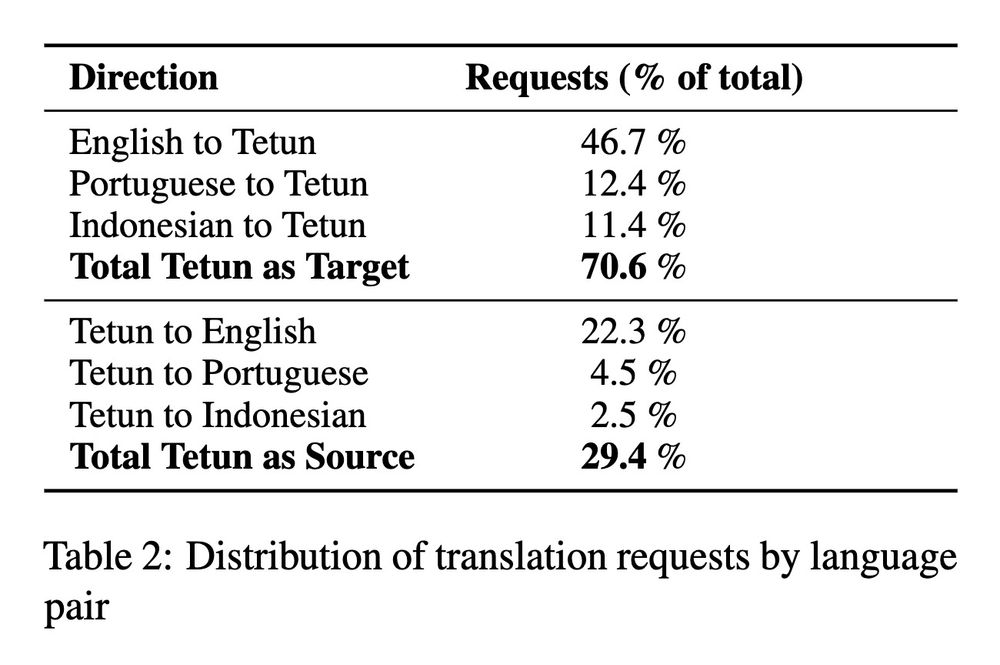

Takeaway for us MT folks: focus on translation into low-resource languages. It's harder, but more impactful.

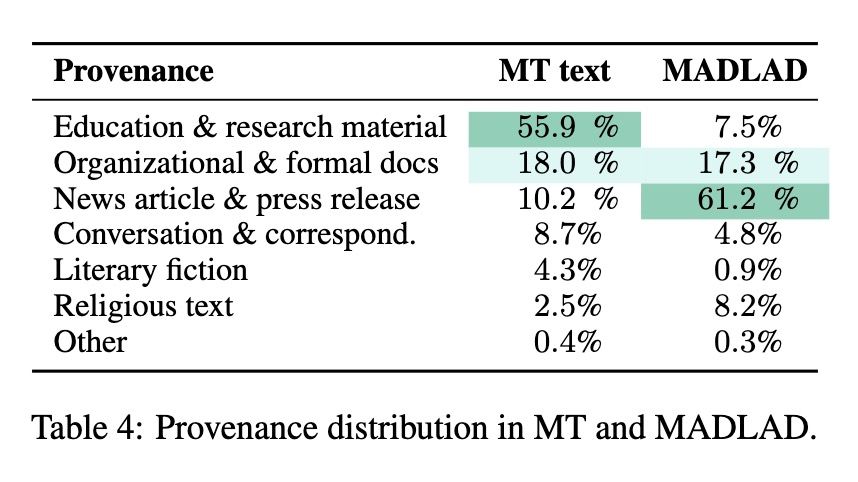

(1) a LOT of usage is for educational purposes (>50% of translated text)

--> This contrasts sharply with Tetun corpora (e.g. MADLAD), which are dominated by news & religion.

Takeaway: don't evaluate MT on overrepresented domains (e.g. religion)! You risk misrepresenting the end-user experience.

I'm especially fond of your "researcher in the loop" method for ensuring wide vocabulary coverage.