https://irenechen.net/

Apply via UC Berkeley CPH or EECS (AI-H) 🌉.

irenechen.net/join-lab/

irenechen.net/sail2025/

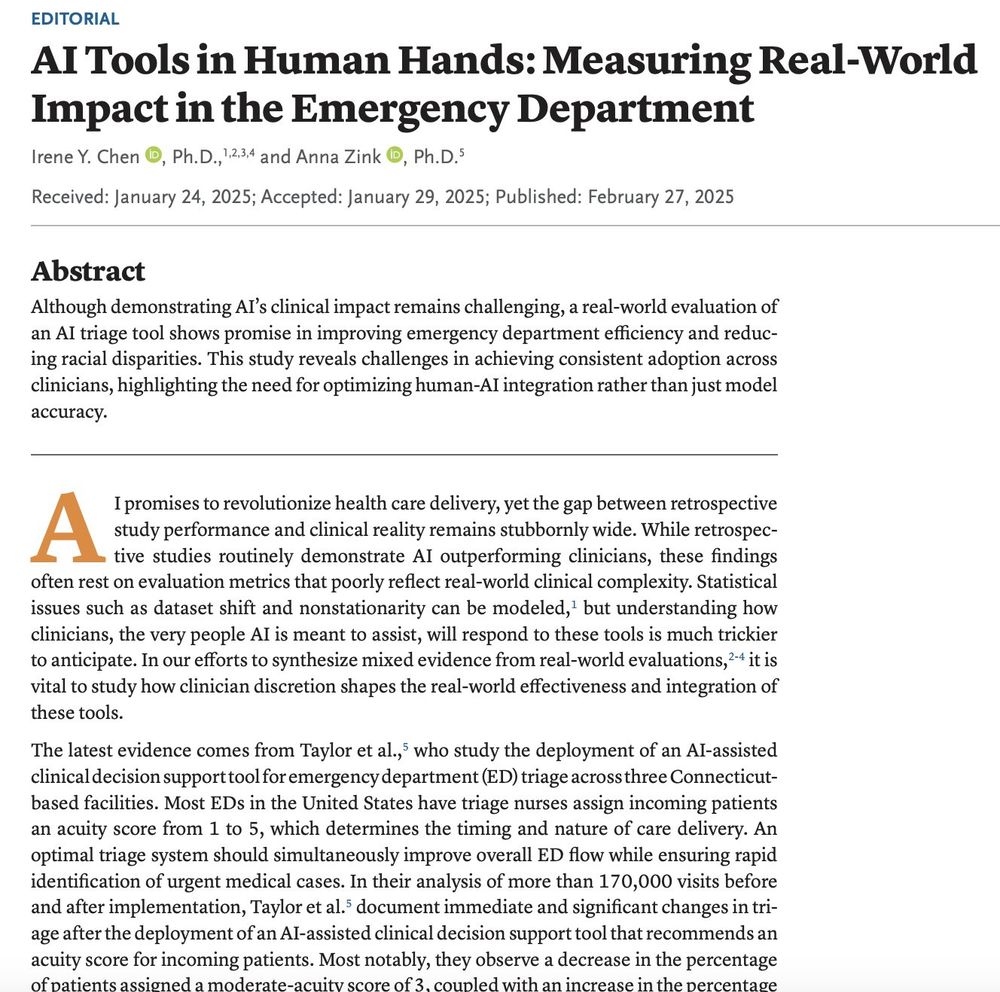

We share thoughts in @ai.nejm.org about a recent AI tool for emergency department triage that: 1) improves wait times and fairness (!), and 2) helps nurses unevenly, depending on their triage ability.

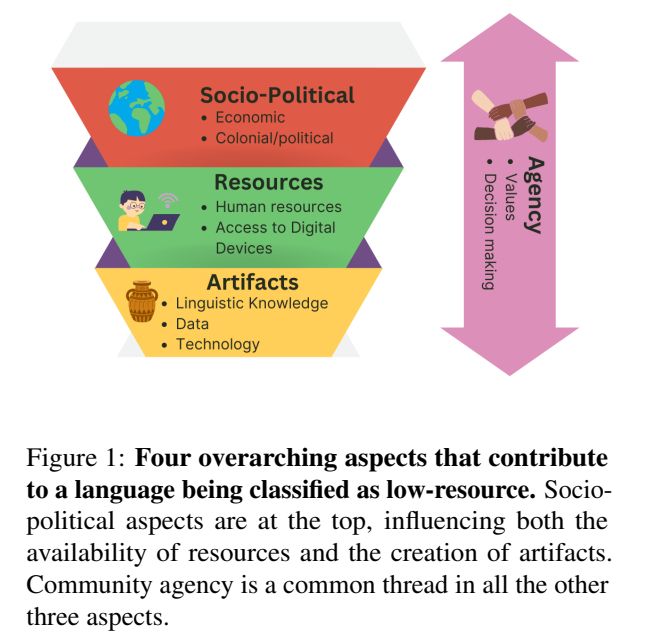

arxiv.org/pdf/2412.07924

aclanthology.org/2024.emnlp-m...

katelee168.github.io/pdfs/arbitra...

Paper: arxiv.org/pdf/2408.04154

Workshop: simons.berkeley.edu/workshops/do...