Webpage: https://ldornfeld.github.io/

www.engelhorn-stiftung.de/forschungspr...

A brief reflection on what skills may (hopefully) remain relevant in the new (hopefully bright) future of LLMs et al.

#AcademicWriting #AcademicChatter

bastian.rieck.me/blog/2025/sk...

Applications are due Dec 1: make sure you include a research statement!

jobs.careers.microsoft.com/global/en/jo...

www.techpolicy.press/generative-a...

We developed salad (sparse all-atom denoising), a family of blazing fast protein structure diffusion models.

Paper: nature.com/articles/s42256-…

Code: github.com/mjendrusch/salad

Data: zenodo.org/records/14711580

1/🧵

Salad is significantly faster than comparable methods, and designing proteins that don't exist in nature can have applications in many scientific fields.

www.nature.com/articles/s42...

Find out which early-career researchers will receive funding this year, what they will be investigating, where they will be based... plus lots of other #ERCStG facts & figures for 2025!

➡️ buff.ly/IsafuFh

#FrontierResearch 🇪🇺 #EUfunded #HorizonEurope

communities.springernature.com/posts/behind...

In which I, somewhat coherently, try to contribute to the whole "Is AI Conscious" debate.

🔗 bastian.rieck.me/blog/2025/co...

#AI #MachineLearning

We addressed a long-standing question that people were already debating in the 1970s, yet it has remained open:

Do proteins in crystals move as they do in solution? We used ring flips to find out.

1/n

AtomWorks (the foundational data pipeline powering it) is perhaps the most exciting part of this release!

Congratulations @simonmathis.bsky.social and team!!! ❤️

bioRxiv preprint: www.biorxiv.org/content/10.1...

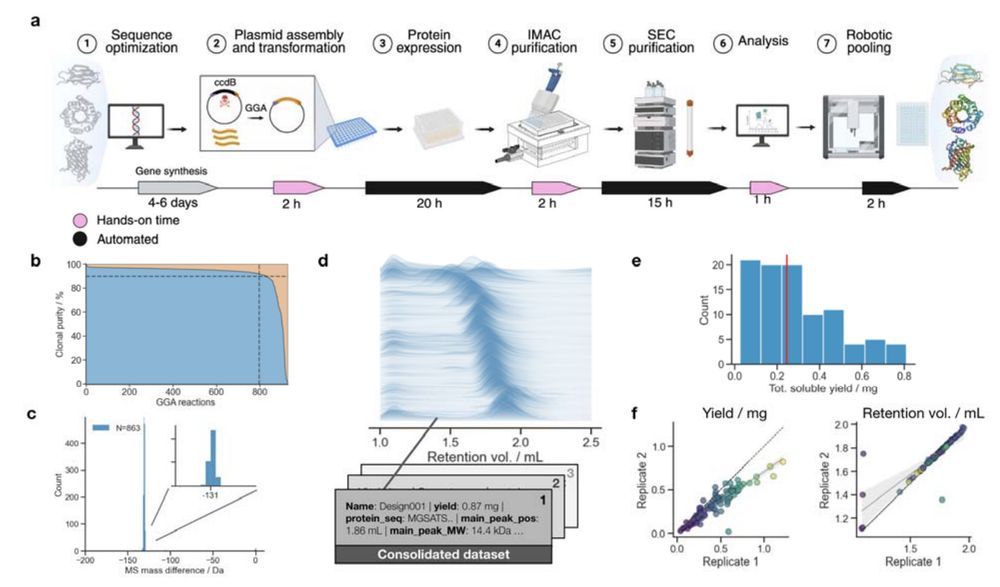

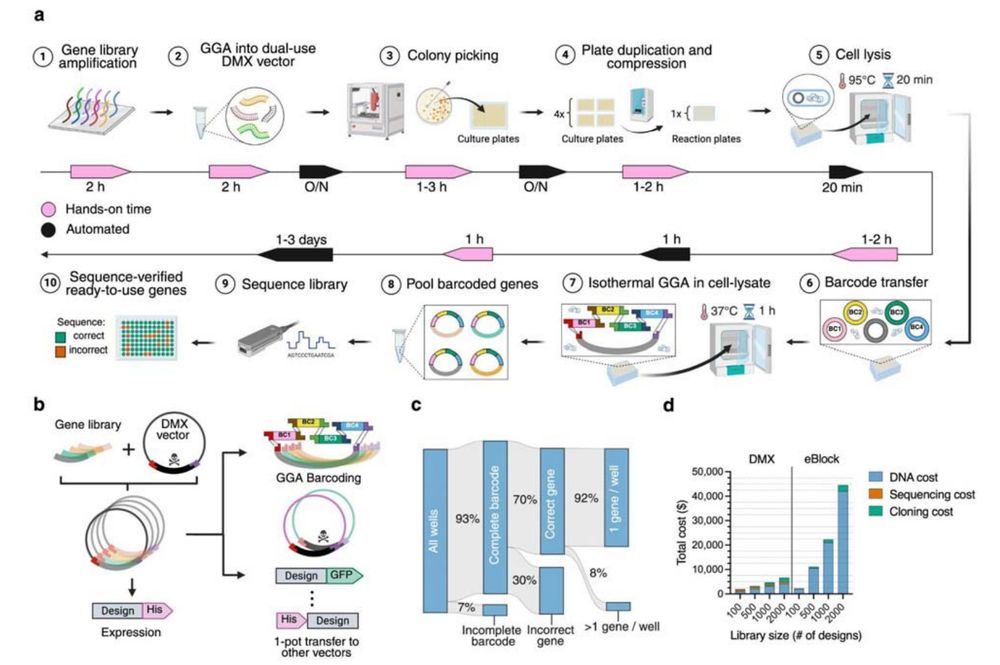

- A rapid, scalable pipeline for producing and characterizing proteins

- A demultiplexing protocol for converting oligopools to clonal constructs

Jason Qian @lfmilles.bsky.social Basile Wicky

Ubiquitin-specific protease 7 (pdb 4M5W)

It’s been a while since I last drew anything. Forgot how fun it is.

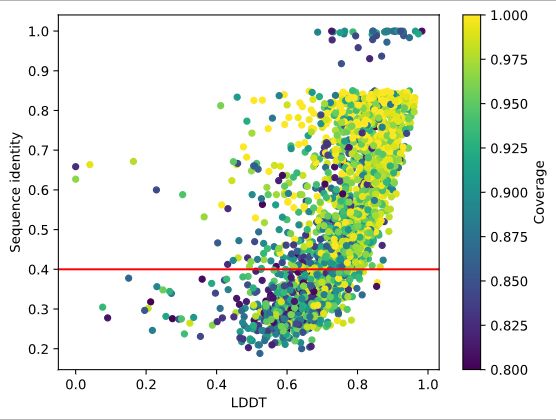

Zhidian Zhang, @milot.bsky.social, @martinsteinegger.bsky.social, and @sokrypton.org

biorxiv.org/content/10.1...

TLDR: We introduce MSA Pairformer, a 111M parameter protein language model that challenges the scaling paradigm in self-supervised protein language modeling🧵

greglandrum.github.io/rdkit-blog/p...

What would that even mean?

Our new ICML paper (poster tomorrow!) formalizes these questions.

One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵
