https://keyonvafa.com

Next (two weeks): Alexander Vezhnevets talks about a new multi-actor generative agent-based model. As usual, *all welcome* #datascience #css💡🤖🔥

Next, we have @keyonv.bsky.social asking: "What are AI's World Models?". Exciting times over here, all welcome!💡🤖🔥

What would that even mean?

Our new ICML paper (poster tomorrow!) formalizes these questions.

One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

medium.com/@gsb_silab/k...

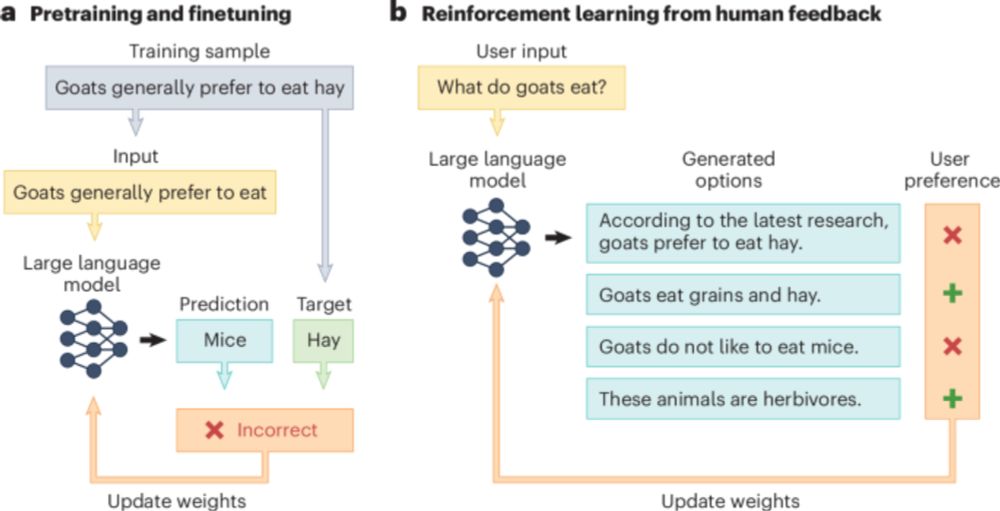

New PNAS paper @susanathey.bsky.social & @keyonv.bsky.social & @Blei Lab:

Bad news: Good predictions ≠ good estimates.

Good news: Good estimates are possible by fine-tuning models differently 🧵

Check out the amazing (original) paper here: www.nature.com/articles/s43...

Today I'll be presenting our spotlight paper on evaluating LLM world models at the 4:30pm poster session (#2301).

On Saturday I'll be co-organizing the Behavioral ML workshop. Hope to see you there!

Paper: arxiv.org/abs/2406.03689

Workshop: behavioralml.org

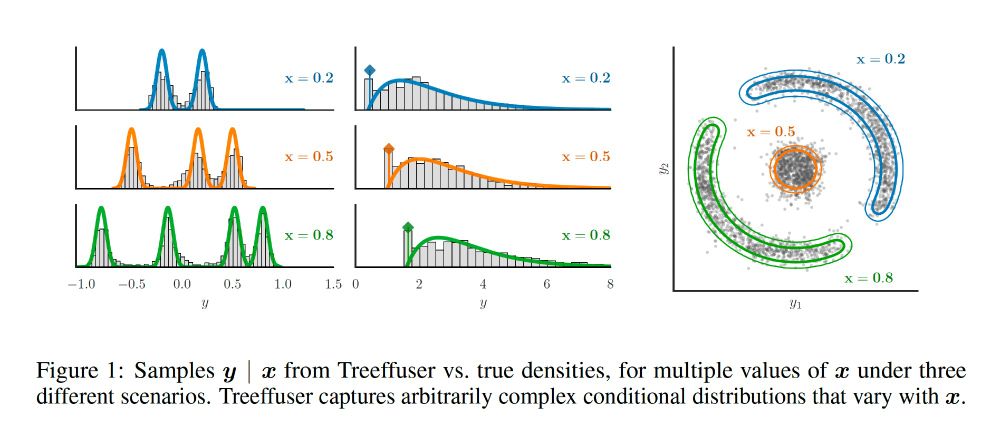

This is joint work with Sudalakshmee Chiniah and my advisor @nkgarg.bsky.social

Description/links below: 1/

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)