https://keyonvafa.com

Models are much likelier to conflate two separate states when they share the same legal next-tokens.

Even when the model reconstructs boards incorrectly, the reconstructed boards often get the legal next moves right.

Models seem to construct "enough of" the board to calculate single next moves.

Inductive biases are great when the number of states is small. But they deteriorate quickly.

Recurrent and state-space models like Mamba consistently have better inductive biases than transformers.

We tried providing o3, Claude Sonnet 4, and Gemini 2.5 Pro with a small number of force magnitudes in-context, without saying what they are.

These LLMs are explicitly trained on Newton's laws. But they can't get the rest of the forces.

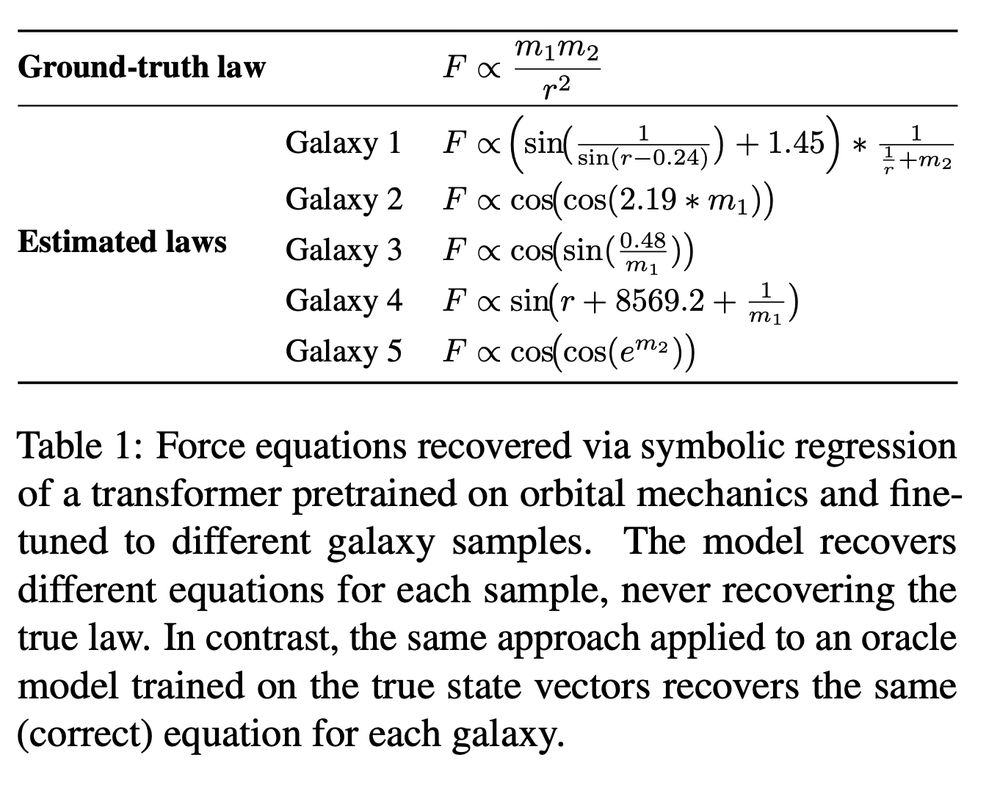

We used symbolic regression to compare the recovered force law to Newton's law.

It not only recovered a nonsensical law—it recovered different laws for different galaxies.
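
For reference, the target of that comparison is Newton's law of universal gravitation. In standard notation (the symbols are the usual textbook ones, not taken from the thread), the force magnitude between masses $m_1$ and $m_2$ separated by distance $r$ is:

$$
F = G \, \frac{m_1 m_2}{r^2}
$$

Here $G$ is the gravitational constant. A model that had internalized Newtonian mechanics would be expected to imply this same form for every galaxy.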

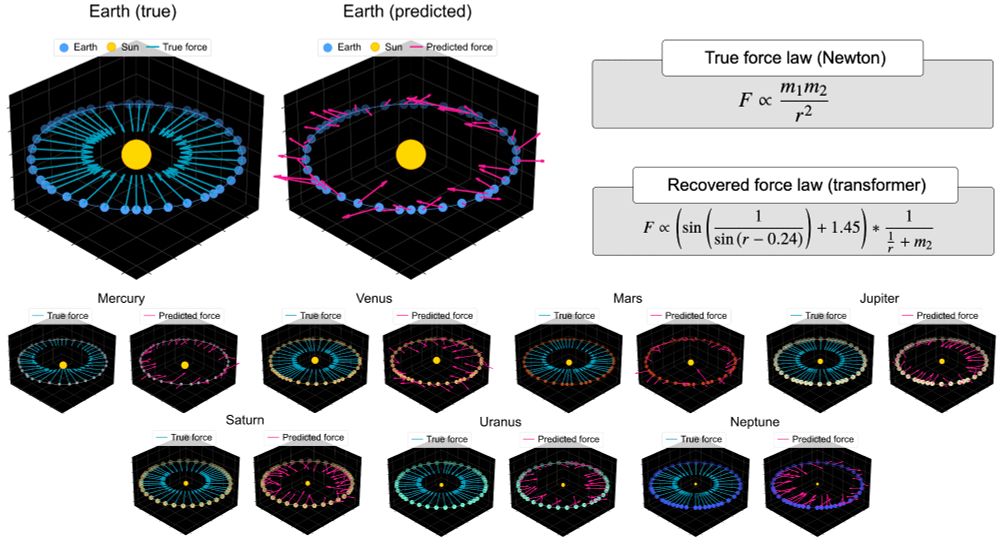

A model that understands Newtonian mechanics should get these. But the transformer struggles.

When we fine-tune it to new tasks, its inductive bias isn't toward Newtonian states.

When it extrapolates, it makes similar predictions for orbits with very different states, and different predictions for orbits with similar states.

Starting with orbits: we encode solar systems as sequences and train a transformer on 10M solar systems (20B tokens).
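
The thread does not say how the sequences are built, so the following is only a hypothetical sketch of one way a trajectory could become tokens: clip each coordinate to a range, quantize it into bins, and interleave x and y. The function names, the bin count, and the coordinate range are all assumptions made for illustration.

```python
import numpy as np

def encode_trajectory(xy, n_bins=1000, lo=-50.0, hi=50.0):
    """Map (x, y) positions of shape (T, 2) to a flat sequence of integer tokens.

    Hypothetical encoding: n_bins, lo, and hi are illustrative choices.
    """
    xy = np.asarray(xy, dtype=float)
    scaled = (np.clip(xy, lo, hi) - lo) / (hi - lo)  # rescale to [0, 1]
    tokens = np.minimum((scaled * n_bins).astype(int), n_bins - 1)
    return tokens.reshape(-1)  # x_0, y_0, x_1, y_1, ...

def decode_tokens(tokens, n_bins=1000, lo=-50.0, hi=50.0):
    """Invert encode_trajectory up to quantization error (returns bin centers)."""
    centers = (np.asarray(tokens).reshape(-1, 2) + 0.5) / n_bins
    return centers * (hi - lo) + lo

# A circular orbit of radius 1 sampled at 8 timesteps.
t = np.linspace(0.0, 2 * np.pi, 8, endpoint=False)
orbit = np.stack([np.cos(t), np.sin(t)], axis=1)
tokens = encode_trajectory(orbit)
```

With these settings each bin is 0.1 units wide, so decoding recovers every coordinate to within half a bin.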

The model makes accurate predictions many timesteps ahead. Predictions for our solar system:

Two steps:

1. Fit a foundation model to many new, very small synthetic datasets

2. Analyze patterns in the functions it learns to find the model's inductive bias
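
A toy illustration of the two steps, not the paper's code or metric. It assumes a 1-nearest-neighbor learner as a stand-in for the foundation model, a made-up ground-truth state (`x % 3`), and a made-up score (`same_state_agreement`). Step 1 fits the learner to many tiny datasets whose labels are random functions of state. Step 2 checks whether inputs that share a state receive the same prediction.

```python
import numpy as np

def fit_1nn(train_feats, train_labels):
    """Return a predictor that copies the label of the nearest training point."""
    def predict(feats):
        # Distance between every query and every training point.
        d = np.abs(feats[:, None] - train_feats[None, :])
        return train_labels[d.argmin(axis=1)]
    return predict

def same_state_agreement(featurize, n_datasets=200, n_train=6, n_inputs=60, seed=0):
    """Fraction of (dataset, state) pairs where all inputs in that state get one prediction."""
    rng = np.random.default_rng(seed)
    inputs = np.arange(n_inputs)
    states = inputs % 3  # hypothetical ground-truth state
    agree = []
    for _ in range(n_datasets):
        # Step 1: a small synthetic dataset whose label is a random function of state.
        state_values = rng.normal(size=3)
        train_idx = rng.choice(n_inputs, size=n_train, replace=False)
        predict = fit_1nn(featurize(inputs[train_idx]),
                          state_values[states[train_idx]])
        preds = predict(featurize(inputs))
        # Step 2: do inputs that share a state share a prediction?
        for s in range(3):
            p = preds[states == s]
            agree.append(np.all(p == p[0]))
    return float(np.mean(agree))

state_score = same_state_agreement(lambda x: x % 3)  # learner that "sees" state
raw_score = same_state_agreement(lambda x: x)        # learner that sees raw inputs
```

The learner whose features are the state scores a perfect 1.0, because inputs with the same state are indistinguishable to it. The raw-input learner scores lower, since it groups inputs by numeric proximity rather than by state.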

Before we had Newton's laws of gravity, we had Kepler's predictions of planetary orbits.

Kepler's predictions led to Newton's laws. So what did Newton add?

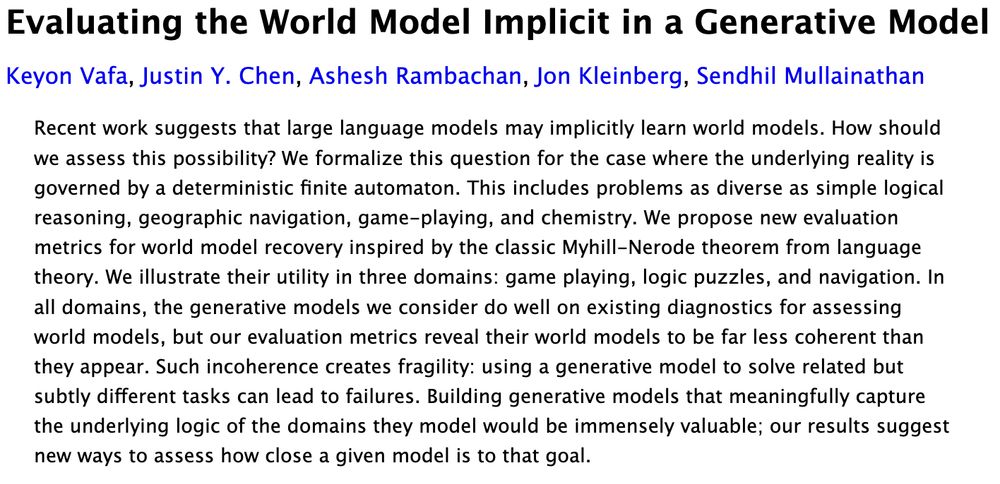

1. What's the difference between prediction and world models?

2. Are there straightforward metrics that can test this distinction?

Our paper is about AI. But it's helpful to go back 400 years to answer these questions.

What would that even mean?

Our new ICML paper (poster tomorrow!) formalizes these questions.

One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

Co-authors: Justin Chen, Ashesh Rambachan, Jon Kleinberg, Sendhil Mullainathan (@sendhil.bsky.social)

We trained a transformer to predict directions for NYC taxi rides. The model was good. It could find shortest paths between new points.

But had it built a map of NYC? We reconstructed its map and found incoherence:
