Zhidian Zhang, @milot.bsky.social, @martinsteinegger.bsky.social, and @sokrypton.org

biorxiv.org/content/10.1...

TLDR: We introduce MSA Pairformer, a 111M-parameter protein language model that challenges the scaling paradigm in self-supervised protein language modeling 🧵

📄 www.nature.com/articles/s41...

💿 mmseqs.com

- SIMD forward/backward (FW/BW) alignment (preprint soon!)

- Substitution matrix λ calculator by Eric Dawson

- Faster ARM Smith-Waterman (SW) by Alexander Nesterovskiy

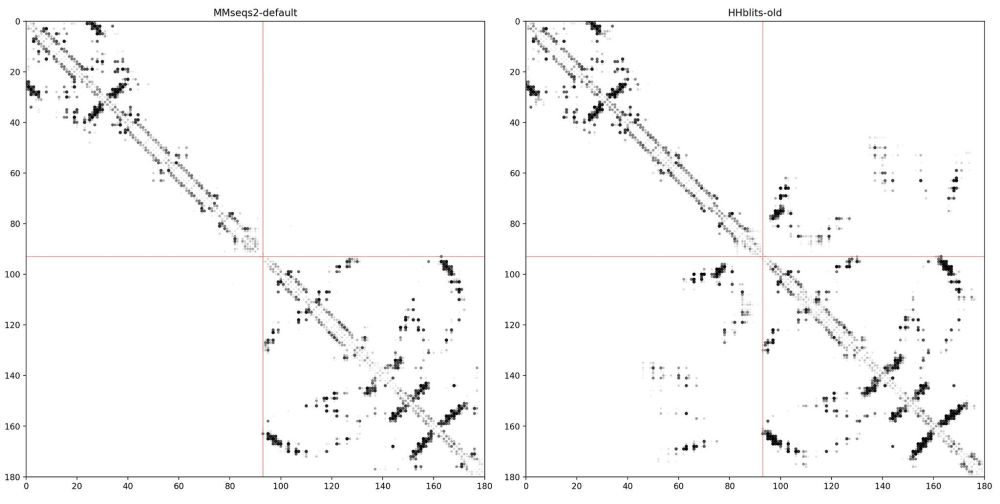

- MSA-Pairformer’s proximity-based pairing for multimer prediction (www.biorxiv.org/content/10.1...; available in the ColabFold API)

💾 github.com/soedinglab/M... & 🐍
