github.com/yoakiyama/MS...

colab.research.google.com/github/yoaki...

Please reach out with any comments, questions or concerns! We really appreciate all of the feedback from the community and are excited to see how y'all will use MSA Pairformer :)

And a huge shoutout to the entire solab! I'm so grateful to work with these brilliant and supportive scientists every day. Keep an eye out for exciting work coming out from the team!

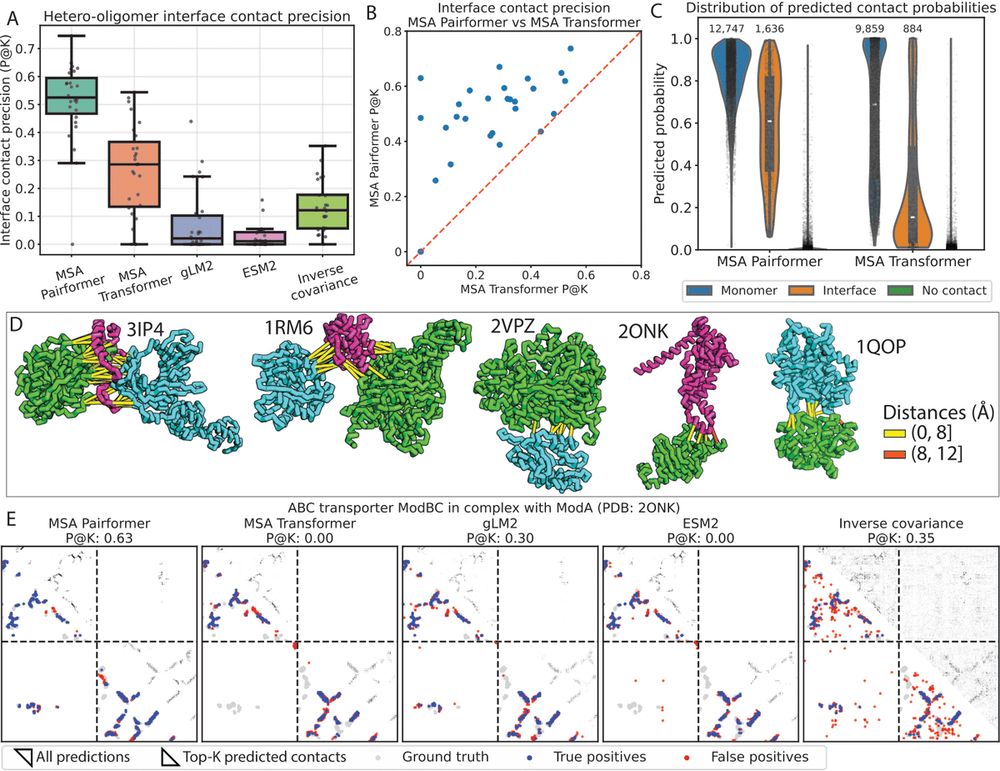

We're excited for all of MSA Pairformer's potential applications for biological discovery and for the future of memory- and parameter-efficient pLMs.

P.S. This figure differs slightly from the one in the preprint and will be updated in v2 of the paper!

1) scale down protein language modeling?

2) expand its scope?
