I run CV-inside, a small, mobile, independent research lab: https://beton-ochoa.github.io

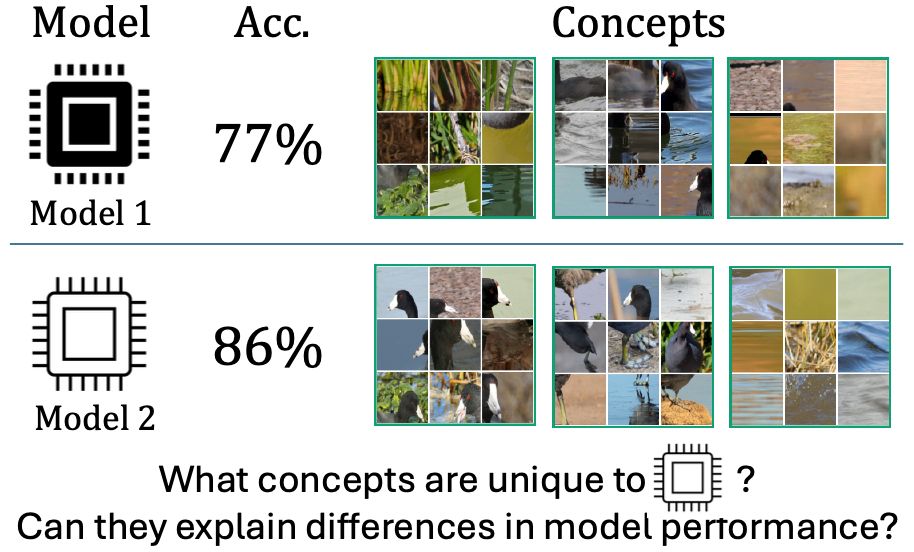

We all know ViT-Large performs better than ResNet-50, but which visual concepts drive this difference? Our new ICLR 2025 paper addresses this question! nkondapa.github.io/rsvc-page/

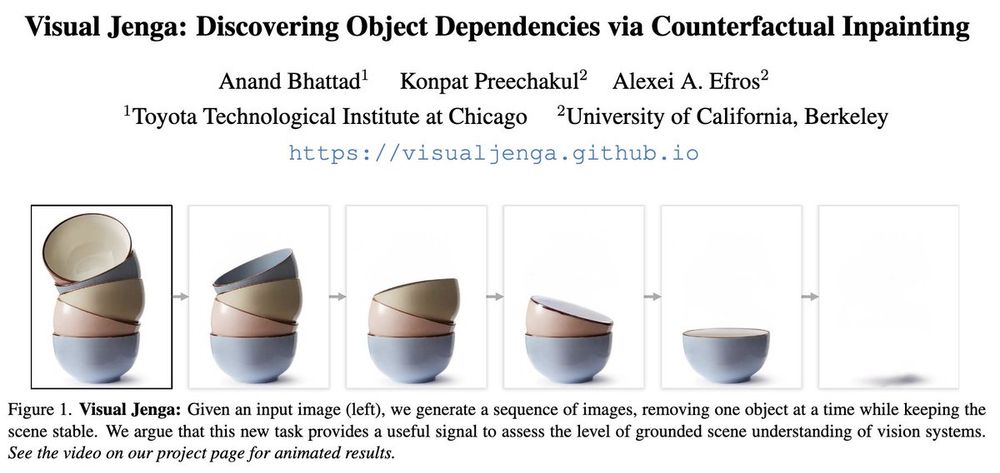

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

Xiaohao Xu, @tianyizhang.bsky.social, Shibo Zhao, Xiang Li, Sibo Wang, Yongqi Chen, Ye Li, Bhiksha Raj, Matthew Johnson-Roberson, Sebastian Scherer, Xiaonan Huang

arxiv.org/abs/2501.14319

Zero-Shot Monocular Scene Flow Estimation in the Wild

https://arxiv.org/abs/2501.10357

nature.com/articles/s41...

Course: www.eecs.yorku.ca/~kosta/Cours...

#fyp #robotics #robots #foryou #airobots

Robotic-assisted spine surgery is a minimally invasive surgical technique that uses a robotic arm to assist the surgeon in performing the delicate tasks involved in spinal fusion surgery.

www.online-sciences.com/robotics/use...

Hanwen Jiang, Zexiang Xu, @desaixie.bsky.social, Ziwen Chen, @haian-jin.bsky.social, Fujun Luan, Zhixin Shu, Kai Zhang, Sai Bi, Xin Sun, Jiuxiang Gu, Qixing Huang, Georgios Pavlakos, Hao Tan

arxiv.org/abs/2412.14166

Special Issue on Innovations in Artificial Intelligence for Medicine and Healthcare

Title: Innovations in Artificial Intelligence for Medicine and Healthcare

Journal: Health Information Science and Systems (Springer)

Submit your research!

As easy to use as DUSt3R/MASt3R, it recovers accurate, globally consistent poses and a dense map from an uncalibrated RGB video.

With @ericdexheimer.bsky.social* @ajdavison.bsky.social (*Equal Contribution)

Submissions of artworks that use or are about computer vision are open until 9 March 2025.

More details: bit.ly/CVPRAIArt25

#CVPR2025 #creativeAI #AIart

Organising #MICCAI2024 came with challenges but also great rewards!

We'd love to hear from you on what we did well and what we could have done even better; this will inform next year's chairs.

jamanetwork.com/journals/jam...

Accurate, fast, & robust structure + camera estimation from casual monocular videos of dynamic scenes!

MegaSaM outputs camera parameters and consistent video depth, scaling to long videos with unconstrained camera paths and complex scene dynamics!

www.nature.com/articles/s42...