A couple of years into your PhD, but wondering: "Am I doing this right?"

Most advice is aimed at graduating students, but there's far less for junior folks who are still finding their academic path.

My candid takes: anandbhattad.github.io/blogs/jr_gra...

@anandbhattad.bsky.social @uthsav.bsky.social @gligoric.bsky.social @murat-kocaoglu.bsky.social @tiziano.bsky.social

ai.jhu.edu/news/data-sc...

We're excited to announce "How to Stand Out in the Crowd?" at #CVPR2025 Nashville - our 4th community-building workshop featuring this incredible speaker lineup!

🔗 sites.google.com/view/standou...

Jiahao Li, Haochen Wang, @zubair-irshad.bsky.social, @ivasl.bsky.social, Matthew R. Walter, Vitor Campagnolo Guizilini, Greg Shakhnarovich

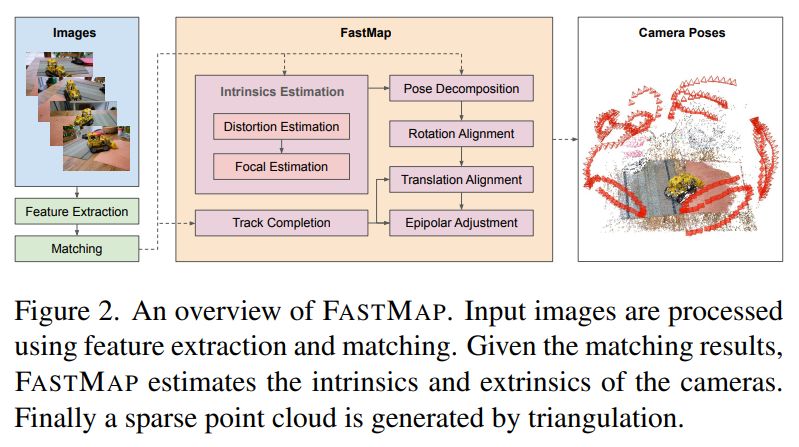

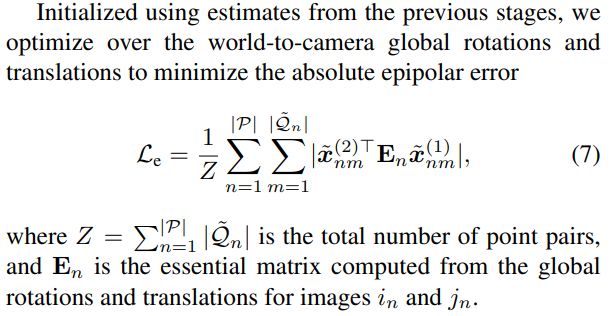

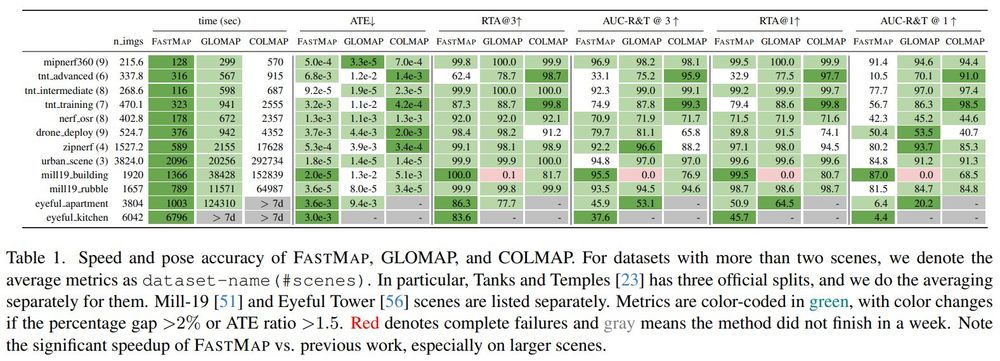

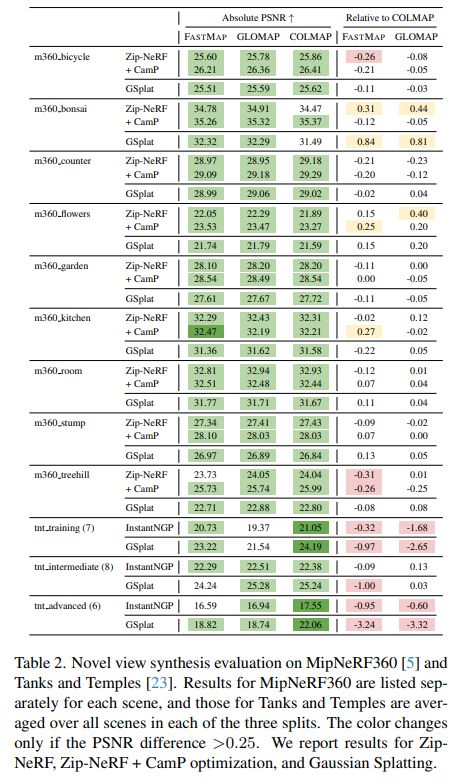

tl;dr: replace BA with epipolar error+IRLS; fully PyTorch implementation

arxiv.org/abs/2505.04612

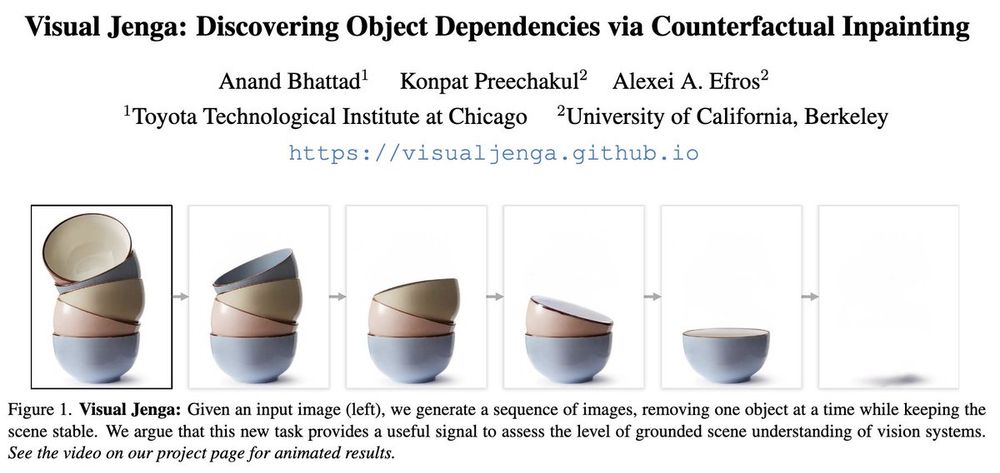

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

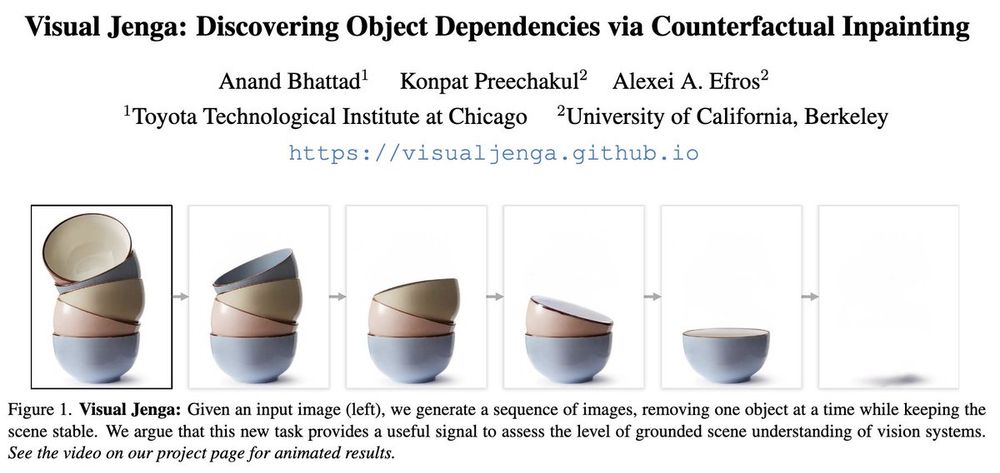

Xiaoyan, who’s been working with me on relighting, sent this over. It’s one of the hardest examples we’ve consistently used to stress-test LumiNet: luminet-relight.github.io

It’s a super cool project led by the amazing Chih-Hao!

@chih-hao.bsky.social is a rising star in 3DV! Follow him!

Learn more here👇

We introduce UrbanIR, a neural rendering framework for 💡relighting, 🌃nighttime simulation, and 🚘 object insertion—all from a single video of urban scenes!

Check out our #3DV2025 UrbanIR paper, led by @chih-hao.bsky.social, which does exactly this.

🔗: urbaninverserendering.github.io

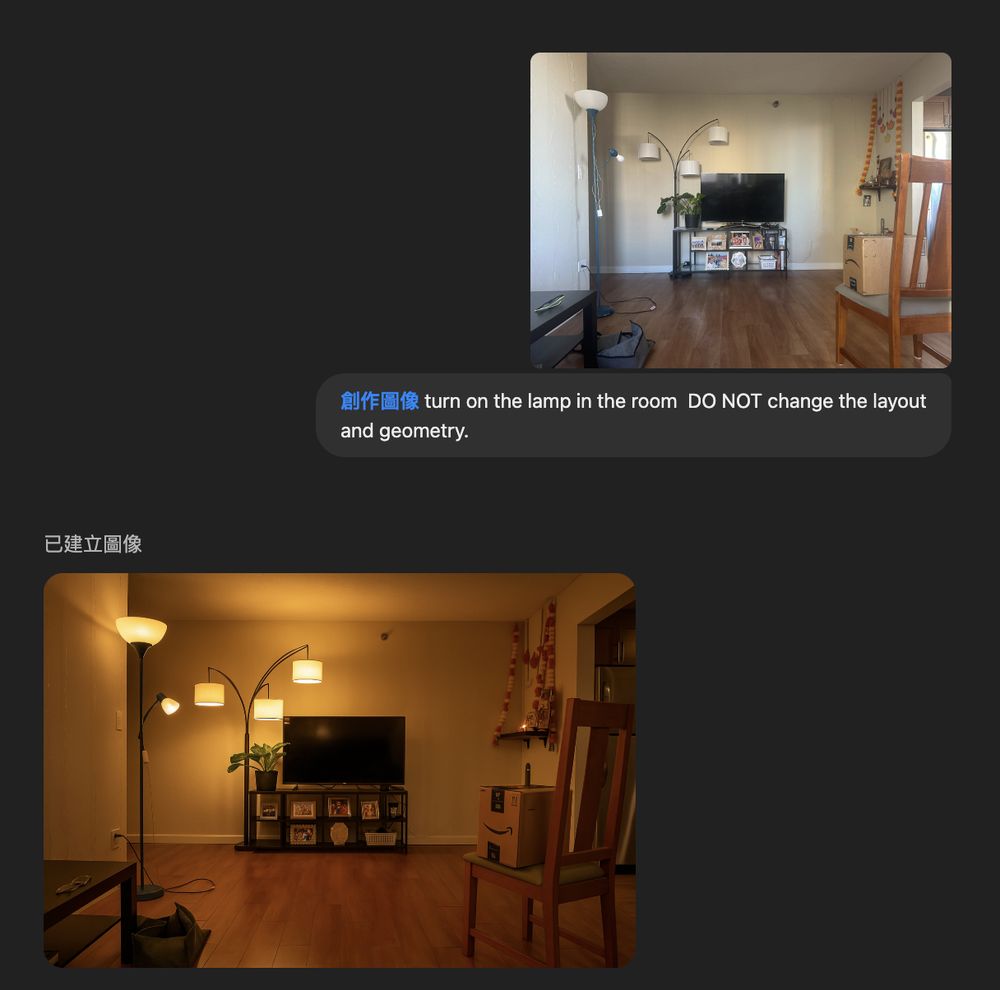

As this will get pretty long, this will be two threads.

The first will go into the RL part, and the second on the emergence and distillation.

Train a diffusion model as a renderer that takes intrinsic images as input. Once trained, we can perform zero-shot object compositing & can easily extend this to other object editing tasks, such as material swapping.

arxiv.org/abs/2410.08168

Excited about this work—fully data-driven, with zero reliance on computer graphics datasets.

It builds upon our previous works—StyLitGAN provided the training data, while Latent Intrinsics abstracted lighting and albedo for diffusion models.

And more to come! 🤓

💡LumiNet combines latent intrinsic representations with a powerful generative image model. It lets us relight complex indoor images by transferring lighting from one image to another. It is completely data-driven: no labels, mark-up, not even depth or normals.

We've invited incredible researchers who are leading fantastic work in various related fields.

4dvisionworkshop.github.io

psyarxiv.com/pq8nb

Also chasing down a few reviewers to update their justification beyond 'my concerns were not addressed in the rebuttal, so I’ll maintain my original score.' 😅 #CVPR2025

I wish this could be integrated with paper submission services to flag missing related works.
