Poster 153 — see you there!

Our method generates large sets of images using significantly less compute than standard diffusion.

📎https://ddecatur.github.io/hierarchical-diffusion/

1/

colab.research.google.com/drive/16GJyb...

Comments welcome!

DM if you want to meet!

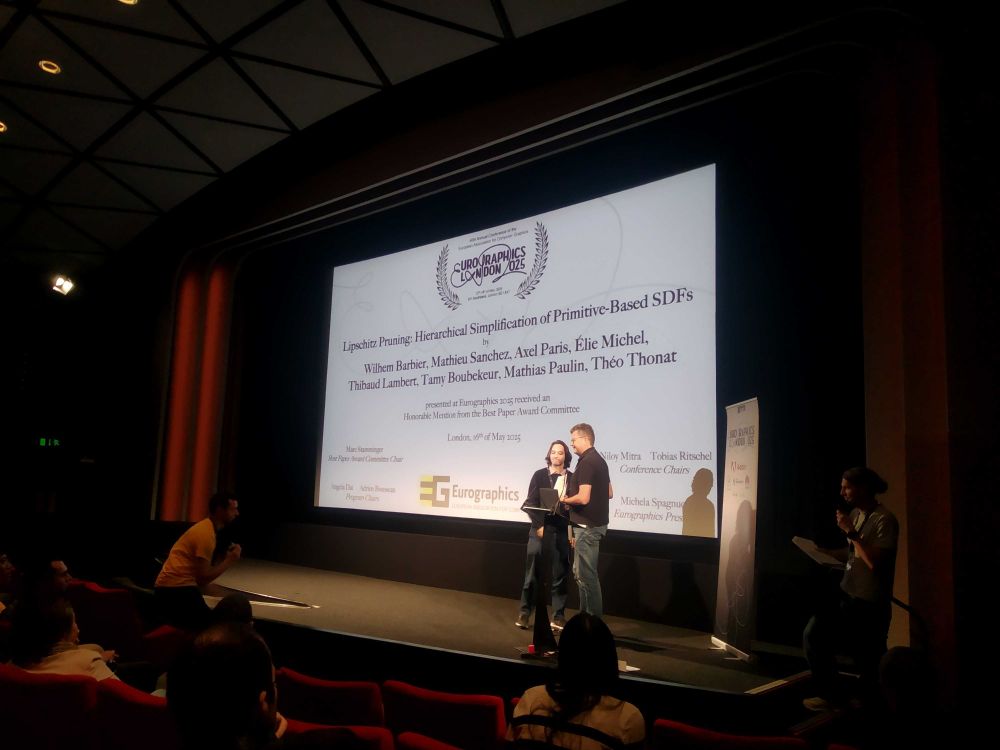

👉 The paper's page: wbrbr.org/publications...

Congrats to @wbrbr.bsky.social, M. Sanchez, @axelparis.bsky.social, T. Lambert, @tamyboubekeur.bsky.social, M. Paulin and T. Thonat!

Make your life easier: read this thread.

Probably my first time visiting Brazil for professional reasons :-)

Huge congrats to everyone involved and the community 🎉

“(…) you should include specific feedback on ways the authors can improve their papers.”

An online 3D reasoning framework for many 3D tasks directly from just RGB. For static or dynamic scenes. Video or image collections, all in one!

Project Page: cut3r.github.io

Code and Model: github.com/CUT3R/CUT3R

arxiv.org/abs/2502.03714

(1/9)

psyarxiv.com/pq8nb

A mesh-based 3D representation for training radiance fields from collections of images.

radfoam.github.io

arxiv.org/abs/2502.01157

Project co-led by my PhD students Shrisudhan Govindarajan and Daniel Rebain, with co-advisor Kwang Moo Yi

Is there any way in OpenReview to "mention" a reviewer in a discussion? I think reviewers receive an e-mail with any discussion message addressed to them, but they have no way of knowing whether "Reviewer HjKl" is them or someone else...

I can't help but think though: if everyone is trying to stand out you will stand out by not trying to :-)

(I am obviously kidding; the workshop is about more than that. Visit the webpage to learn more.)

We're excited to announce "How to Stand Out in the Crowd?" at #CVPR2025 in Nashville, our 4th community-building workshop, featuring this incredible speaker lineup!

🔗 sites.google.com/view/standou...

Apply!

vvvvv

Zehan Wang, Ziang Zhang, Tianyu P

tl;dr: train a ViT on Objaverse (800K -> 55K objects after filtering) to predict canonical orientation -> PROFIT

arxiv.org/abs/2412.18605

What are the equivalently useful numbers, worth memorizing for estimating economic / political things?

Some of mine:

1/2

☑️With MAtCha, we leverage a pretrained depth model to recover sharp meshes from sparse views, including both foreground and background, within minutes!🧵

🌐Webpage: anttwo.github.io/matcha/
