Vaccines save lives.

We've relaunched medarc.ai, our open science research community. Join us if you want to help advance open medical AI.

And we are hiring.

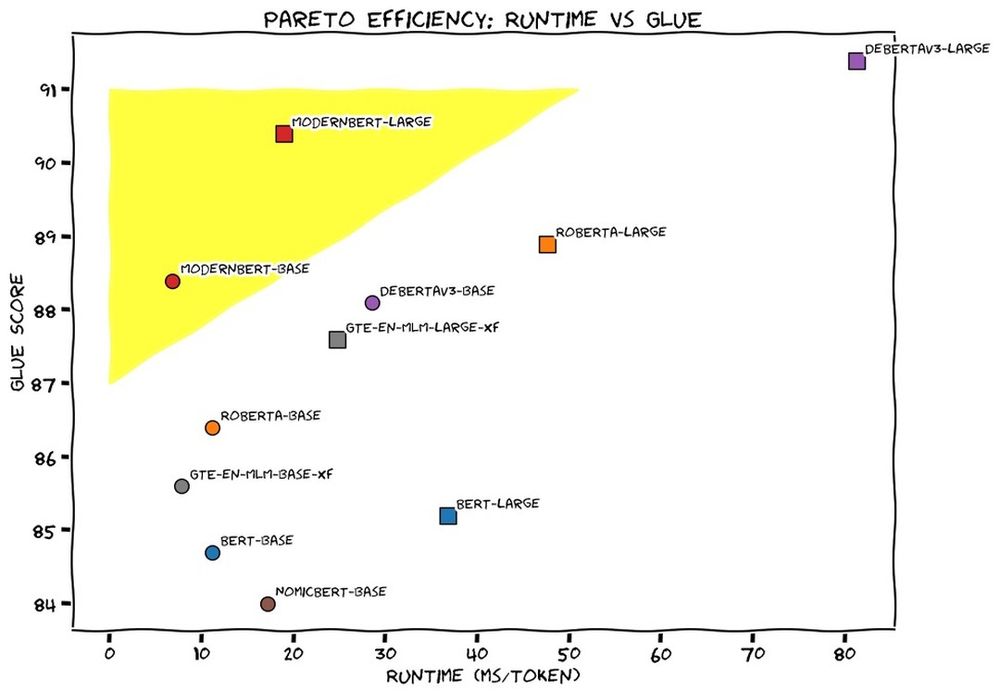

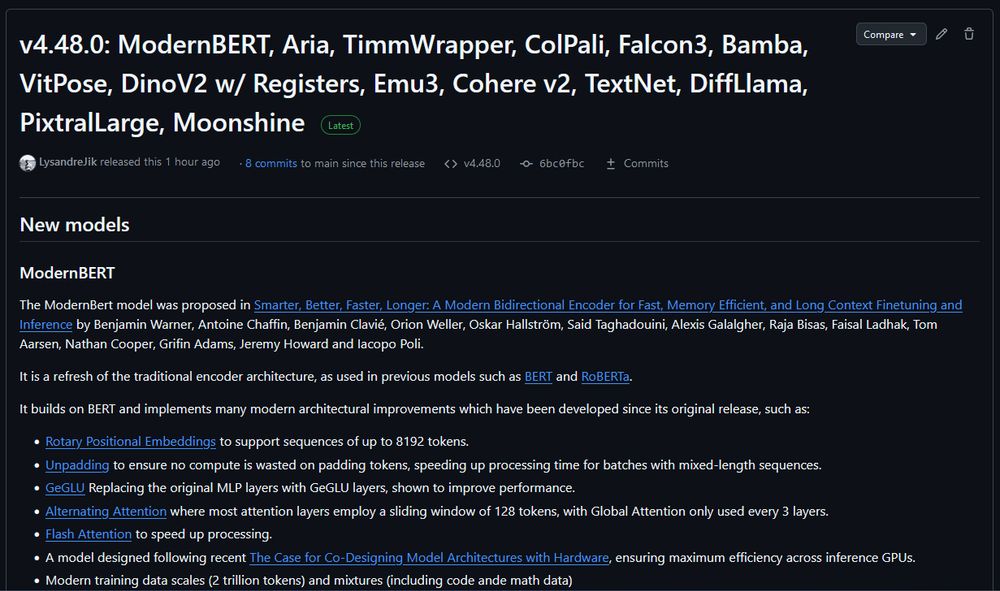

One of the most requested models I've seen, @jhuclsp.bsky.social has trained state-of-the-art massively multilingual encoders using the ModernBERT architecture: mmBERT.

Stronger than any existing models at their sizes, while also much faster!

Details in 🧵

Thinking Mini still makes for a good faster search if you don’t need the extra reasoning ability.

Remember the glue-on-pizza Reddit post that the subpar Google AI cited uncritically? Bing's integration of GPT 3.5 at the time recognized the Reddit post as sarcasm.

He walks through how he learned to implement Flash Attention for the 5090 in CUDA C++. The main objective is to learn writing attention in CUDA C++,

My guess is current and near-future LLMs are more likely to increase the demand for programmers, not decrease demand (Jevons Paradox).

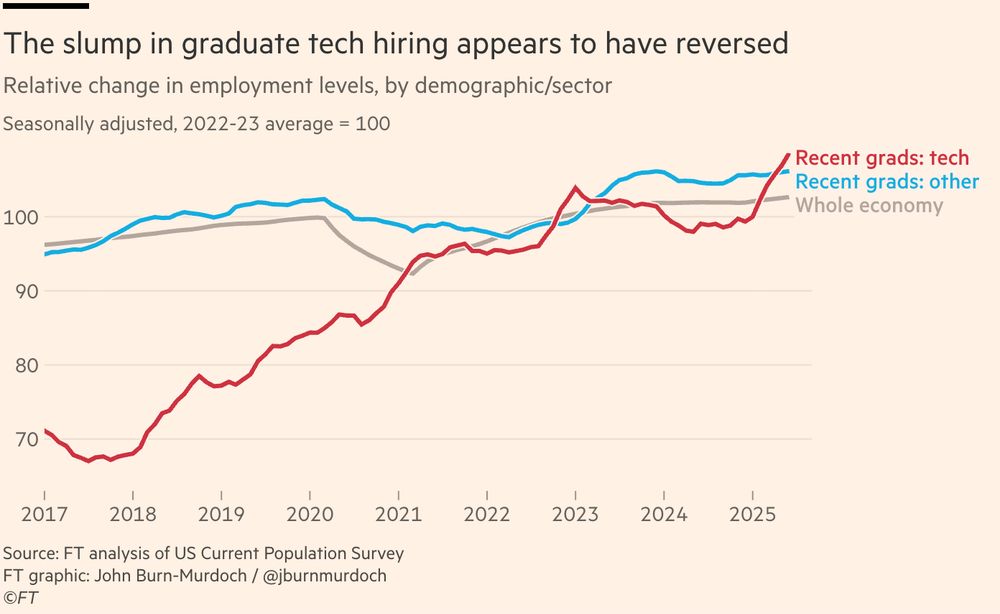

The much-discussed contraction in entry-level tech hiring appears to have *reversed* in recent months.

In fact, relative to the pre-generative AI era, recent grads have secured coding jobs at the same rate as they’ve found any job, if not slightly higher.

Spoilers: the answer is yes.

![Code screenshot: ModernBERT-Large-Instruct fill-mask example](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:psfkl7gi24rg5rhvv7z6mly3/bafkreid6ko23ckgz2mwpp7zj3p6n333vmg5c2r5bghon77g4el6322z6oa@jpeg)

```python
from transformers import pipeline

model_name = "answerdotai/ModernBERT-Large-Instruct"
fill_mask = pipeline("fill-mask", model=model_name, tokenizer=model_name)

text = """You will be given a question and options. Select the right answer.
QUESTION: If (G, .) is a group such that (ab)^-1 = a^-1b^-1, for all a, b in G, then G is a/an
CHOICES:
- A: commutative semi group
- B: abelian group
- C: non-abelian group
- D: None of these
ANSWER: [unused0] [MASK]"""

results = fill_mask(text)
answer = results[0]["token_str"].strip()

print(f"Predicted answer: {answer}")  # Answer: B
```

I somehow got into an argument last week with someone who was insisting that all models are industrial blackboxes... and I wish I'd had this on hand.

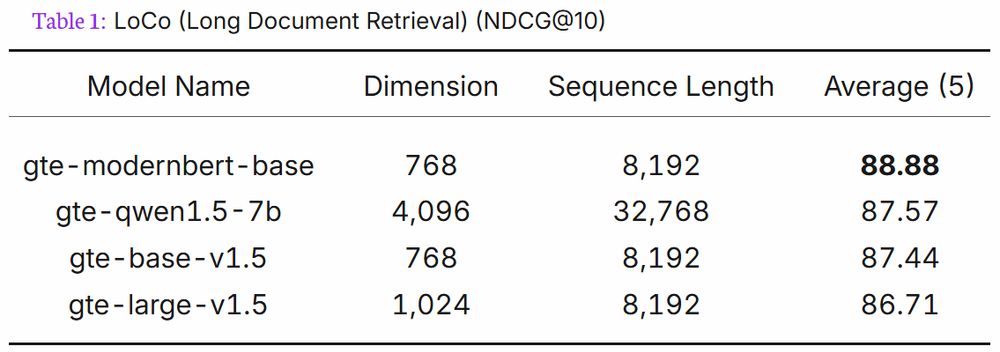

gte-modernbert-base beats gte-qwen1.5-7b on LoCo long context retrieval with 7B fewer parameters.

Details in 🧵

But the large variant of ModernBERT is also awesome...

So today, @lightonai.bsky.social is releasing ModernBERT-embed-large, the larger and more capable iteration of ModernBERT-embed!

If you are plugging ModernBERT into an existing encoder finetuning pipeline, try increasing the learning rate. We've found that ModernBERT tends to prefer a higher LR than older models.
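
One way to act on this tip is a small sweep upward from whatever learning rate your existing pipeline already uses. The sketch below is only an illustration: the `lr_sweep` helper, the `2e-5` baseline, and the multipliers are all assumptions, not values from the ModernBERT authors.

```python
def lr_sweep(baseline_lr: float, multipliers=(1, 2, 4, 8)) -> list[float]:
    """Return candidate learning rates at and above an existing baseline."""
    return [baseline_lr * m for m in multipliers]


# Hypothetical baseline: an LR that worked for an older BERT-style encoder.
candidates = lr_sweep(2e-5)

for lr in candidates:
    # Each value would be passed to your own fine-tuning run and compared
    # on a held-out validation metric.
    print(f"try learning_rate={lr:.1e}")
```

Keeping the original LR in the sweep (multiplier 1) gives a like-for-like baseline, so any gain from the higher values is easy to see.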

The bad: $2,000

The Ugly*: PCIe 5 without NVLink

It seems to me that AI will be most relevant in people's lives because the Honda Civic is ubiquitous, not so much because everyone is driving a Ferrari.

Details in 🧵
