Vaccines save lives.

GTE: huggingface.co/Alibaba-NLP/...

Nomic: huggingface.co/nomic-ai/mod...

Twitter: x.com/bclavie/stat...

Model: huggingface.co/answerdotai/...

Blog: www.answer.ai/posts/2025-0...

Paper: arxiv.org/abs/2502.03793

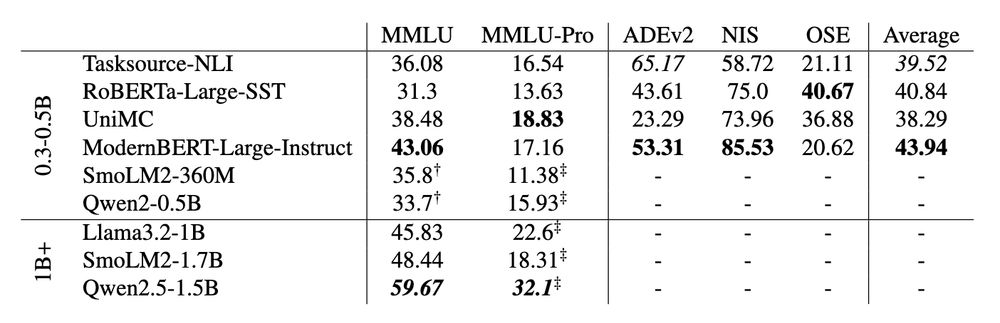

No. And it's not close.

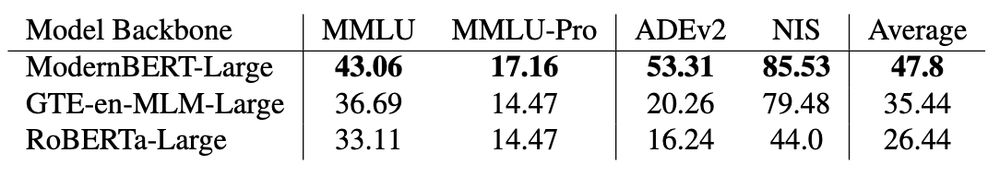

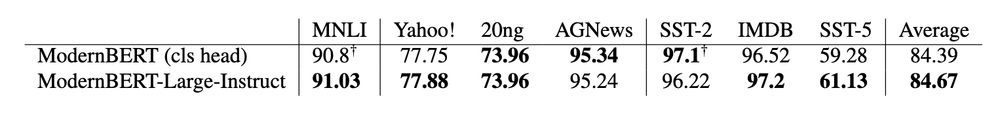

- Can an instruction-tuned ModernBERT perform tasks zero-shot using the MLM head?

- Could we then fine-tune instruction-tuned ModernBERT to complete any task?

Detailed answers: arxiv.org/abs/2502.03793
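
To make the first question concrete, here is a rough sketch of how answering through an MLM head might look: the task is written as a prompt ending in a mask token, and the answer is whichever allowed token scores highest at that position. The prompt layout, the `[MASK]` default, the function names, and the toy logits are all assumptions for illustration, not the format or results from the paper, and no real model is called here.

```python
from typing import Dict, List


def build_prompt(question: str, choices: List[str], mask_token: str = "[MASK]") -> str:
    """Format a multiple-choice question so the answer letter is the masked token.

    The layout is hypothetical; the paper may use a different template.
    """
    letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    lines = [f"Question: {question}"]
    lines += [f"{letters[i]}. {choice}" for i, choice in enumerate(choices)]
    lines.append(f"Answer: {mask_token}")
    return "\n".join(lines)


def pick_answer(mask_logits: Dict[str, float], candidates: List[str]) -> str:
    """Return the candidate token with the highest logit at the mask position.

    Tokens outside `candidates` are ignored, so the head only ranks allowed answers.
    """
    return max(candidates, key=lambda tok: mask_logits.get(tok, float("-inf")))


# Made-up logits standing in for a real MLM head output at the mask position.
toy_logits = {"A": 1.2, "B": 3.4, "C": -0.5, "the": 5.0}

prompt = build_prompt("Which planet is closest to the Sun?", ["Venus", "Mercury", "Mars"])
answer = pick_answer(toy_logits, ["A", "B", "C"])
print(prompt)
print("Picked:", answer)
```

Restricting the comparison to the candidate letters matters: in the toy logits, `the` has the highest raw score, but it is not a valid answer and so is never considered.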

- gte-modernbert-base: huggingface.co/Alibaba-NLP/...

- gte-reranker-modernbert-base: huggingface.co/Alibaba-NLP/...

Check out our announcement post for more details: huggingface.co/blog/modernb...
