Vaccines save lives.

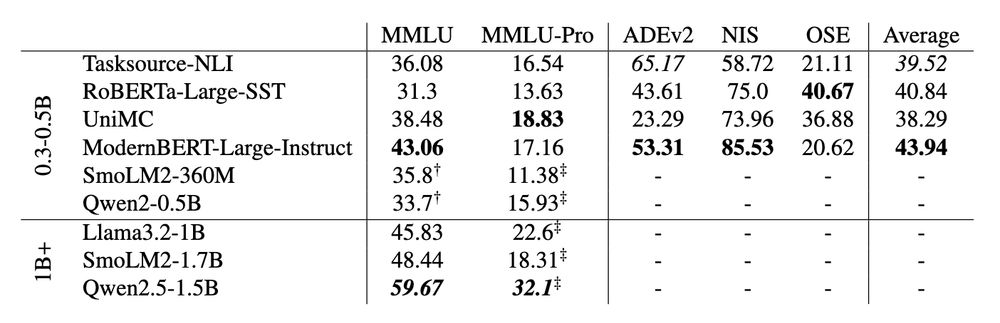

No. And it's not close.

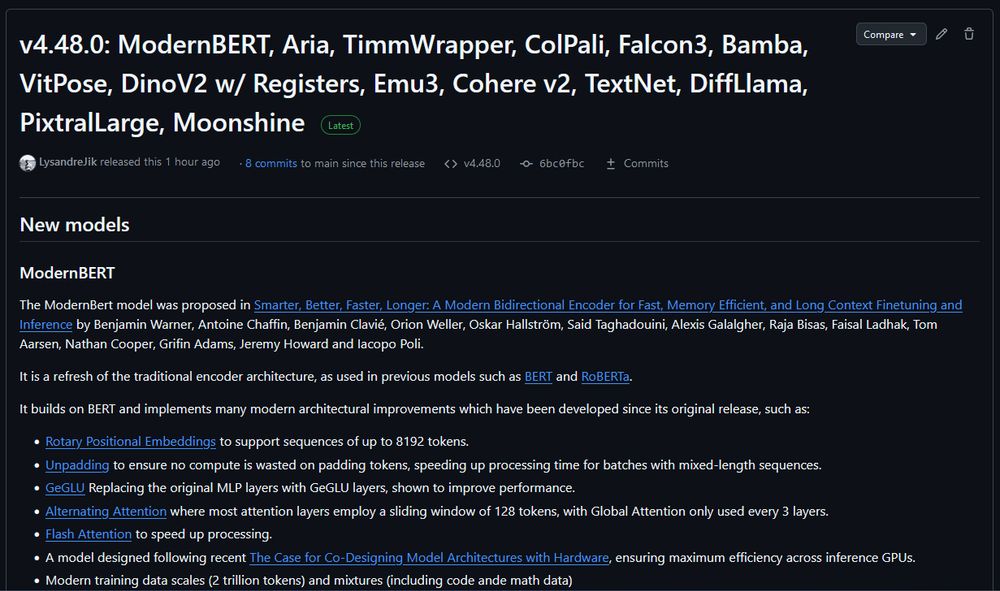

Spoilers: the answer is yes.

```python
from transformers import pipeline

model_name = "answerdotai/ModernBERT-Large-Instruct"
fill_mask = pipeline("fill-mask", model=model_name, tokenizer=model_name)

text = """You will be given a question and options. Select the right answer.
QUESTION: If (G, .) is a group such that (ab)^-1 = a^-1b^-1, for all a, b in G, then G is a/an
CHOICES:
- A: commutative semi group
- B: abelian group
- C: non-abelian group
- D: None of these
ANSWER: [unused0] [MASK]"""

results = fill_mask(text)
answer = results[0]["token_str"].strip()
print(f"Predicted answer: {answer}")  # Answer: B
```

![Screenshot of the code above](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:psfkl7gi24rg5rhvv7z6mly3/bafkreid6ko23ckgz2mwpp7zj3p6n333vmg5c2r5bghon77g4el6322z6oa@jpeg)

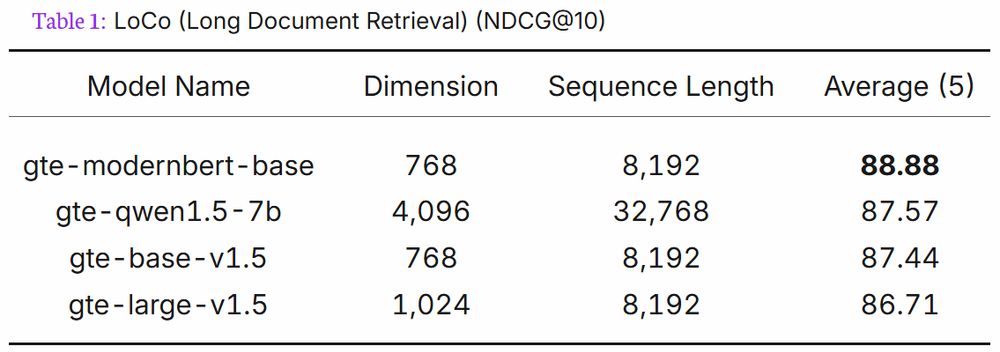

gte-modernbert-base beats gte-qwen1.5-7b on LoCo long-context retrieval with 7B fewer parameters.

If you are plugging ModernBERT into an existing encoder finetuning pipeline, try increasing the learning rate. We've found that ModernBERT tends to prefer a higher LR than older models.
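One way to act on this tip is to sweep a few learning rates above whatever your current recipe uses, rather than guessing a single value. The sketch below only generates the candidates; the helper name `candidate_learning_rates`, the baseline of `2e-5`, and the multipliers are all illustrative assumptions, not values recommended by the ModernBERT authors.

```python
def candidate_learning_rates(baseline_lr, factors=(1.0, 2.0, 3.0, 5.0)):
    """Return the baseline LR scaled by each factor, as candidates for a sweep.

    The factors are hypothetical defaults for illustration only.
    """
    if baseline_lr <= 0:
        raise ValueError("baseline_lr must be positive")
    return [baseline_lr * factor for factor in factors]


# Hypothetical baseline taken from an existing encoder fine-tuning recipe.
baseline = 2e-5

for lr in candidate_learning_rates(baseline):
    # In a real pipeline, launch one fine-tuning run per value here
    # and compare validation metrics across runs.
    print(f"try learning_rate={lr:.1e}")
```

Keeping the baseline in the sweep (factor `1.0`) gives a direct comparison against the old setting on the same data.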

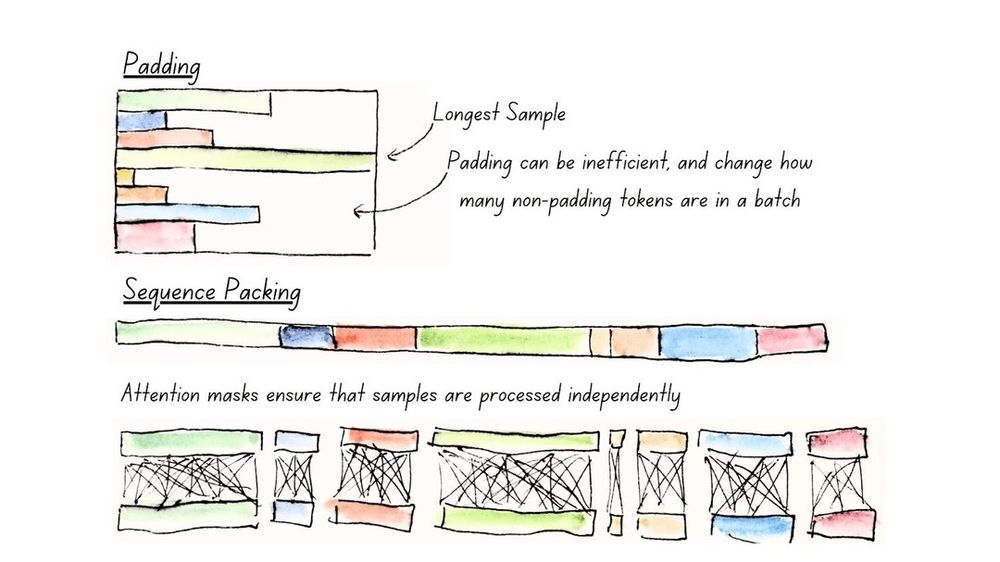

(Full model sequence packing illustrated below)
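For readers unfamiliar with the idea, here is a minimal pure-Python sketch of sequence packing in general: variable-length sequences are concatenated into one flat sequence with no padding, and cumulative offsets record where each one starts and ends. This illustrates the concept only and is not ModernBERT's actual implementation; the function names and the token IDs are made up for the example.

```python
from itertools import accumulate


def pack_sequences(sequences):
    """Concatenate variable-length token lists and record their boundaries."""
    lengths = [len(seq) for seq in sequences]
    packed = [token for seq in sequences for token in seq]
    # cu_seqlens[i] is the offset where sequence i starts;
    # the final entry is the total number of packed tokens.
    cu_seqlens = [0] + list(accumulate(lengths))
    return packed, cu_seqlens, max(lengths, default=0)


def unpack_sequences(packed, cu_seqlens):
    """Recover the original sequences from the packed form."""
    return [packed[start:end] for start, end in zip(cu_seqlens, cu_seqlens[1:])]


# Made-up token IDs for three sequences of different lengths.
batch = [[101, 7, 8, 102], [101, 9, 102], [101, 102]]
packed, cu_seqlens, max_len = pack_sequences(batch)

print(packed)      # 9 tokens, no padding
print(cu_seqlens)  # [0, 4, 7, 9]
```

A padded batch of the same three sequences would hold 12 slots (3 rows of length 4); the packed form holds only the 9 real tokens.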
