Machine Learning Engineer at 🤗 Hugging Face

More in the thread 🧵

Updating is only useful if you're training.

Details in 🧵

Alongside a new tool: ColGrep, so you can use it with your coding agents straight away, locally & cheaply

Models, dataset, and training code released 🧵

It's called voyage-4-nano, it's multilingual, and very efficient.

Details in 🧵

Both versions will be supported for the foreseeable future!

Details in 🧵

Until now, that is.

RexRerankers by Walmart Tech MLEs is a suite of 5 SOTA rerankers ranging from 17M to 0.6B parameters.

Details in 🧵:

We've collaborated with the fine folks at Unsloth to make your embedding model finetuning ~2x faster and require ~20% less VRAM!

The Unsloth team prepared 6 notebooks showing how you can take advantage of it!

🧵

The trick: Binary search with int8 rescoring.

I'll show you a demo & how it works in the 🧵:
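To make the idea concrete, here is a minimal sketch of how I read "binary search with int8 rescoring": retrieve candidates cheaply by Hamming distance over 1-bit-per-dimension codes, then rescore only those candidates against int8-quantized document vectors. Everything in it is an assumption for illustration (the helper names, the `oversample` factor, the fixed `[-1, 1]` int8 scaling, the random toy data); it is not the code from the thread or the demo.

```python
import math
import random


def normalize(vec):
    """Scale a vector to unit length so every value lies in [-1, 1]."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def quantize_binary(vec):
    """Pack the sign of each dimension into one Python int (1 bit per dimension)."""
    bits = 0
    for x in vec:
        bits = (bits << 1) | (1 if x > 0 else 0)
    return bits


def quantize_int8(vec, scale=127.0):
    """Map floats assumed to lie in [-1, 1] to integers in [-127, 127]."""
    return [max(-127, min(127, round(x * scale))) for x in vec]


def hamming(a, b):
    """Number of differing bits between two binary codes."""
    return bin(a ^ b).count("1")


def search(query, binary_index, int8_index, top_k=3, oversample=4):
    # Stage 1: cheap candidate retrieval by Hamming distance on the binary codes.
    q_bits = quantize_binary(query)
    by_distance = sorted(
        range(len(binary_index)),
        key=lambda i: hamming(q_bits, binary_index[i]),
    )
    candidates = by_distance[: top_k * oversample]

    # Stage 2: rescore only the candidates, float query x int8 document dot product.
    scored = [
        (sum(q * d for q, d in zip(query, int8_index[i])), i) for i in candidates
    ]
    scored.sort(reverse=True)
    return [i for _, i in scored[:top_k]]


# Toy data: 50 random unit vectors standing in for document embeddings.
random.seed(0)
dim, n_docs = 64, 50
docs = [normalize([random.gauss(0, 1) for _ in range(dim)]) for _ in range(n_docs)]
binary_index = [quantize_binary(d) for d in docs]
int8_index = [quantize_int8(d) for d in docs]

query = docs[7]  # querying with a stored vector should rank that document first
print(search(query, binary_index, int8_index))
```

In this sketch the binary codes are the only thing scanned in full, and the larger int8 vectors are touched for just `top_k * oversample` candidates, which is where the savings would come from.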

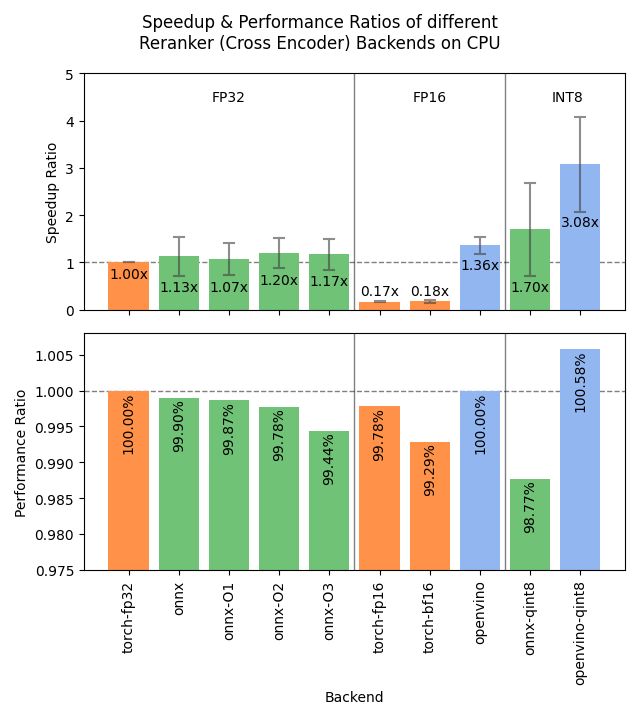

It introduces multi-processing for CrossEncoder (rerankers), multilingual NanoBEIR evaluators, similarity score outputs in mine_hard_negatives, Transformers v5 support and more.

Details in 🧵

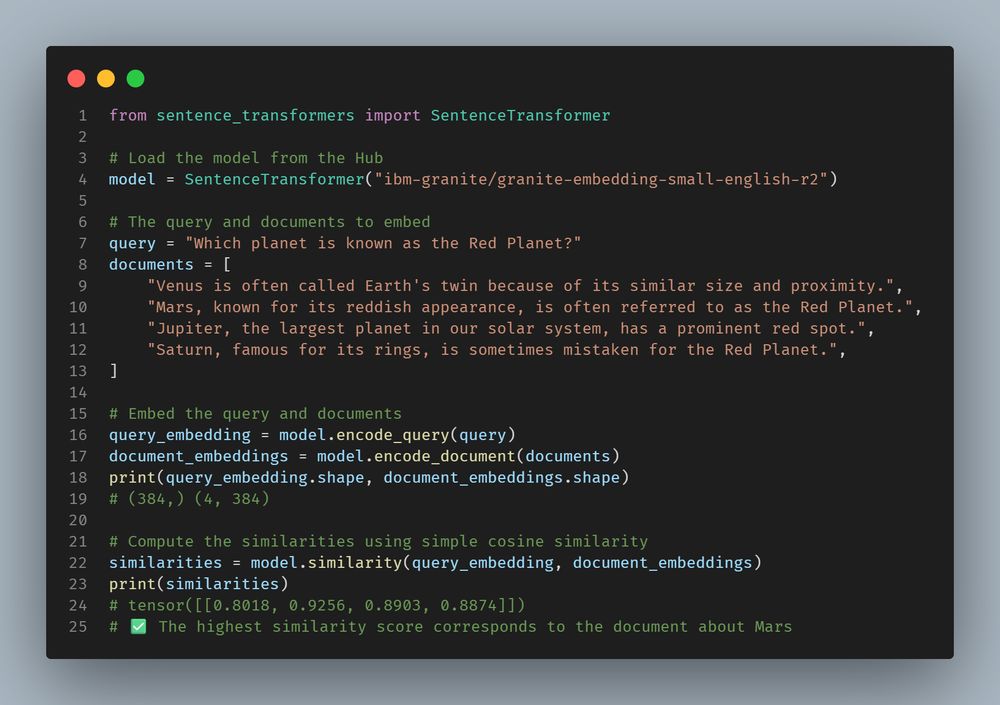

Originally developed at the UKP Lab at @tuda.bsky.social, Sentence Transformers has become one of the world’s most widely used open-source libraries for semantic embeddings in natural language processing.

(1/🧵)

This formalizes the existing maintenance structure, as I've personally led the project for the past two years on behalf of Hugging Face. I'm super excited about the transfer!

Details in 🧵

Their blogpost covers all changes, including easier evaluation, multimodal support, rerankers, new interfaces, documentation, dataset statistics, a migration guide, etc.

🧵

It's a new multilingual text embedding retrieval benchmark with private (!) datasets, to ensure that we measure true generalization and avoid (accidental) overfitting.

Details in our blogpost below 🧵

It's a small patch release that makes the project report incorrect arguments more explicitly and introduces some fixes for multi-GPU processing, evaluators, and hard negatives mining.

Details in 🧵

One of the most requested models I've seen, @jhuclsp.bsky.social has trained state-of-the-art massively multilingual encoders using the ModernBERT architecture: mmBERT.

Stronger than any existing models at their sizes, while also much faster!

Details in 🧵

Details in 🧵:

See 🧵 for the deets:

See more in huggingface.co/openai

🧵

Details in 🧵:

Details in 🧵

The new patch introduces compatibility with the latest transformers and sentence-transformers versions.
