Machine Learning Engineer at 🤗 Hugging Face

🧵

This formalizes the existing maintenance structure, as I've personally led the project for the past two years on behalf of Hugging Face. I'm super excited about the transfer!

Details in 🧵

Their blogpost covers all changes, including easier evaluation, multimodal support, rerankers, new interfaces, documentation, dataset statistics, a migration guide, etc.

🧵

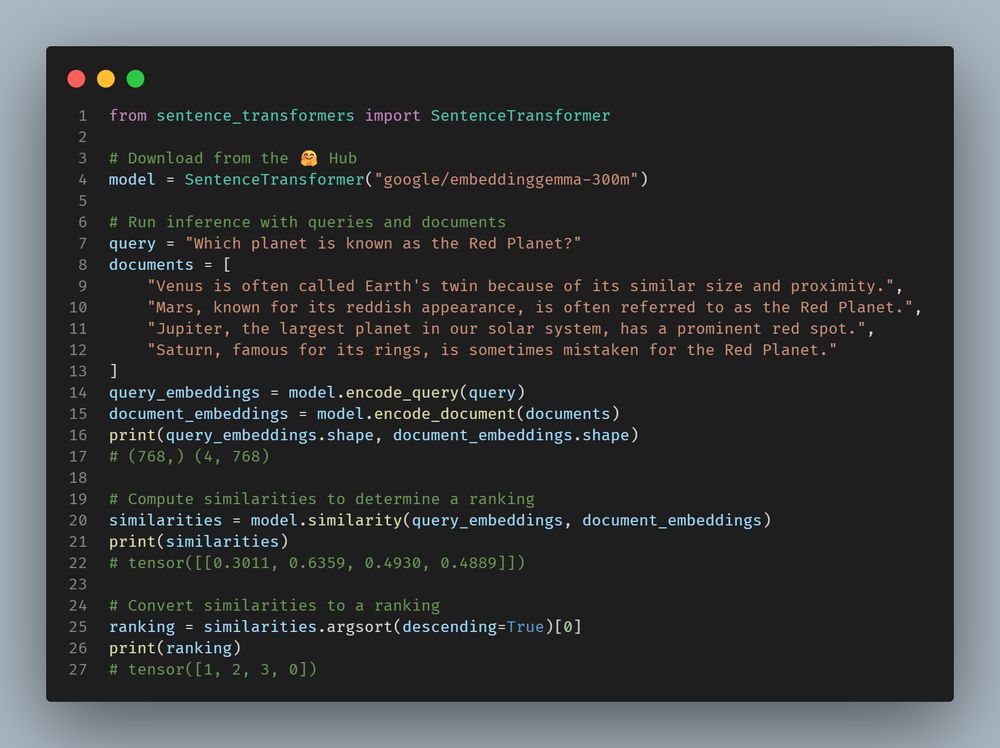

There's also an English-only version available.

🧵

This would indicate whether the model generalizes well.

🧵

It's a new multilingual text embedding retrieval benchmark with private (!) datasets, to ensure that we measure true generalization and avoid (accidental) overfitting.

Details in our blogpost below 🧵
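Retrieval benchmarks in the MTEB family typically report nDCG@10 as their main score. The snippet below is a minimal, independent sketch of that metric for a single query, assuming graded relevance labels stored in a plain dict (a hypothetical format). It is not the benchmark's own evaluation code.

```python
import math


def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the top-k retrieved items."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_doc_ids, qrels, k=10):
    """nDCG@k for one query.

    ranked_doc_ids: document ids in the order the model retrieved them.
    qrels: dict mapping relevant document id -> graded relevance (hypothetical format).
    """
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_doc_ids]
    ideal_dcg = dcg_at_k(sorted(qrels.values(), reverse=True), k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Toy example: the only relevant document ("d1") is retrieved at rank 2.
score = ndcg_at_k(["d3", "d1", "d7"], {"d1": 1})
print(round(score, 4))  # 1 / log2(3)
```

With private datasets, the point is that this score is computed on queries and documents the model's developers never had access to.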

- Add support for Knowledgeable Passage Retriever (KPR) models

- Multi-GPU processing with `model.encode()` now works with `convert_to_tensor`

🧵

- a new `model.get_model_kwargs()` method for checking which custom model-specific keyword arguments are supported for this model

🧵

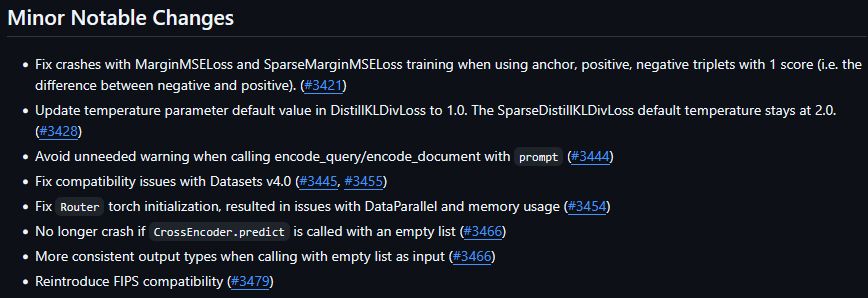

It's a small patch release that makes the project more explicit about incorrect arguments and fixes issues in multi-GPU processing, evaluators, and hard negatives mining.

Details in 🧵
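For context, hard negatives mining selects passages for each query that the embedding model ranks highly but that are not the labeled positive. Sentence Transformers ships its own utility for this (`sentence_transformers.util.mine_hard_negatives`). The snippet below is an independent, simplified sketch on made-up, pre-computed 2D embeddings, not that function's implementation.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mine_hard_negatives_sketch(query_emb, corpus_embs, positive_idx, num_negatives=2):
    """Return indices of the most query-similar corpus entries, excluding the positive."""
    scored = [
        (cosine(query_emb, emb), idx)
        for idx, emb in enumerate(corpus_embs)
        if idx != positive_idx
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [idx for _, idx in scored[:num_negatives]]


# Toy embeddings (made up for illustration); index 0 is the labeled positive.
query = [1.0, 0.0]
corpus = [[0.9, 0.1], [0.8, 0.6], [0.0, 1.0], [0.95, 0.3]]
negatives = mine_hard_negatives_sketch(query, corpus, positive_idx=0)
print(negatives)  # [3, 1]
```

Real miners usually add filters on top of this, for example skipping candidates that score too close to the positive, because those are often unlabeled true positives.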

I already trained a basic Sentence Transformer model myself as I was too curious 👀

🧵

🧵

E.g. see the picture for MTEB v2 Multilingual performance.

🧵

- Very competitive with ModernBERT at equivalent sizes on English (GLUE, MTEB v2 English after finetuning)

E.g. see the picture for MTEB v2 English performance.

🧵

- Trained on data covering 1833 languages, incl. DCLM, FineWeb2, etc.

- 3 training phases: 2.3T tokens on 60 languages, 600B tokens on 110 languages, and 100B tokens on all 1833 languages.

- Also uses model merging and clever transitions between the three training phases.
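The post doesn't say which merging method mmBERT uses. As an illustration only, the simplest form of model merging is a weighted element-wise average of checkpoints that share the same architecture. The sketch below uses plain lists as stand-ins for tensors, and the parameter names are hypothetical.

```python
def merge_checkpoints(state_dicts, weights=None):
    """Linearly merge checkpoints that share parameter names and shapes.

    state_dicts: list of dicts mapping parameter name -> flat list of floats
                 (a stand-in for real tensors).
    weights: optional per-checkpoint weights summing to 1; defaults to a uniform average.
    """
    if weights is None:
        weights = [1.0 / len(state_dicts)] * len(state_dicts)
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1")
    merged = {}
    for name, values in state_dicts[0].items():
        merged[name] = [
            sum(w * sd[name][i] for w, sd in zip(weights, state_dicts))
            for i in range(len(values))
        ]
    return merged


# Two hypothetical checkpoints with identical parameter layouts.
ckpt_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
ckpt_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(merge_checkpoints([ckpt_a, ckpt_b]))
```

A uniform average gives `{'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}`. Non-uniform weights let one checkpoint dominate the result.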

🧵

- 2 model sizes: 42M non-embed (140M total) and 110M non-embed (307M total)

- Uses the ModernBERT architecture + Gemma2 multilingual tokenizer (so: flash attention, alternating global/local attention, sequence packing, etc.)

- Max. seq. length of 8192 tokens
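The gap between total and non-embedding parameters is essentially the token embedding matrix, i.e. roughly vocabulary size × hidden size. The quick check below assumes the Gemma 2 tokenizer's vocabulary of 256,000 tokens, which the post doesn't state. Under that assumption, the implied hidden sizes come out near 384 and 768. The post's figures are rounded, so treat this as an approximation.

```python
VOCAB_SIZE = 256_000  # assumption: Gemma 2 tokenizer vocabulary size


def implied_hidden_size(non_embed_params, total_params, vocab_size=VOCAB_SIZE):
    """Estimate the hidden size from the embedding-parameter gap (vocab_size x hidden)."""
    return (total_params - non_embed_params) / vocab_size


# (non-embedding, total) parameter counts as given in the post; labels are illustrative.
sizes = {"smaller model": (42e6, 140e6), "larger model": (110e6, 307e6)}
for name, (non_embed, total) in sizes.items():
    hidden = implied_hidden_size(non_embed, total)
    print(f"{name}: {(total - non_embed) / 1e6:.0f}M embedding params, hidden size ~{hidden:.0f}")
```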

🧵

In one of the most requested releases I've seen, @jhuclsp.bsky.social has trained state-of-the-art massively multilingual encoders using the ModernBERT architecture: mmBERT.

Stronger than existing models at their sizes, while also much faster!

Details in 🧵

🧵

- Outperforms every other embedding model under 500M parameters on Multilingual & English MTEB

🧵

- Supports 100+ languages, trained on a 320B-token multilingual corpus

- Compatible with Sentence-Transformers, LangChain, LlamaIndex, Haystack, txtai, Transformers.js, ONNX Runtime, and Text-Embeddings-Inference

- Matryoshka-style dimensionality reduction (512/256/128)
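Matryoshka-style training concentrates the most important information in the leading dimensions. An embedding can then be shortened by keeping its first k values and re-normalizing them. The snippet below is a minimal sketch on a made-up 8-dimensional vector. In practice, recent Sentence Transformers versions expose this through a `truncate_dim` option, so you would rarely write it by hand.

```python
import math


def truncate_embedding(embedding, dim):
    """Keep the first `dim` values, then L2-normalize so dot products stay cosine scores."""
    if dim > len(embedding):
        raise ValueError("dim cannot exceed the embedding size")
    head = embedding[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        return head  # nothing to normalize
    return [x / norm for x in head]


full = [0.6, 0.8, 0.1, -0.2, 0.05, 0.0, 0.03, -0.01]  # toy 8-dim embedding
for dim in (4, 2):
    print(dim, truncate_embedding(full, dim))
```

The real model's reduced sizes would be 512, 256, or 128 instead of 4 and 2. Smaller vectors trade some retrieval quality for cheaper storage and faster search.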

🧵

Details in 🧵:

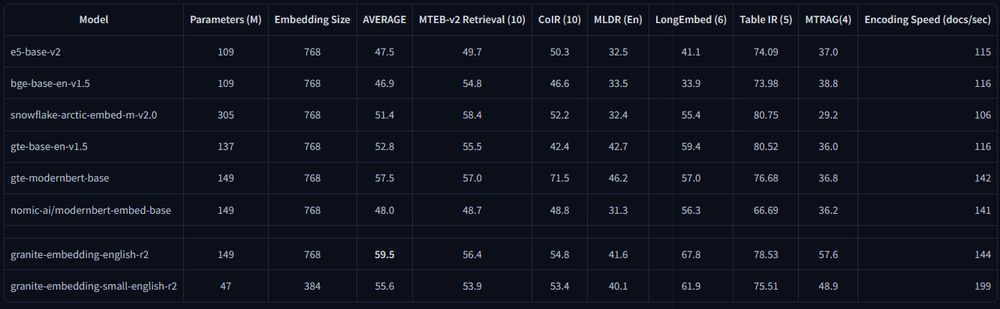

- Strong on common benchmarks like MTEB, MLDR, CoIR, BEIR

- Outperforms their previous equivalently sized models

- Beats other similarly sized models on various benchmarks, but your own evaluation is recommended

🧵

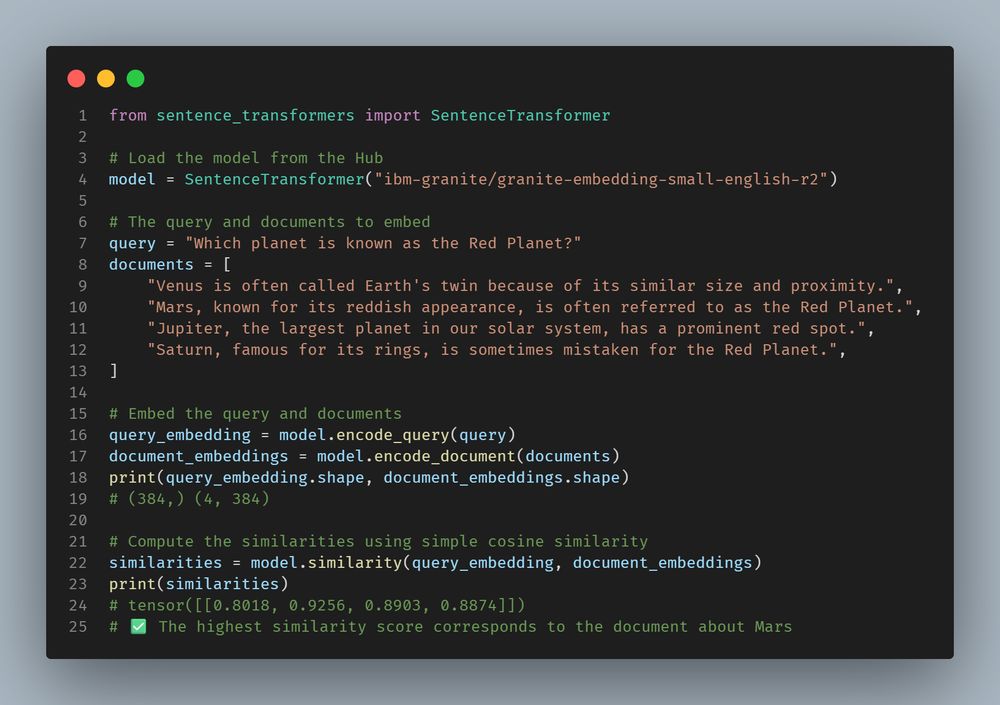

- 2 models, with 47M and 149M parameters: extremely performant, even on CPUs

- Both use the ModernBERT architecture, i.e. alternating global & local attention, flash attention 2, etc.

- A maximum sequence length of 8192 tokens
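The sketch below makes "alternating global & local attention" concrete. The schedule and window size come from the ModernBERT paper, not this post: every third layer attends globally, and the remaining layers use a 128-token sliding window. The code builds boolean attention masks under those assumptions and uses a tiny window so the pattern is visible on 6 tokens.

```python
def is_global_layer(layer_idx, global_every_n=3):
    """Every n-th layer (starting at layer 0) uses global attention; the rest are local."""
    return layer_idx % global_every_n == 0


def attention_mask(seq_len, layer_idx, window=128, global_every_n=3):
    """Boolean mask where mask[i][j] is True if token i may attend to token j.

    Local layers use a symmetric sliding window: window // 2 tokens on each side.
    """
    if is_global_layer(layer_idx, global_every_n):
        return [[True] * seq_len for _ in range(seq_len)]
    half = window // 2
    return [[abs(i - j) <= half for j in range(seq_len)] for i in range(seq_len)]


# Toy setting: window=2 so the local band is visible on a 6-token sequence.
for layer in range(3):
    kind = "global" if is_global_layer(layer) else "local"
    print(f"layer {layer} ({kind}):")
    for row in attention_mask(6, layer, window=2):
        print("".join("x" if allowed else "." for allowed in row))
```

Local layers only look at a fixed-size neighborhood, so their cost grows linearly with sequence length rather than quadratically. This is part of what makes long inputs up to 8192 tokens affordable.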

🧵

Details in 🧵:

🧵

sbert.net/docs/sentenc...

🧵
