That seems...wrong.

lingbuzz.net/lingbuzz/007...

A lot has happened since, but I know where I'd put my money in predicting which approach will figure out language.

It reflected a long interest in animal minds: “One alternative alone is left, namely, that worms, although standing low in the scale of organization, possess some degree of intelligence.”

🧪 🦋🦫 #HistSTM #philsci #pschsky #cogsci

It’s exciting to see recent experiments pushing it to its limits, hopefully leading to new directions.

🧠📈

www.biorxiv.org/content/10.1...

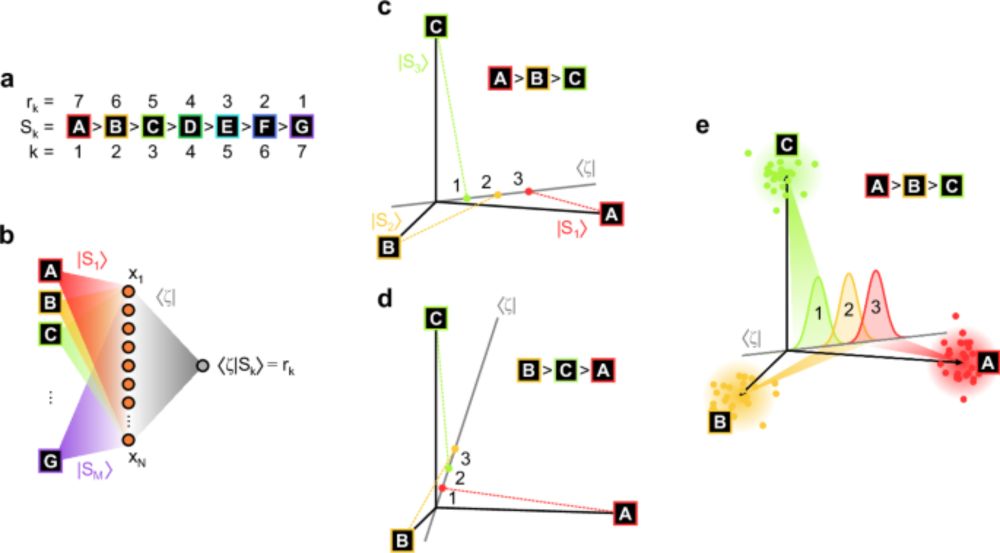

tl;dr: if you repeatedly give an animal a stimulus sequence XXXY, then throw in the occasional XXXX, there are large responses to the Y in XXXY, but not to the final X in XXXX, even though that's statistically "unexpected".

🧠📈 🧪

Thinking hard feels unpleasant

The unpleasantness of thinking:

A meta-analytic review of the association between mental effort and negative affect. 🏺🧪

psycnet.apa.org/record/2025-...

What are the behavioural and learning consequences of these biased representations?

I discuss this question in a new blog post: tinyurl.com/32ys9k8d

(1/4)

#neuroscience 🧠🤖 #VisionScience

doi.org/10.1126/scie...

#neuroscience

@cantlonlab.bsky.social

rdcu.be/dDoBt

www.nature.com/articles/s41...

#Neuroscience

But my high score is 53 seconds.

www.youtube.com/watch?v=_sTD...
