2015-2025: it turns out there has been hardly any improvement. AI bubble?

GPT is at 70% for this task, whereas the best methods get close to 85%.

Leaderboard: huggingface.co/spaces/ml-jk...

Paper: arxiv.org/abs/2511.14744

"Value-aware Importance Weighting for Off-policy Reinforcement Learning"

proceedings.mlr.press/v232/de-asis...

"The Information Dynamics of Generative Diffusion"

This paper connects entropy production, the divergence of vector fields, and spontaneous symmetry breaking.

Link: arxiv.org/abs/2508.19897

“In our results, xLSTM showcases state-of-the-art accuracy, outperforming 23 popular anomaly detection baselines.”

Again, xLSTM excels in time series analysis.

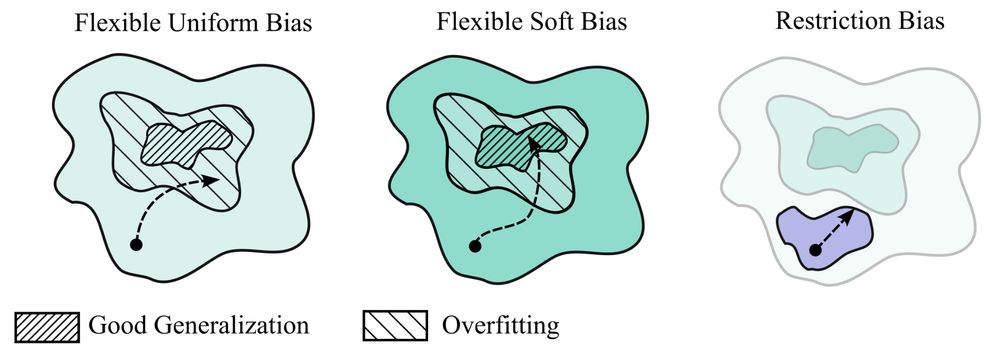

🤯 Why does flow matching generalize? Did you know that the flow matching target you're trying to learn *can only generate training points*?

With @quentinbertrand.bsky.social @annegnx.bsky.social @remiemonet.bsky.social 👇👇👇
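The claim that the exact flow matching target only reproduces training points can be checked numerically. The sketch below is a standard textbook setup, not necessarily the exact one used in the thread: it assumes straight-line paths x_t = (1 - t) x0 + t x1 with a standard Gaussian source and an empirical data distribution. Under these assumptions the loss minimizer has a closed form, namely a softmax-weighted pull toward the training points. The helper names `optimal_velocity` and `sample` are hypothetical.

```python
import numpy as np

def optimal_velocity(x, t, train):
    """Closed-form flow matching target u*(x, t) for an empirical data distribution.

    Assumption: linear paths x_t = (1 - t) * x0 + t * x1 with x0 ~ N(0, I),
    so p_t(x | x_i) = N(x; t * x_i, (1 - t)^2 I).
    x: (n, d) current positions, train: (m, d) training points, 0 <= t < 1.
    """
    sigma = 1.0 - t
    # Offsets between each x and each scaled training point t * x_i: shape (n, m, d)
    diff = x[:, None, :] - t * train[None, :, :]
    logits = -np.sum(diff**2, axis=-1) / (2.0 * sigma**2)
    # Posterior weights w_i(x, t): numerically stable softmax over training points
    logits -= logits.max(axis=1, keepdims=True)
    w = np.exp(logits)
    w /= w.sum(axis=1, keepdims=True)
    # u*(x, t) = (E[x1 | x_t = x] - x) / (1 - t)
    x1_hat = w @ train
    return (x1_hat - x) / sigma

def sample(train, n_samples, n_steps=1000, seed=0):
    """Integrate the closed-form field with explicit Euler from t = 0 to t = 1."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, train.shape[1]))  # x0 ~ N(0, I)
    dt = 1.0 / n_steps
    for k in range(n_steps):  # evaluates the field at t = 0, dt, ..., 1 - dt
        x = x + dt * optimal_velocity(x, k * dt, train)
    return x

# Usage: 5 hypothetical training points in 2D
train = np.random.default_rng(1).normal(size=(5, 2)) * 3.0
gen = sample(train, n_samples=200)
# Distance from each generated sample to its nearest training point
d = np.linalg.norm(gen[:, None, :] - train[None, :, :], axis=-1).min(axis=1)
print(d.max())  # expected to be essentially zero: samples land on training points
```

On the last Euler step the update collapses to the posterior mean of the training points. By then that posterior is effectively one-hot, so every trajectory ends on a training point. A model that generalizes therefore cannot be fitting this target exactly.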

How can you guide diffusion and flow-based generative models when data is scarce but you have domain knowledge? We introduce Minimum Excess Work, a physics-inspired method for efficiently integrating sparse constraints.

Thread below 👇 https://arxiv.org/abs/2505.13375

Try our interactive app for prompting MHNfs — a state-of-the-art model for few-shot molecule–property prediction. No coding or training needed. 🚀

📄 Paper:

pubs.acs.org/doi/10.1021/...

🖥️ App:

huggingface.co/spaces/ml-jk...

That was quite popular, and here is a synthesis of the responses:

FPI: Morning poster session

DeLTa: Afternoon poster session

#SDE #Bayes #GenAI #Diffusion #Flow

See you tomorrow at poster 13, 10-12:30.

#CombinatorialOptimization #StatisticalPhysics #DiffusionModels

Published in @apsphysics.bsky.social Phys. Rev. X, with Rana Adhikari & Yehonathan Drori @ligo.org @caltech.edu @mpi-scienceoflight.bsky.social

journals.aps.org/prx/abstract...

Extremely happy to see this paper online after 3.5 years of work.

🧵1/5

Meet the fastest 7B language model out there. Based on the mLSTM!

Paper: arxiv.org/abs/2503.13427

Given how easy it is to derive, it's surprising how recently it was discovered (the '50s). It was published a while later, when Tweedie wrote to Stein about it.

1/n
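The post does not name the result outright. The mention of Tweedie suggests it is Tweedie's formula, and under that assumption the derivation really is short. Take y = x + ε with ε ~ N(0, σ²I), write the marginal of y, and differentiate under the integral using ∇_y N(y; x, σ²I) = N(y; x, σ²I)(x - y)/σ²:

$$p(y) = \int p(x)\,\mathcal{N}(y; x, \sigma^2 I)\,dx, \qquad \nabla_y p(y) = \int p(x)\,\mathcal{N}(y; x, \sigma^2 I)\,\frac{x - y}{\sigma^2}\,dx$$

Dividing by p(y) turns the integral into a posterior expectation:

$$\nabla_y \log p(y) = \frac{\mathbb{E}[x \mid y] - y}{\sigma^2} \quad\Longrightarrow\quad \mathbb{E}[x \mid y] = y + \sigma^2\,\nabla_y \log p(y)$$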

We have many open positions in machine learning, deep learning, and LLMs, for both postdocs and PhD students!

Join us!

xLSTM outperforms LSTM.

"These findings mark the potential of xLSTM for enhancing DRL-based stock trading systems."

This reinforcement learning approach uses xLSTM in both the actor and the critic, which improves performance.

Here it is: arxiv.org/abs/2503.08247. Great work by Linda Mauron at the CQS Lab, check it out! (1/4)

Here: thomwolf.io/blog/scienti...

It's an extension of this interview discussion from the AI summit: youtu.be/AxBd3G0lFLs?...