📢 I'm recruiting PhD students in Computer Science or Information Science @cornellbowers.bsky.social!

If you're interested, apply to either department (yes, either program!) and list me as a potential advisor!

naming the big five personality traits: definitely agi

saxon.me/blog/2025/co...

prompt: “I’m trying to dial in this v60 of huatusco with my vario. temp / grind recommendations?”

glad i did it, hope i don’t have to do it again

huggingface.co/openai/gpt-o...

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

Looking for practical methods for settings where human annotations are costly.

A few examples in thread ↴

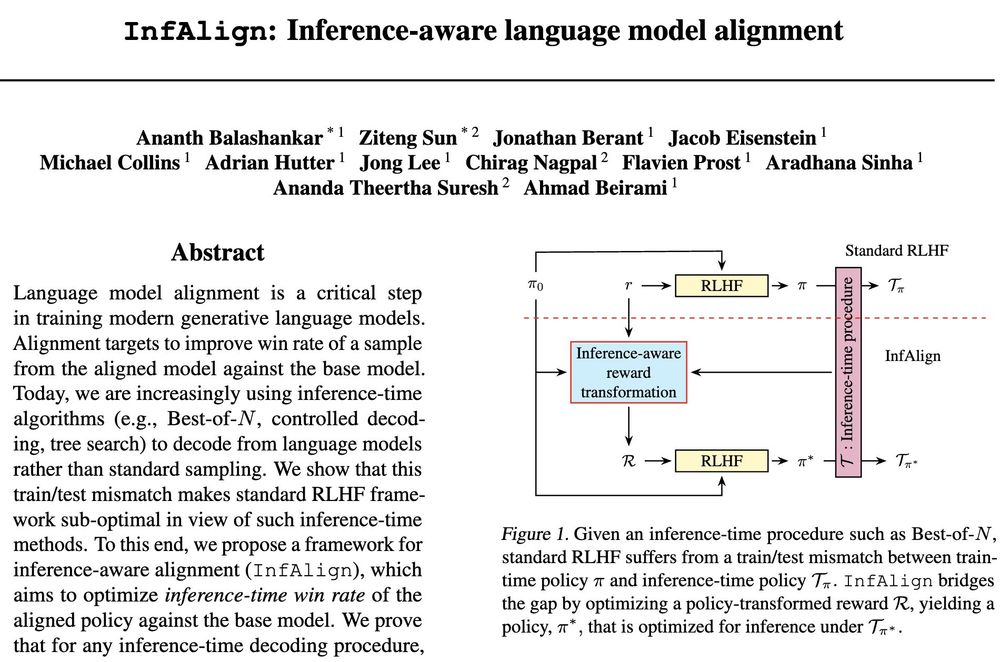

w/ Ananth Balashankar & @jacobeisenstein.bsky.social, we present a reinforcement learning framework in view of test-time scaling. We show how to optimally calibrate & transform rewards to obtain optimal performance with a given test-time algorithm.

𝘊𝘢𝘯 𝘸𝘦 𝘢𝘭𝘪𝘨𝘯 𝘰𝘶𝘳 𝘮𝘰𝘥𝘦𝘭 𝘵𝘰 𝘣𝘦𝘵𝘵𝘦𝘳 𝘴𝘶𝘪𝘵 𝘢 𝘨𝘪𝘷𝘦𝘯 𝘪𝘯𝘧𝘦𝘳𝘦𝘯𝘤𝘦-𝘵𝘪𝘮𝘦 𝘱𝘳𝘰𝘤𝘦𝘥𝘶𝘳𝘦?

Check out below.

w/ @jacobeisenstein.bsky.social & Alekh Agarwal, we present a theoretical characterization of best-of-N (a simple yet effective method for test-time scaling & alignment). Our results justify the widespread use of BoN as a strong baseline in this space.

- improving agents

- scaling inference-time compute

- preference alignment

- jailbreaking models

How does 𝐁𝐨𝐧 work? and why is it so strong?

Find some answers in the paper we wrote over two Christmas breaks!🧵
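For readers new to best-of-N, here is a minimal sketch of the general idea: draw N candidate responses and keep the one a reward function scores highest. This is only an illustration, not the setup analyzed in the paper. The names `best_of_n`, `sample`, and `reward` are hypothetical placeholders for a real model's sampler and a real reward model.

```python
from typing import Callable


def best_of_n(
    prompt: str,
    sample: Callable[[str], str],         # hypothetical: draws one response for a prompt
    reward: Callable[[str, str], float],  # hypothetical: scores a (prompt, response) pair
    n: int,
) -> str:
    """Draw n candidate responses and return the one with the highest reward."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Sample n candidates independently from the same sampler.
    candidates = [sample(prompt) for _ in range(n)]
    # Keep the candidate the reward function likes best (first one wins ties).
    return max(candidates, key=lambda response: reward(prompt, response))
```

Larger `n` spends more inference-time compute in exchange for a better chance that at least one candidate scores well.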

Or accurate but expensive?

How to split a limited annotation budget between different types of judges? 👩‍⚖️🤖🦧

www.arxiv.org/abs/2506.07949

He asked me to guess how many Nerds were in a box of Nerds and I did and asked if I was right and he said “well, we’ll never know” and I said “of course we can know.” And he said “I’m not gonna count them all.” And I said: “you don’t have to” and explained MATH.