http://ptshaw.com

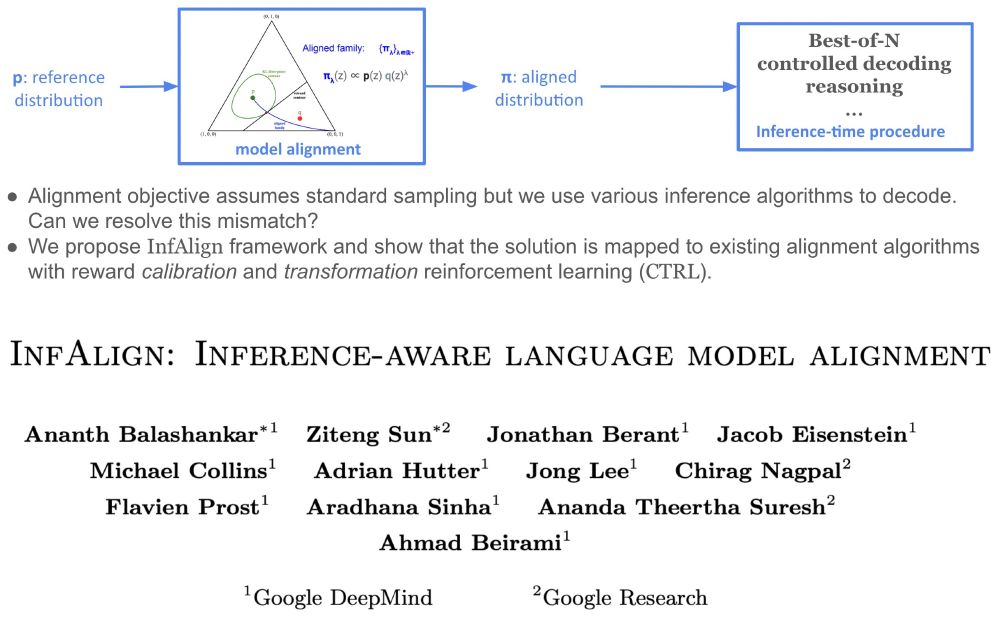

The alignment optimization objective implicitly assumes 𝘴𝘢𝘮𝘱𝘭𝘪𝘯𝘨 from the resulting aligned model. But we increasingly use different, and sometimes sophisticated, inference-time compute algorithms.

How to resolve this discrepancy?🧵
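A toy sketch of the discrepancy, under loudly stated assumptions: a KL-regularized alignment objective scores a policy by its expected reward under sampling, E_{y~π}[r(y)]. If responses are instead produced by an inference-time procedure, the output distribution (and the reward actually achieved) differs. Best-of-N selection against the same reward is used here only as one illustrative example of such a procedure. The policy, rewards, and function names are hypothetical and not taken from the paper.

```python
# Hypothetical toy setup: three possible responses, a policy over them, and a reward.
policy = {"a": 0.5, "b": 0.3, "c": 0.2}
reward = {"a": 0.0, "b": 1.0, "c": 2.0}


def expected_reward(dist, reward):
    """E_{y ~ dist}[r(y)]: what the alignment objective scores when dist is the policy."""
    return sum(p * reward[y] for y, p in dist.items())


def best_of_n_distribution(policy, reward, n):
    """Exact output distribution of best-of-n: draw n i.i.d. samples, keep the highest-reward one.

    Assumes all rewards are distinct (no ties), so P(output = y) = F(y)^n - F(y-)^n,
    where F is the policy's CDF over responses ordered by reward.
    """
    dist = {}
    cdf = 0.0
    for y in sorted(policy, key=lambda y: reward[y]):
        prev = cdf
        cdf += policy[y]
        dist[y] = cdf ** n - prev ** n
    return dist


# Sampling vs. best-of-4 decoding from the same policy.
print(expected_reward(policy, reward))
print(expected_reward(best_of_n_distribution(policy, reward, 4), reward))
```

With these made-up numbers, sampling yields an expected reward of roughly 0.7, while best-of-4 decoding from the same policy yields roughly 1.53, so the quantity the objective optimizes is not the one the deployed procedure delivers.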

Pack 1: go.bsky.app/2VWBcCd

Pack 2: go.bsky.app/Bw84Hmc

DM if you want to be included (or nominate people who should be!)

github.com/varungodbole...

AI: go.bsky.app/SipA7it

RL: go.bsky.app/3WPHcHg

Women in AI: go.bsky.app/LaGDpqg

NLP: go.bsky.app/SngwGeS

AI and news: go.bsky.app/5sFqVNS

You can also search all starter packs here: blueskydirectory.com/starter-pack...

ALTA is A Language for Transformer Analysis.

Because ALTA programs can be compiled to transformer weights, it provides constructive proofs of transformer expressivity. It also offers new analytic tools for *learnability*.

arxiv.org/abs/2410.18077