We found that if you simply delete positional embeddings after pretraining and recalibrate for <1% of the original budget, you unlock massive context windows. Smarter, not harder.

We found embeddings like RoPE aid training but bottleneck long-sequence generalization. Our solution’s simple: treat them as a temporary training scaffold, not a permanent necessity.

arxiv.org/abs/2512.12167

pub.sakana.ai/DroPE
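
To make the "scaffold" idea concrete, here is a toy sketch (not the authors' code) of what switching RoPE off means for a single attention logit. The function names `rope` and `score`, the `use_rope` flag, and the example vectors are all hypothetical. The recalibration step mentioned in the post is not shown, since the posts do not describe it.

```python
import math

def rope(vec, pos, base=10000.0):
    """Rotate consecutive pairs of `vec` by angles that grow with `pos` (standard RoPE)."""
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # per-pair frequency; i is the even element index
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out

def score(q, k, q_pos, k_pos, use_rope=True):
    """Unnormalised attention logit between one query and one key."""
    if use_rope:
        q, k = rope(q, q_pos), rope(k, k_pos)
    return sum(a * b for a, b in zip(q, k))

# Hypothetical query/key vectors, chosen only for illustration.
q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]

with_rope_near = score(q, k, 5, 3)
with_rope_shifted = score(q, k, 1005, 1003)  # same relative offset as above
with_rope_far = score(q, k, 1005, 3)         # much larger offset
without_near = score(q, k, 5, 3, use_rope=False)
without_far = score(q, k, 1005, 3, use_rope=False)
```

With RoPE on, the logit depends only on the offset between the two positions, so `with_rope_near` and `with_rope_shifted` agree while `with_rope_far` differs. With RoPE off, the logit ignores position entirely, so nothing in the score itself ties the model to the sequence lengths seen in training.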

"""The original paper claims Grassmann flow layers achieve perplexity "within 10-15% of size-matched Transformers" on Wikitext-2. ... using the exact architecture specified in the paper, reveals a 22.6% performance gap - significantly larger than claimed."""

Unfortunately the author didn't share any code and so far I only found one attempt at reproducing his findings.

elliotarledge.com used Claude Code and...

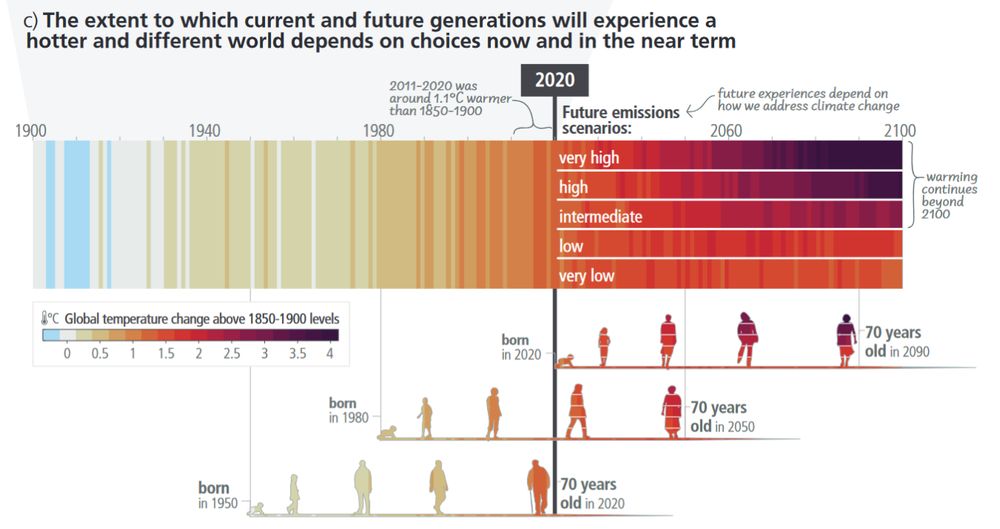

How? I'm adding new videos, deep dives & behind-the-scenes stories to Talking Climate.

Why? Because climate honesty and hope matter more than ever right now.

Join me on Patreon: bit.ly/47pCLaf

Or Substack: bit.ly/4ntfvhJ

Meet Seaweed-APT2: an 8B-param autoregressive GAN that streams 24fps video at 736×416—all in *1 network forward eval*. That’s 1440 frames/min, live, on a single H100 🚀

Project: seaweed-apt.com/2

Meanwhile the FTC: www.ftc.gov/news-events/...
