Ask us anything about OLMo, our family of fully-open language models. Our researchers will be on hand to answer your questions on Thursday, May 8 at 8am PST.

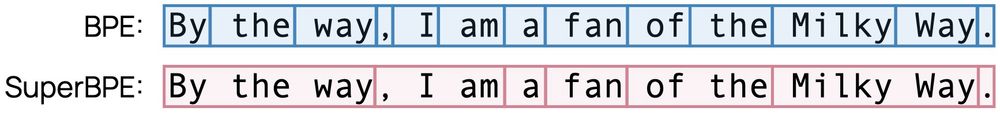

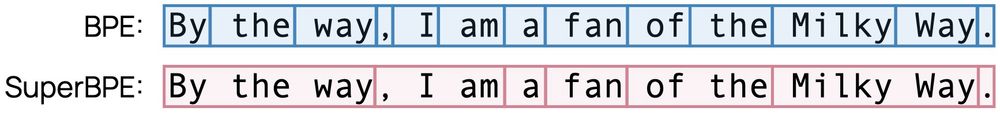

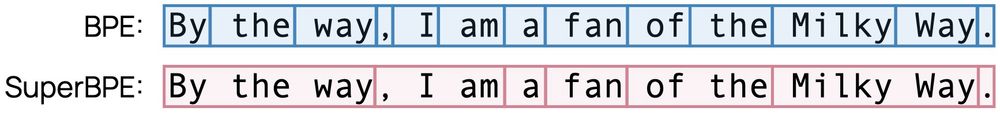

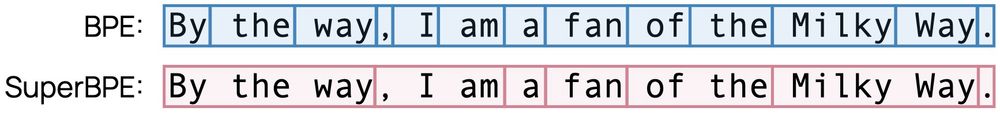

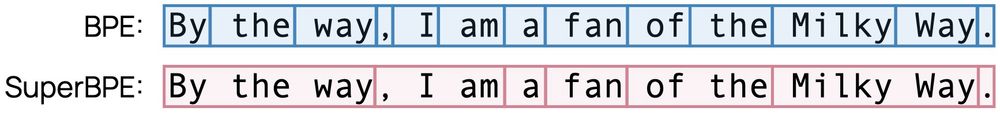

Enter SuperBPE, a tokenizer that lifts this restriction and brings substantial gains in efficiency and performance! 🚀

Details 👇

When pretraining at 8B scale, SuperBPE models consistently outperform the BPE baseline on 30 downstream tasks (+8% MMLU), while also being 27% more efficient at inference time.🧵

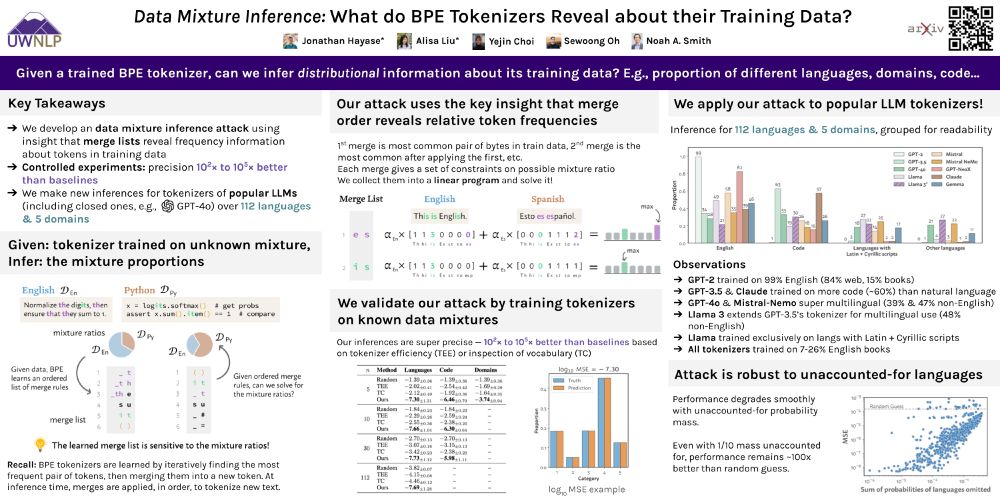

arxiv.org/abs/2407.16607

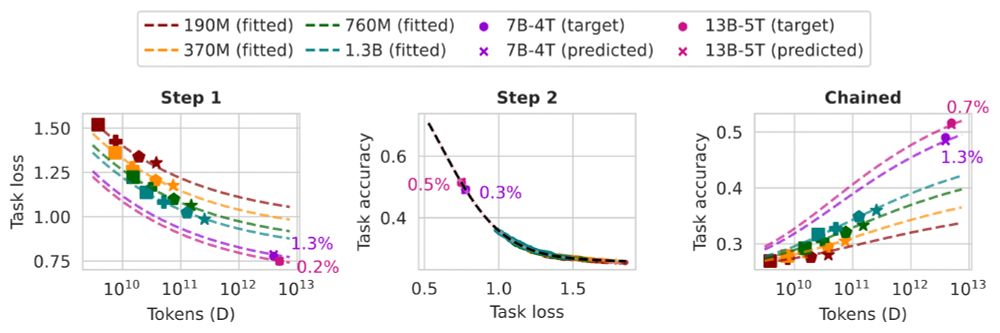

We develop task scaling laws and model ladders, which predict the accuracy on individual tasks by OLMo 2 7B & 13B models within 2 points of absolute error. The cost is 1% of the compute used to pretrain them.

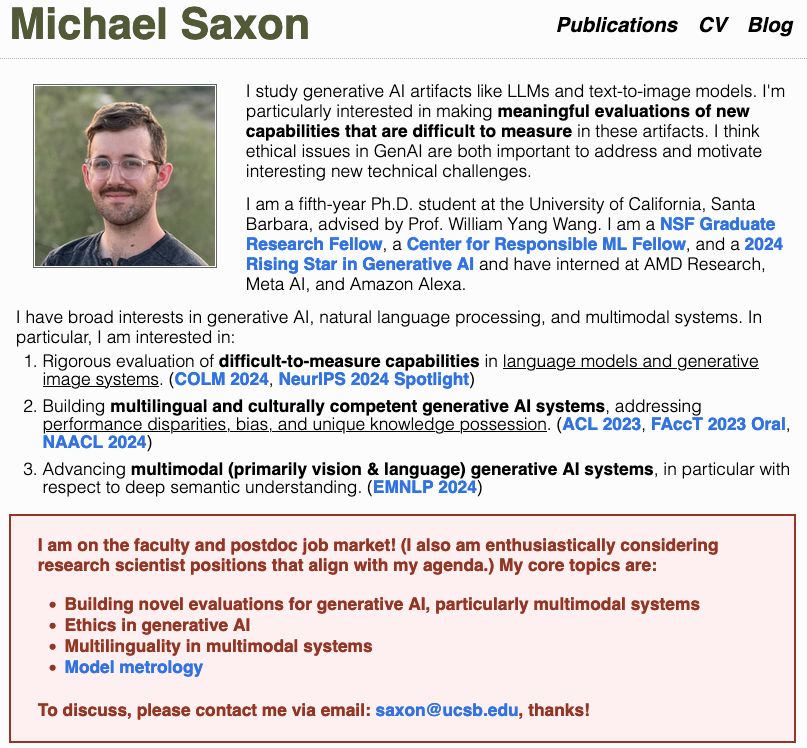

I'm searching for faculty positions/postdocs in multilingual/multicultural NLP, vision+language models, and eval for genAI!

I'll be at #NeurIPS2024 presenting our work on meta-evaluation for text-to-image faithfulness! Let's chat there!

Papers in🧵, see more: saxon.me

There are now several training configs that together reproduce the training runs that led to the final OLMo 2 models.

In particular, all the training data is available, tokenized and shuffled exactly as we trained on it!

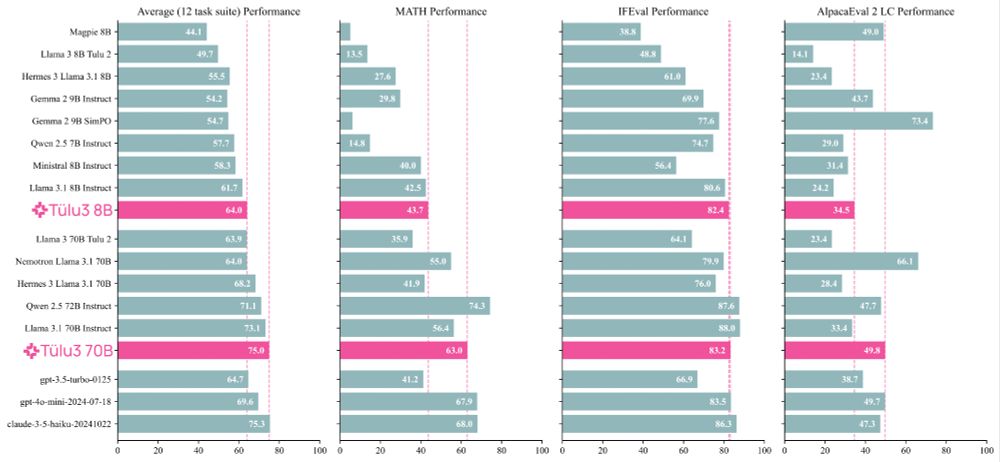

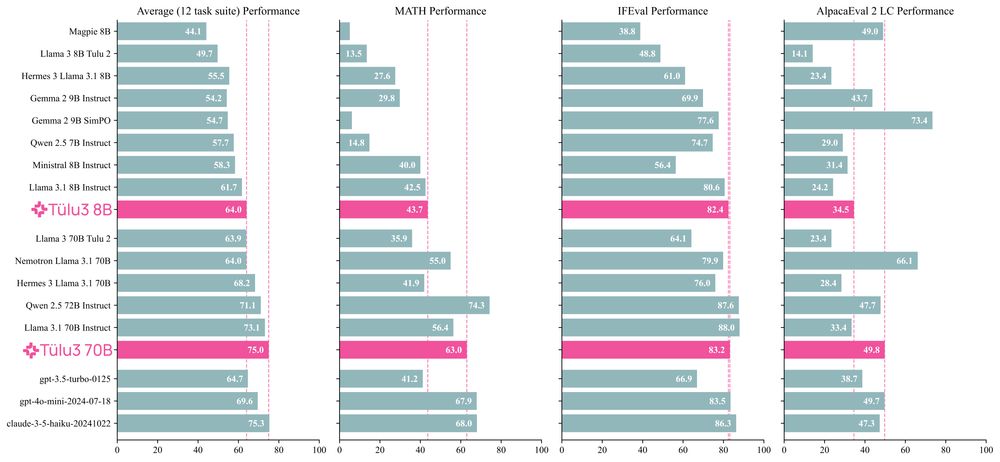

Simply the best fully open models yet.

Really proud of the work & the amazing team at @ai2.bsky.social

Paper: allenai.org/papers/tulu-...

Demo: playground.allenai.org

Code: github.com/allenai/open...

Eval: github.com/allenai/olmes

Notes

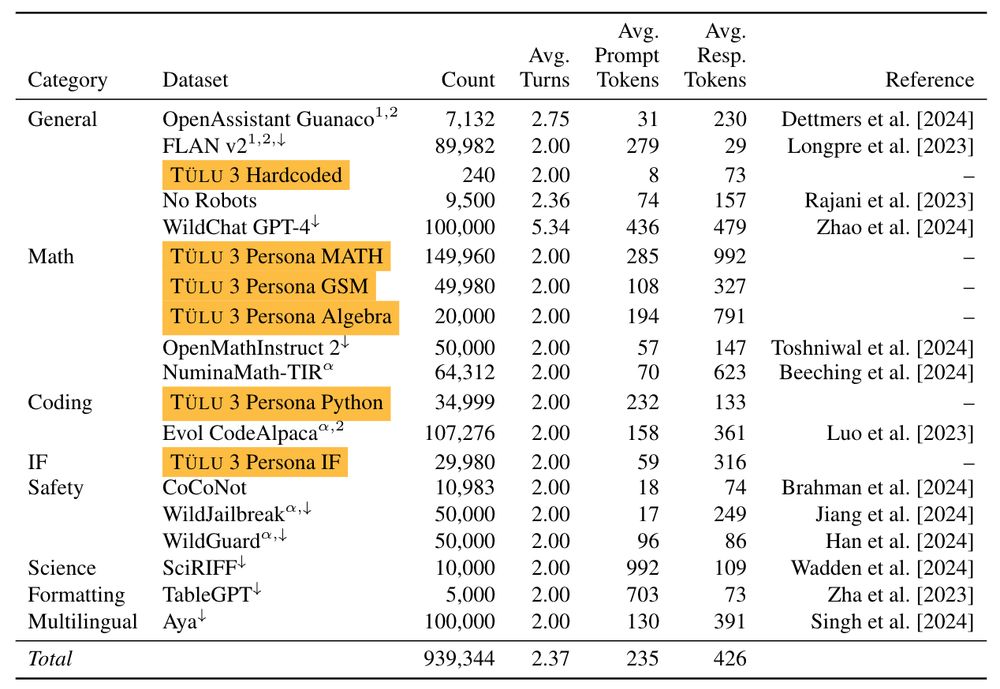

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇

Thread.

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai
