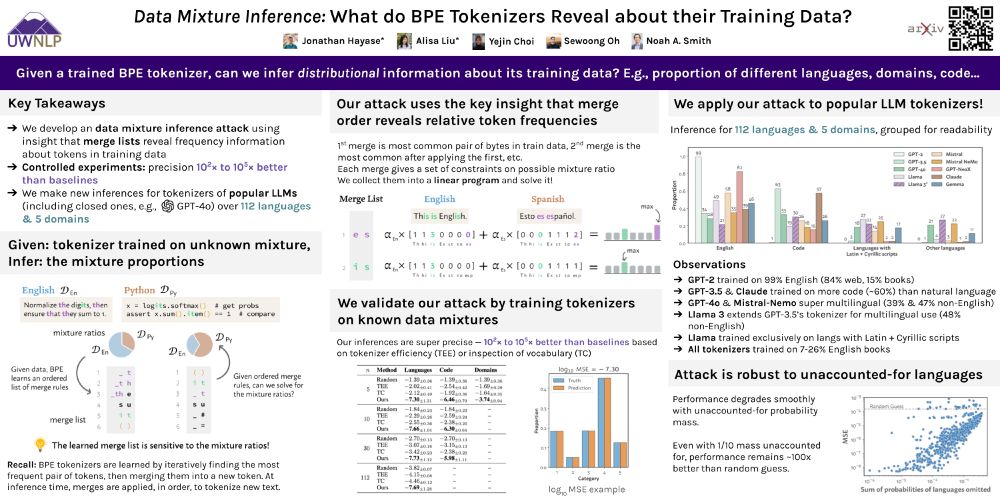

Paper: arxiv.org/abs/2503.13423

HF models & tokenizers: tinyurl.com/superbpe

This work would not have been possible w/o co-1st 🌟@jon.jon.ke🌟, @valentinhofmann.bsky.social @sewoong79.bsky.social @nlpnoah.bsky.social @yejinchoinka.bsky.social

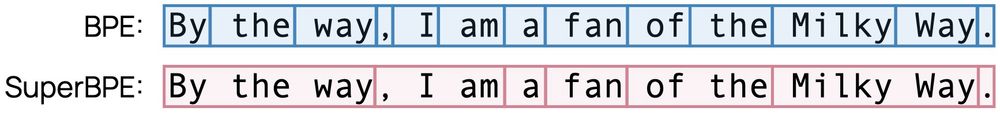

When pretraining at 8B scale, SuperBPE models consistently outperform the BPE baseline on 30 downstream tasks (+8% MMLU), while also being 27% more efficient at inference time.🧵

🔗 arxiv.org/abs/2407.16607
