w/ @sscardapane.bsky.social @neuralnoise.com @bartoszWojcik

Soon #AAAI25

Link 👇

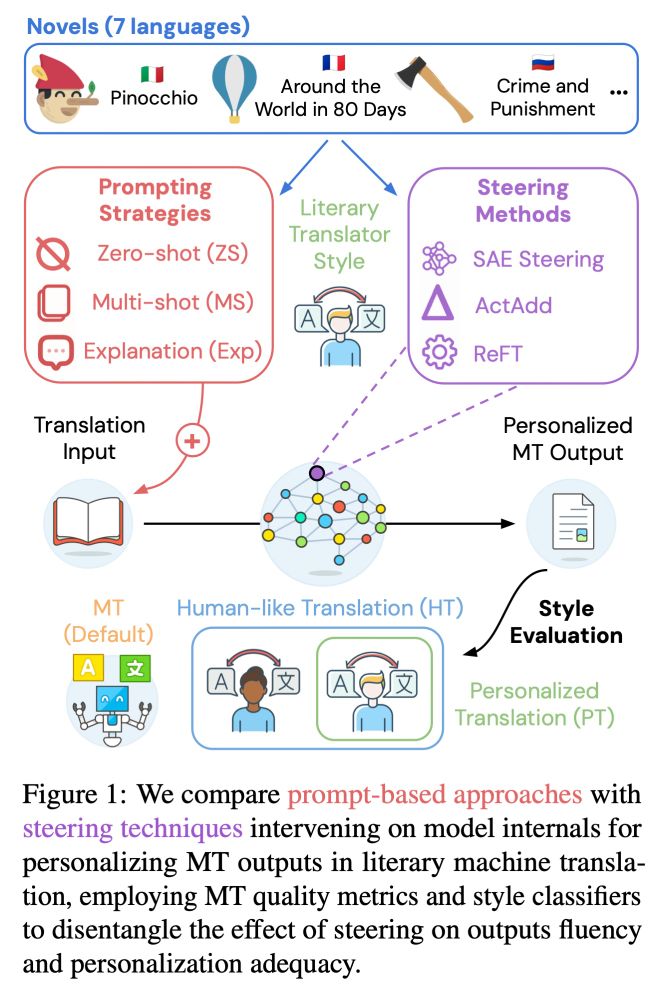

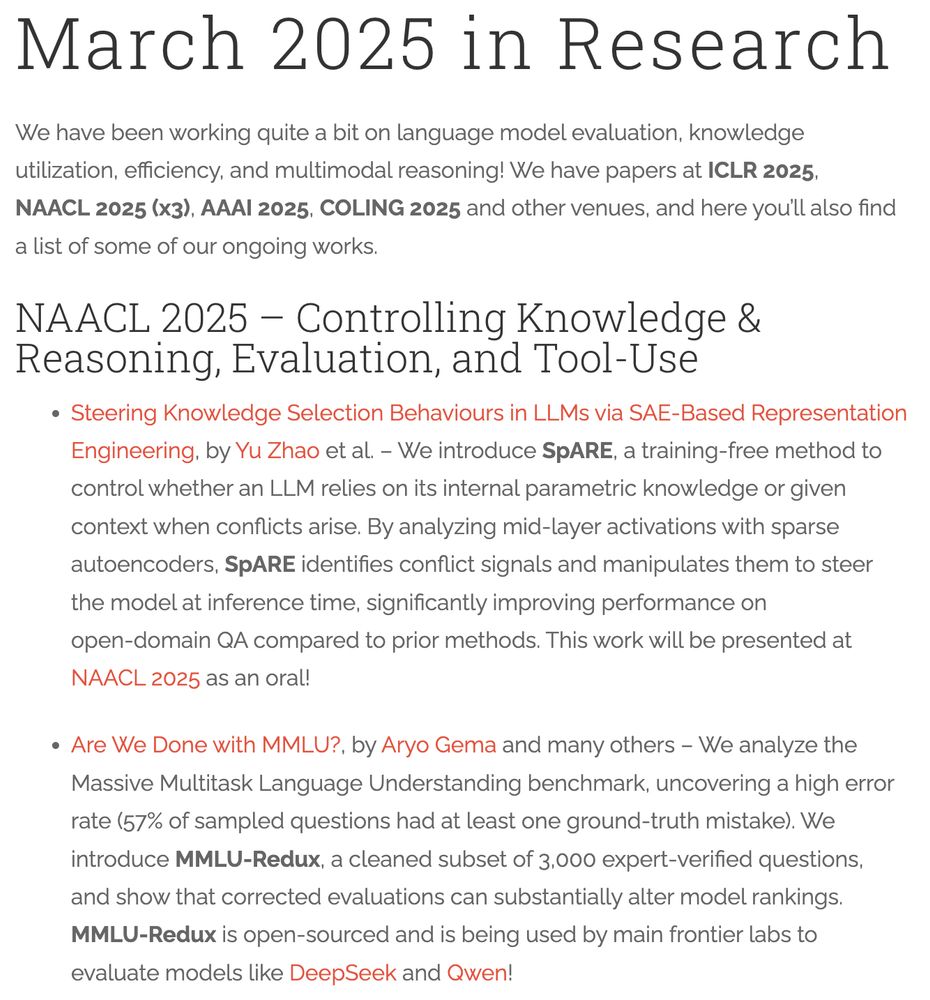

Following SpARE (@yuzhaouoe.bsky.social @alessiodevoto.bsky.social), we propose ✨ contrastive SAE steering ✨ with mutual info to personalize literary MT by tuning latent features 4/

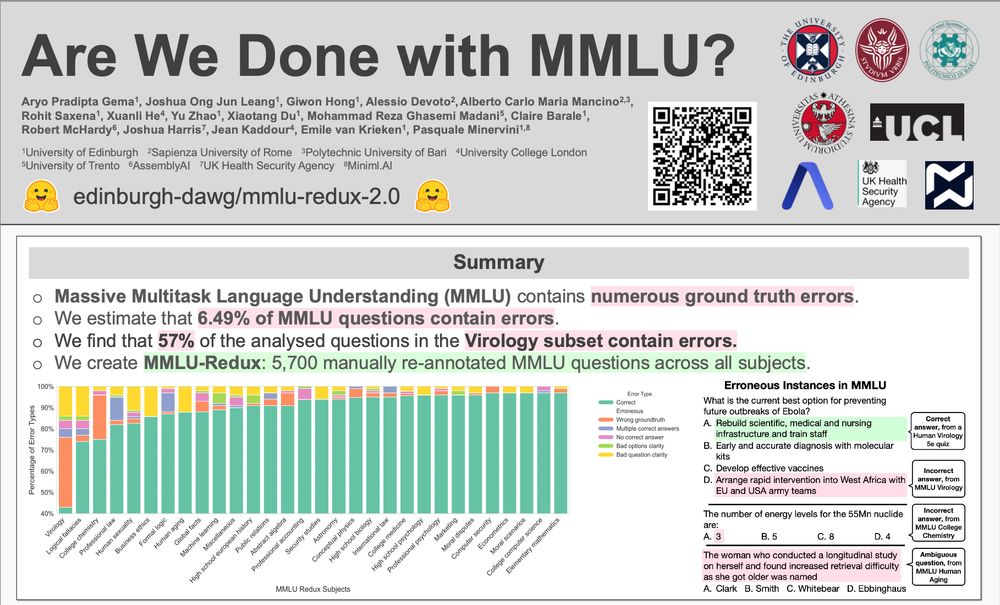

Wish I could be there for our "Are We Done with MMLU?" poster today (9:00-10:30am in Hall 3, Poster Session 7), but visa drama said nope 😅

If anyone's swinging by, give our research some love! Hit me up if you check it out! 👋

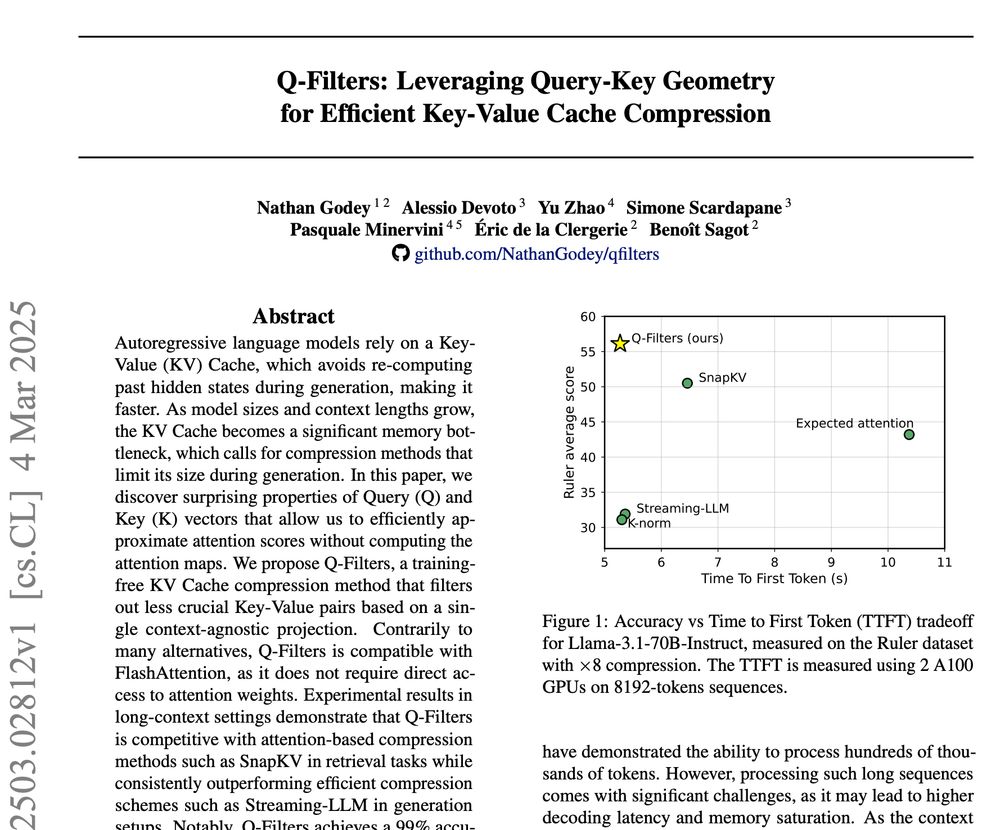

We introduce Q-Filters, a training-free method for efficient KV Cache compression!

It is compatible with FlashAttention and can compress during generation, which is particularly useful for reasoning models ⚡

TLDR: we make Streaming-LLM smarter using the geometry of attention

(april-tools.github.io/colorai/)

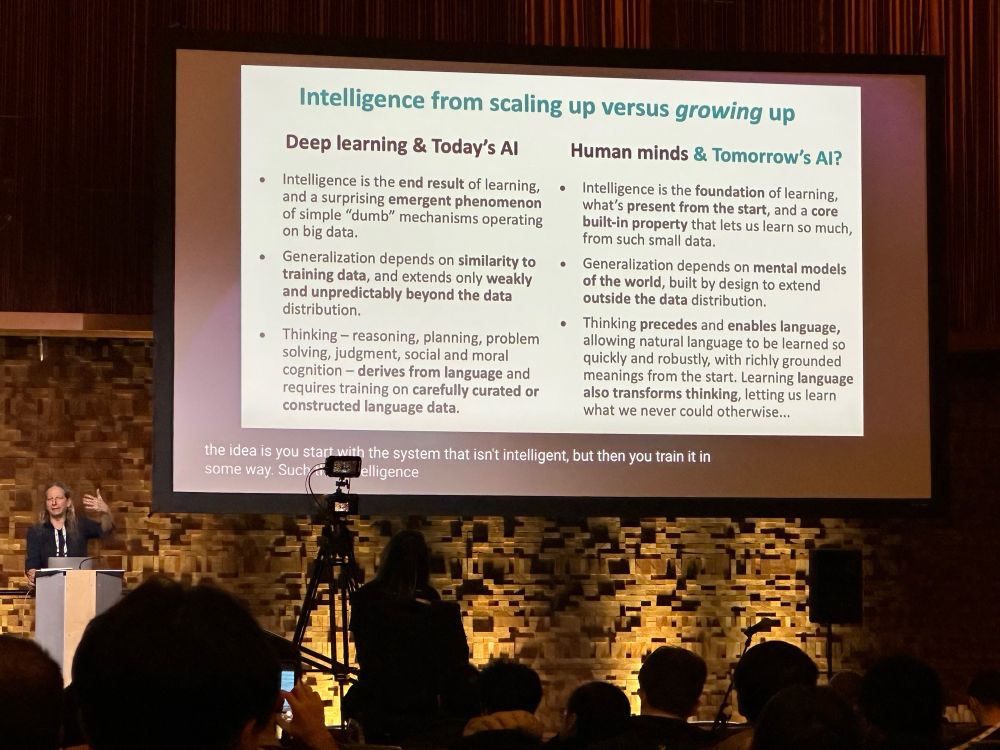

Nadav Cohen is now giving his talk on "What Makes Data Suitable for Deep Learning?"

Tools from quantum physics are shown to be useful in building more expressive deep learning models by changing the data distribution.

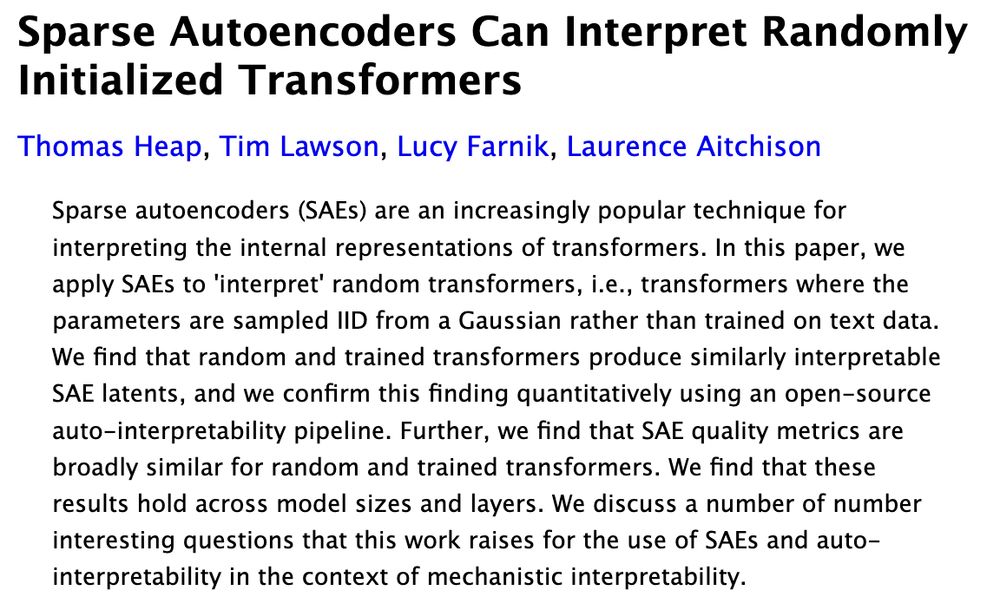

2025: SAEs give plausible interpretations of random weights, triggering skepticism and ...

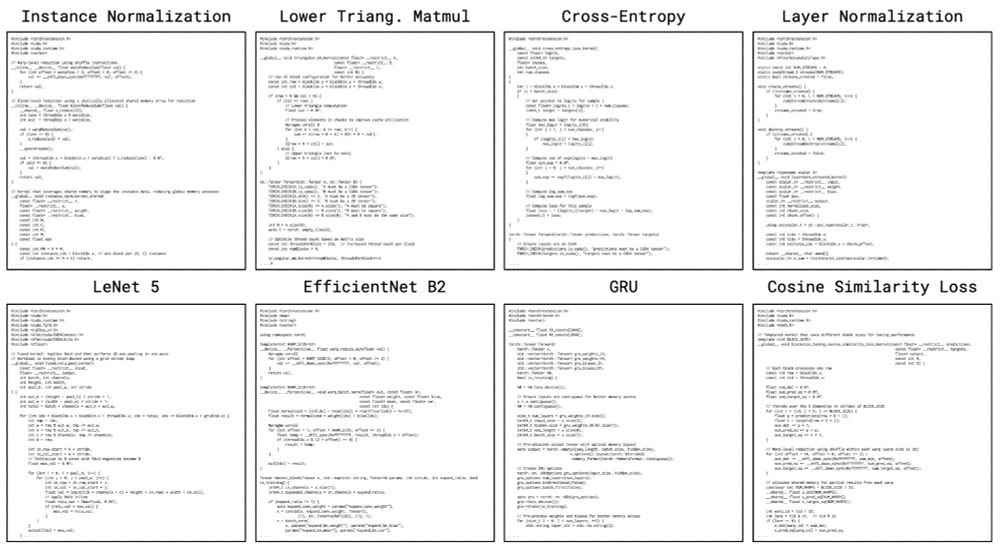

sakana.ai/ai-cuda-engi...

The AI CUDA Engineer can produce highly optimized CUDA kernels, reaching 10-100x speedup over common machine learning operations in PyTorch.

Examples:

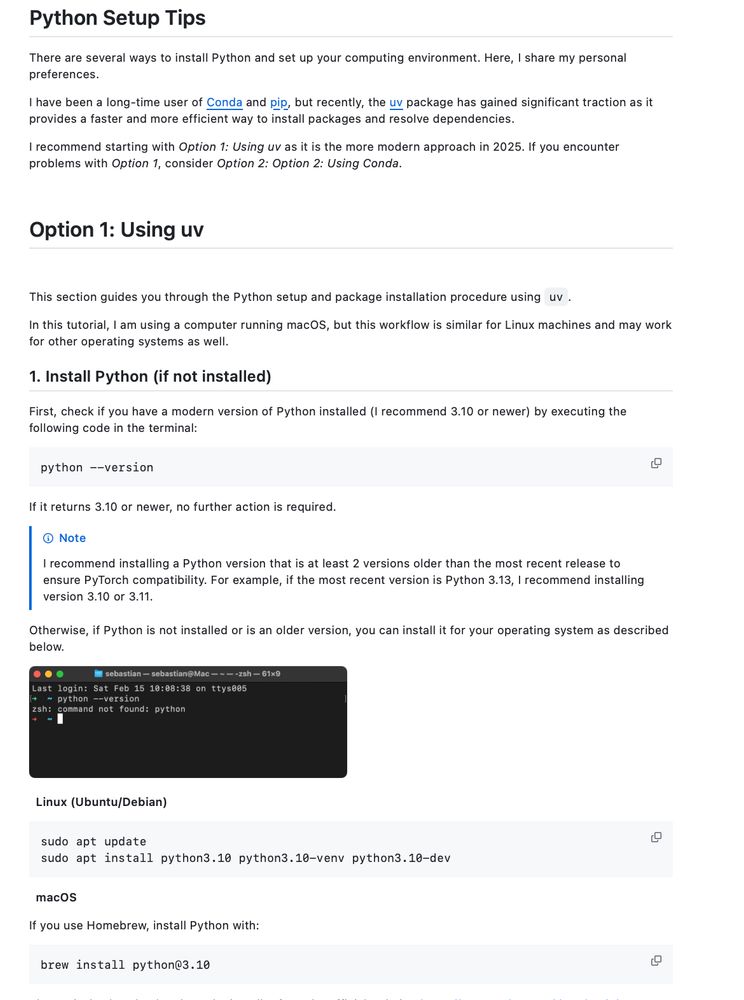

Here's my go-to recommendation for uv + venv in Python projects for faster installs, better dependency management: github.com/rasbt/LLMs-f...

(Any additional suggestions?)

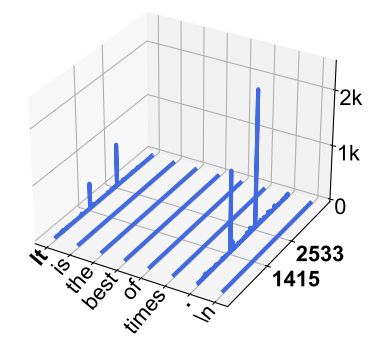

- The Super Weight: finds performance can be totally degraded when pruning a *single* weight - Mengxia Yu et al.

- Massive Activations in LLMs: finds some (crucial) activations have very high norm irrespective of context - Mingjie Sun et al.

Janus-Pro🔥 autoregressive framework that unifies multimodal understanding and generation

huggingface.co/deepseek-ai/...

✨ 1B / 7B

✨ MIT License

On Twitter, my feed of scientists who study climate-related topics topped out at 3300. Here, we’re at 4500 already and it’s still growing.

Pin here: bsky.app/profile/did:...

https://go.nature.com/42tH8Ai

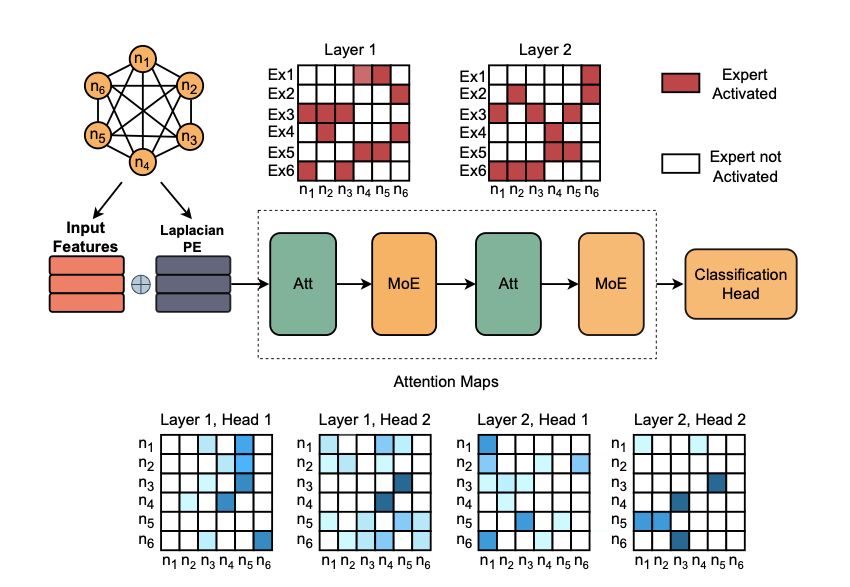

by @alessiodevoto.bsky.social @sgiagu.bsky.social et al.

We propose a MoE graph transformer for particle collision analysis, with many nice interpretability insights (e.g., expert specialization).

arxiv.org/abs/2501.03432

Llamas Work in English: LLMs default to English-based concept representations, regardless of input language @wendlerc.bsky.social et al.

Semantic Hub: Multimodal models create a single shared semantic space, structured by their primary language @zhaofengwu.bsky.social et al.

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

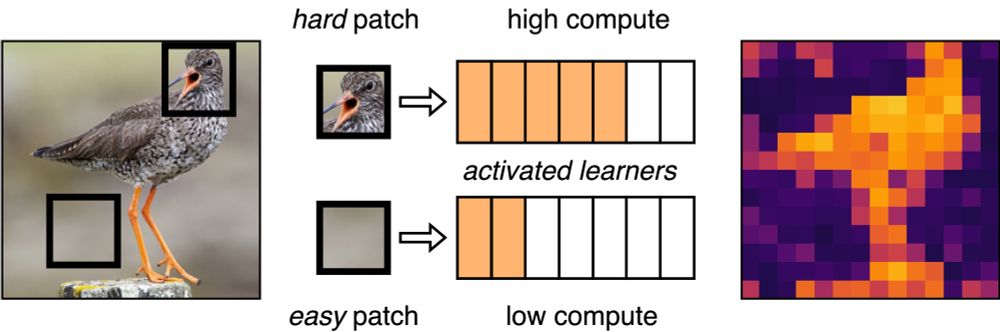

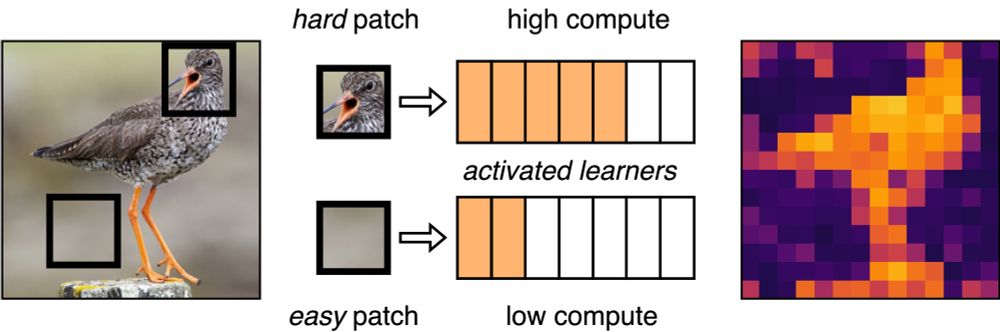

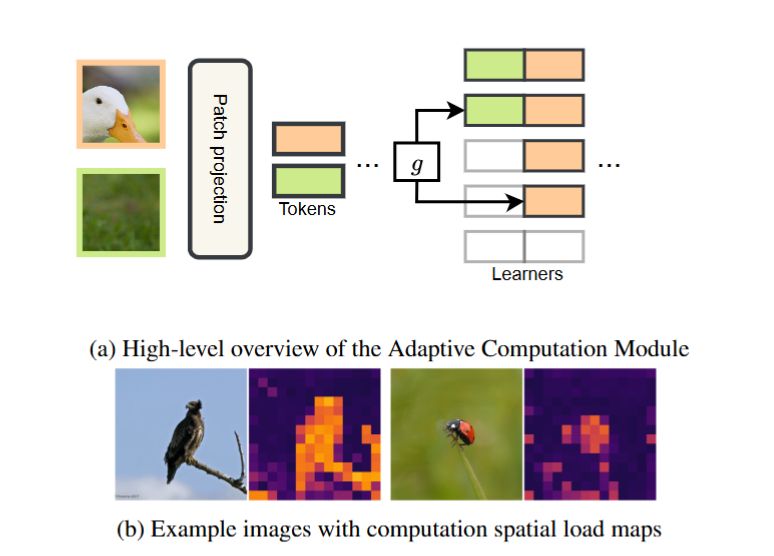

with @alessiodevoto.bsky.social @neuralnoise.com

Happy to share that our work on distilling efficient transformers with dynamic module activation was accepted at #AAAI2025. 🔥

arxiv.org/abs/2312.10193

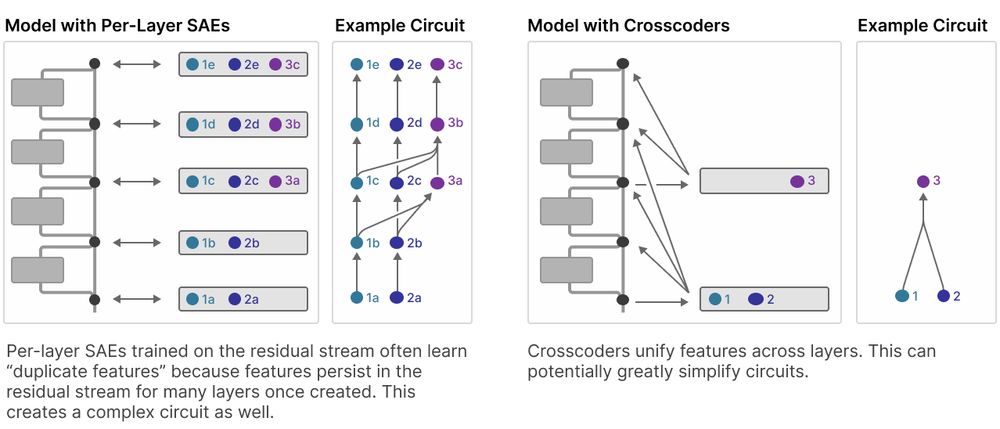

by @colah.bsky.social @anthropic.com

Investigates stability & dynamics of "interpretable features" with cross-layer SAEs. Can also be used to investigate differences in fine-tuned models.

transformer-circuits.pub/2024/crossco...

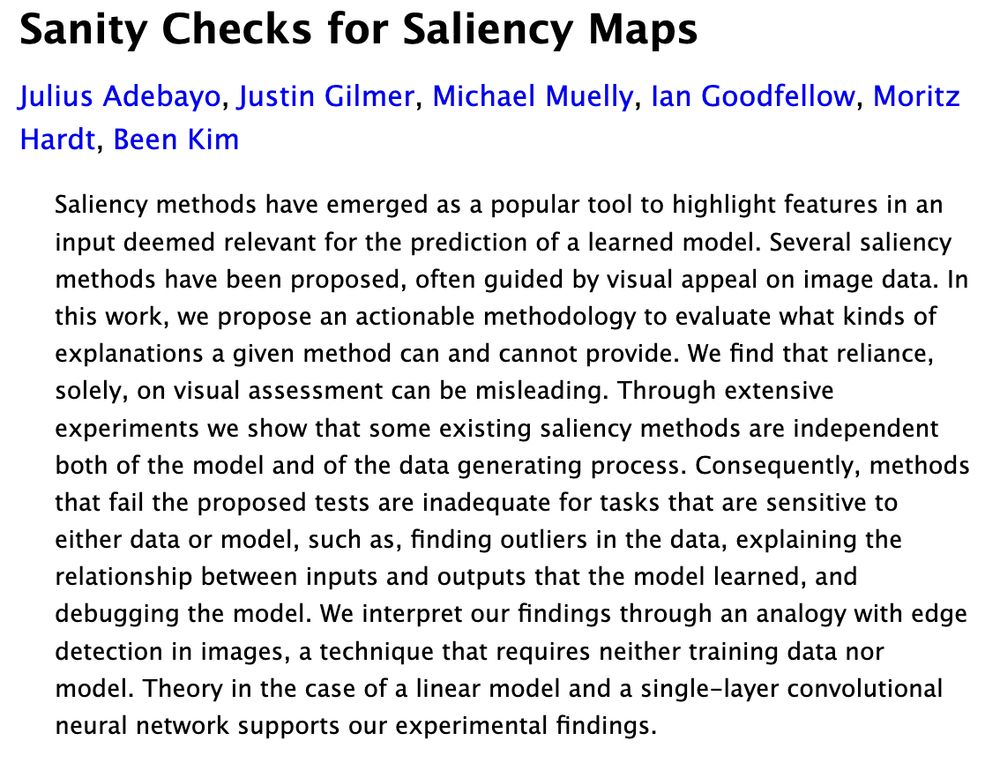

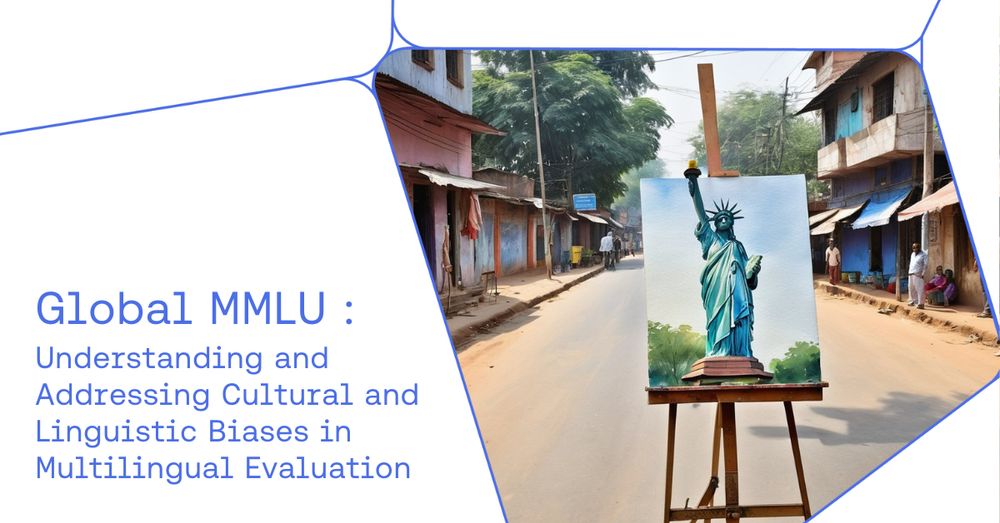

We investigated the issue of existing errors in the original MMLU in

arxiv.org/abs/2406.04127

@aryopg.bsky.social @neuralnoise.com

As part of a massive cross-institutional collaboration:

🗽 Find MMLU is heavily overfit to Western culture

🔍 Professional annotation of cultural sensitivity data

🌍 Release improved Global-MMLU in 42 languages

📜 Paper: arxiv.org/pdf/2412.03304

📂 Data: hf.co/datasets/Coh...
