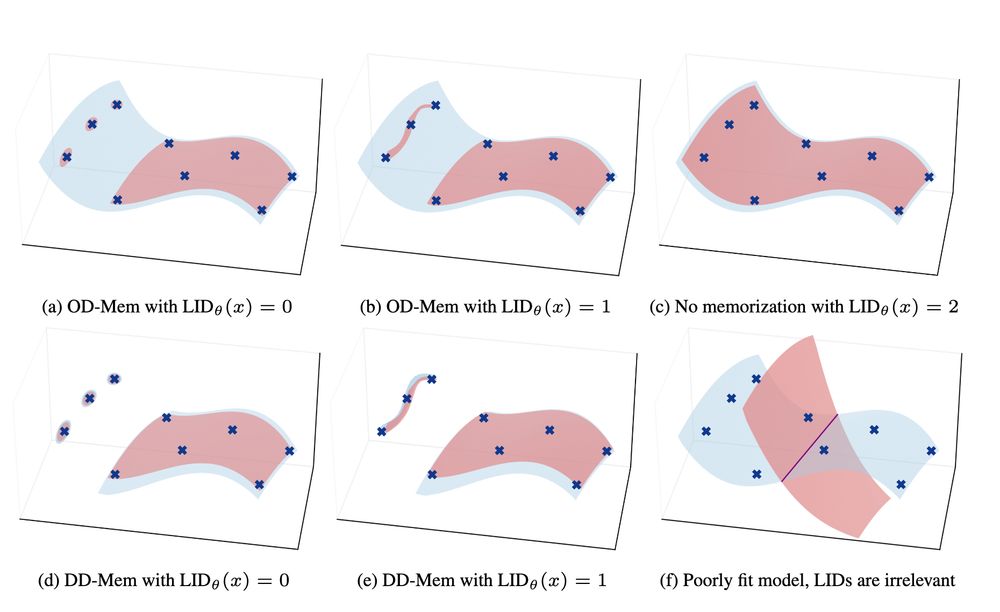

- The Super Weight: finds performance can be totally degraded when pruning a *single* weight - Mengxia Yu et al.

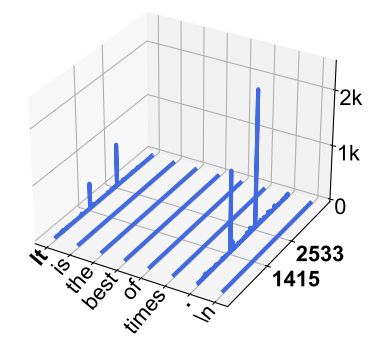

- Massive Activations in LLMs: finds some (crucial) activations have very high norm irrespective of context - Mingjie Sun et al.

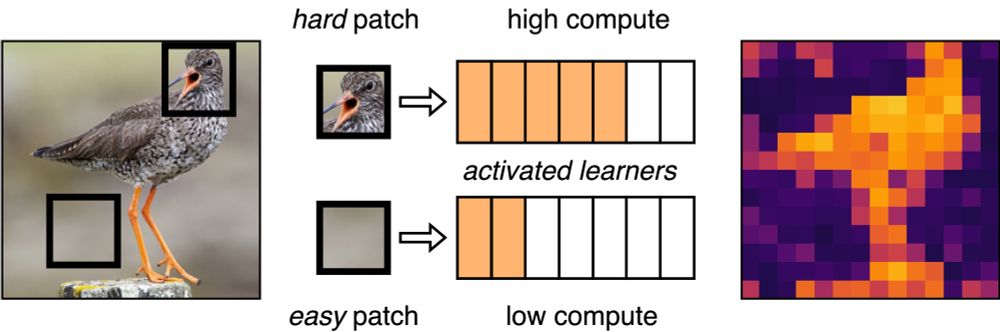

w/ @sscardapane.bsky.social @neuralnoise.com @bartoszWojcik

Soon #AAAI25

Link 👇

From "Restructuring Vector Quantization With The Rotation Trick" @ChristopherFifty et al.

Check out the method details in our EMNLP '24 paper: arxiv.org/abs/2406.11430

Very cool transformer-inspired architecture where linear layers are replaced with token-parameter attention (Pattention). This allows for efficient scaling by adding new parameters to the model.
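A rough sketch of the idea, not the paper's implementation: input tokens act as queries that attend over learnable key/value parameter tokens, so the layer can grow by appending more parameter tokens without changing its input or output dimensions. The names `pattention` and `grow`, the plain softmax normalization, and the random initialization of the new parameters are all assumptions made for illustration; the original work may use a different normalization and initialization scheme.

```python
import numpy as np


def softmax(scores, axis=-1):
    # Numerically stable softmax along the given axis.
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def pattention(x, key_params, value_params):
    # x:            (n_tokens, d_in)   input tokens, used as queries
    # key_params:   (n_params, d_in)   learnable key parameter tokens
    # value_params: (n_params, d_out)  learnable value parameter tokens
    # Plain softmax is an assumption here, used only to keep the sketch short.
    weights = softmax(x @ key_params.T)  # (n_tokens, n_params)
    return weights @ value_params        # (n_tokens, d_out)


def grow(key_params, value_params, n_new, rng):
    # Hypothetical helper: append n_new parameter tokens to both sets.
    # Small random init is an illustrative choice, not the paper's scheme.
    new_keys = rng.normal(scale=0.02, size=(n_new, key_params.shape[1]))
    new_values = rng.normal(scale=0.02, size=(n_new, value_params.shape[1]))
    return (np.concatenate([key_params, new_keys]),
            np.concatenate([value_params, new_values]))


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))          # 4 tokens, d_in = 8
keys = rng.normal(size=(16, 8))      # 16 parameter tokens
values = rng.normal(size=(16, 32))   # d_out = 32

out_small = pattention(x, keys, values)
keys_big, values_big = grow(keys, values, n_new=8, rng=rng)
out_big = pattention(x, keys_big, values_big)

# The output shape does not depend on how many parameter tokens exist.
print(out_small.shape, out_big.shape)
```

The point of the sketch is only the shape bookkeeping: going from 16 to 24 parameter tokens leaves the layer's interface untouched, which is what makes incremental scaling possible.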
