Contributing to the Chinese ML community.

huggingface.co/baidu/ERNIE-...

✨ Small MoE - Apache 2.0

✨ 128K context length for deep reasoning

✨ Efficient tool usage capabilities

huggingface.co/openbmb/Mini...

✨ 8B - Apache 2.0

✨ Hybrid reasoning model: deep reasoning + fast inference

✨ 5x faster on edge chips, 90% smaller (BitCPM)

✨ Trained on UltraClean + UltraChat v2 data

huggingface.co/datasets/m-a...

huggingface.co/papers/2509....

Testing LLMs on their ability to override biases & follow adversarial instructions.

✨ 8 challenge types

✨ 1,012 CN/EN Qs across 23 domains

✨ Human-in-the-loop + LLM-as-a-Judge

huggingface.co/collections/...

✨ 46B total / 2.5B active - Apache 2.0

✨ Dense-level performance at lower cost

✨ Trained on 22T tokens with a progressive curriculum

✨ 64K context length

Kimi K2 >>> Kimi K2-Instruct-0905🔥

huggingface.co/moonshotai/K...

✨ 32B activated / 1T total parameters

✨ Enhanced agentic coding intelligence

✨ Better frontend coding experience

✨ 256K context window for long-horizon tasks

huggingface.co/meituan-long...

Demo: huggingface.co/spaces/byted...

Model: huggingface.co/bytedance-re...

Paper: huggingface.co/papers/2508....

huggingface.co/collections/...

huggingface.co/spaces/zh-ai...

✨ Goal: by 2035, AI will deeply empower all sectors and reshape productivity & society

✨ Focus on 6 pillars:

>Science & Tech

>Industry

>Consumption

>Public welfare

>Governance

>Global cooperation

huggingface.co/openbmb/Mini...

huggingface.co/collections/...

✨ 1B · 2B · 4B · 8B · 14B · 38B | MoE → 20B-A4B · 30B-A3B · 241B-A28B 📄 Apache 2.0

✨ +16% reasoning performance, 4.05× speedup vs InternVL3

huggingface.co/internlm/Int...

✨ Efficient 8B LLM + 0.3B vision encoder

✨ Apache 2.0

✨ 5T tokens of multimodal pretraining, 50%+ from scientific domains

✨ Dynamic tokenizer for molecules & protein sequences

huggingface.co/collections/...

✨ 36B - Base & Instruct

✨ Apache 2.0

✨ Native 512K long context

✨ Strong reasoning & agentic intelligence

✨ 2 Base versions: with & without synthetic data

When I came back: Qwen still releasing

Respect!!🫡

Qwen-Image-Edit 🔥: the image editing version of Qwen-Image by Alibaba Qwen

huggingface.co/Qwen/Qwen-Im...

huggingface.co/collections/...

I’ve been tracking things closely, but July’s open-source wave still managed to surprise me.

Can’t wait to see what’s coming next! 🚀

They just released Qwen3-Coder-30B-A3B-Instruct on the Hub

huggingface.co/Qwen/Qwen3-C...

✨ Apache 2.0

✨ 30B total / 3.3B active (128 experts, 8 active per token)

✨ Native 256K context, extendable to 1M via YaRN

✨ Built for Agentic Coding

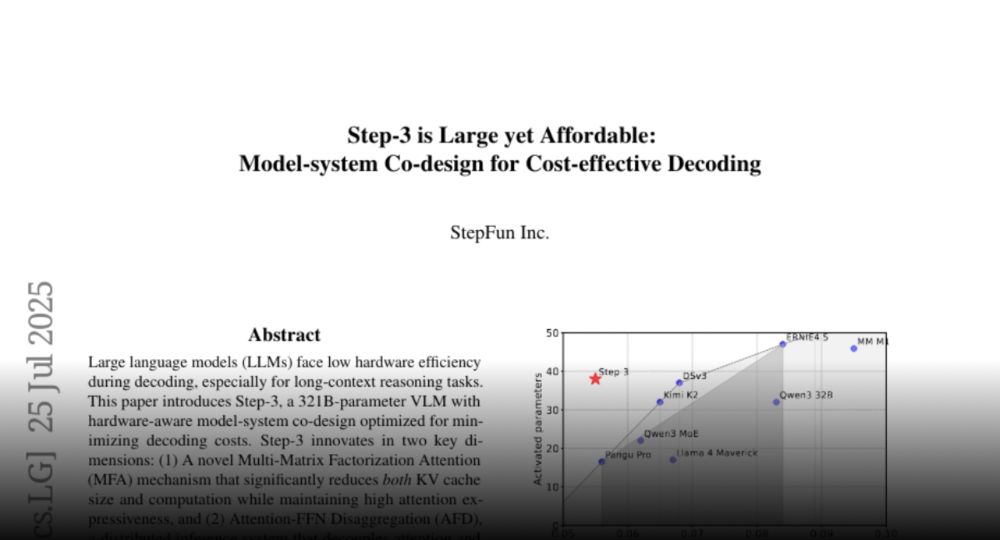

Model: huggingface.co/stepfun-ai/s...

Paper: huggingface.co/papers/2507....

huggingface.co/Qwen/Qwen3-3...

✨ 30B total / 3B active - Apache 2.0

✨ Native 256K context

✨ SOTA coding, alignment, agentic reasoning

huggingface.co/collections/...

✨ 1.5B - MIT license

✨ Runs on RTX 4090

✨ Truly unified architecture

huggingface.co/Qwen/Qwen3-3...

✨ 30B MoE / 3.3B active - Apache 2.0

✨ Strong gains in reasoning, math, coding, & multilingual tasks

✨ Native support for 256K long-context inputs

huggingface.co/Wan-AI/Wan2....

huggingface.co/Wan-AI/Wan2....

Built for intelligent agents with unified capabilities: reasoning, coding, tool use.

huggingface.co/collections/...

✨ 355B total / 32B active - MIT license

✨ Hybrid modes: thinking mode for complex tasks / non-thinking mode for instant replies