https://zhi-wen.net/

This family of 7B and 32B models represents:

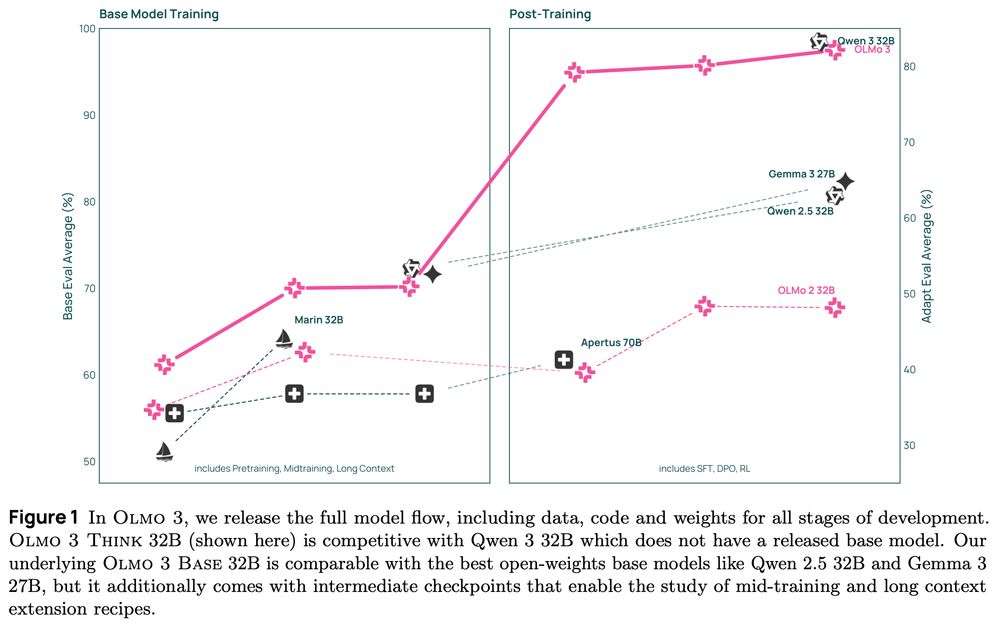

1. The best 32B base model.

2. The best 7B Western thinking & instruct models.

3. The first 32B (or larger) fully open reasoning model.

🗓️Dates: October 6-9, 2026

🏨Venue: Hilton San Francisco Union Square

Website and CFPs for papers and workshops coming up soon!

For example, I just spotted this beautiful website by Catherine Yeh: github.com/catherinesye...

I wouldn't say it's okay, but I'm not sure how to fix it.

www.interconnects.ai/p/burning-out

If you're interested in working on technical safeguards to create safe-by-design AI systems, check out the openings on our website and don't hesitate to reach out to our team!

job-boards.greenhouse.io/lawzero

Applications are due Dec 1: make sure you include a research statement!

jobs.careers.microsoft.com/global/en/jo...

📄 arxiv.org/abs/2510.14086 1/

@bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

One of the coolest papers I've read recently (in addition to QAlign/QUEST, which take similar approaches).

arxiv.org/abs/2510.14901

Continuing our interpretation of DINOv2, the second part of our study concerns the *geometry of concepts* and the synthesis of our findings toward a new representational *phenomenology*:

the Minkowski Representation Hypothesis

"Are language models worth it?"

Explains that the prior decade of his work on adversarial images, while it taught us a lot, isn't very applied; it's unlikely anyone is actually altering images of cats in scary ways.

Looking forward to seeing what people are thinking about controllable/safe generation, eval, diffusion LMs, interpretability, etc.

And we're still hiring applied research scientists at Mila! ⬇️

those with savior and superiority complexes, obsessed with sci-fi, blinded by dollar signs, devoid of empathy, and severely gullible.