We're hiring postdocs and senior researchers in AI/ML broadly, and in specific areas like test-time scaling and science of DL. Postdoc applications due Oct 22, 2025. Senior researcher applications considered on a rolling basis.

Links to apply: aka.ms/msrnyc-jobs

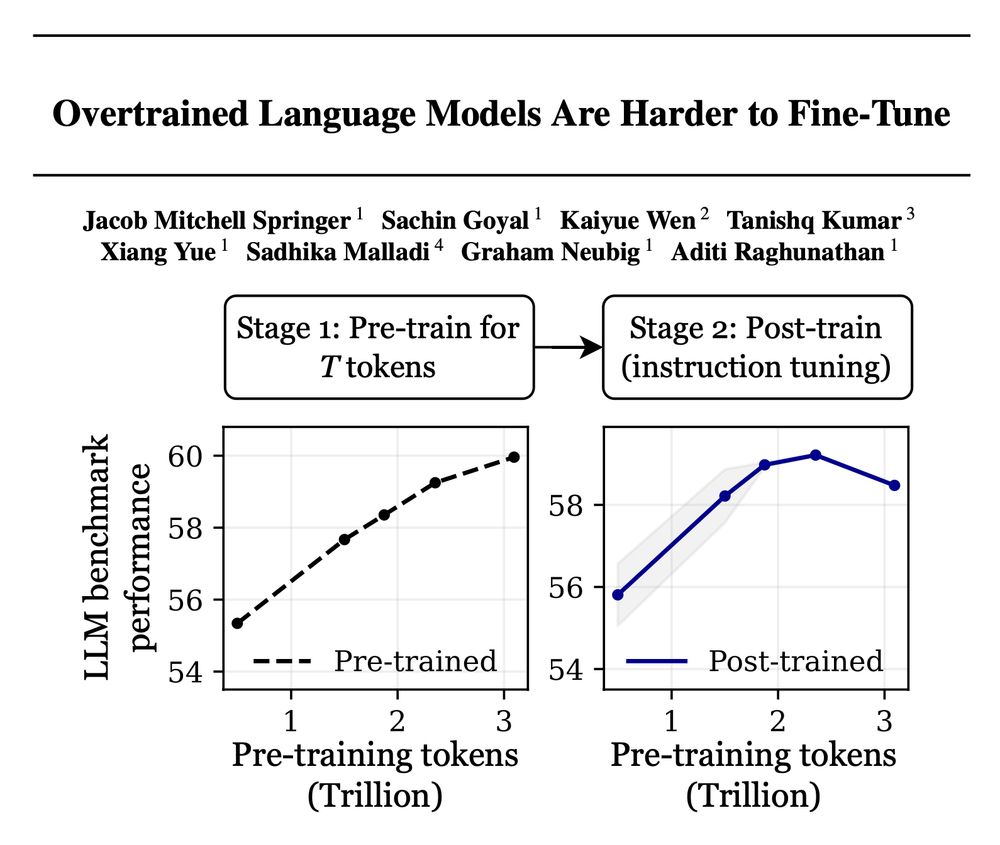

False! Scaling language models by adding more pre-training data can decrease your performance after post-training!

Introducing "catastrophic overtraining." 🥁🧵👇

arxiv.org/abs/2503.19206

1/10

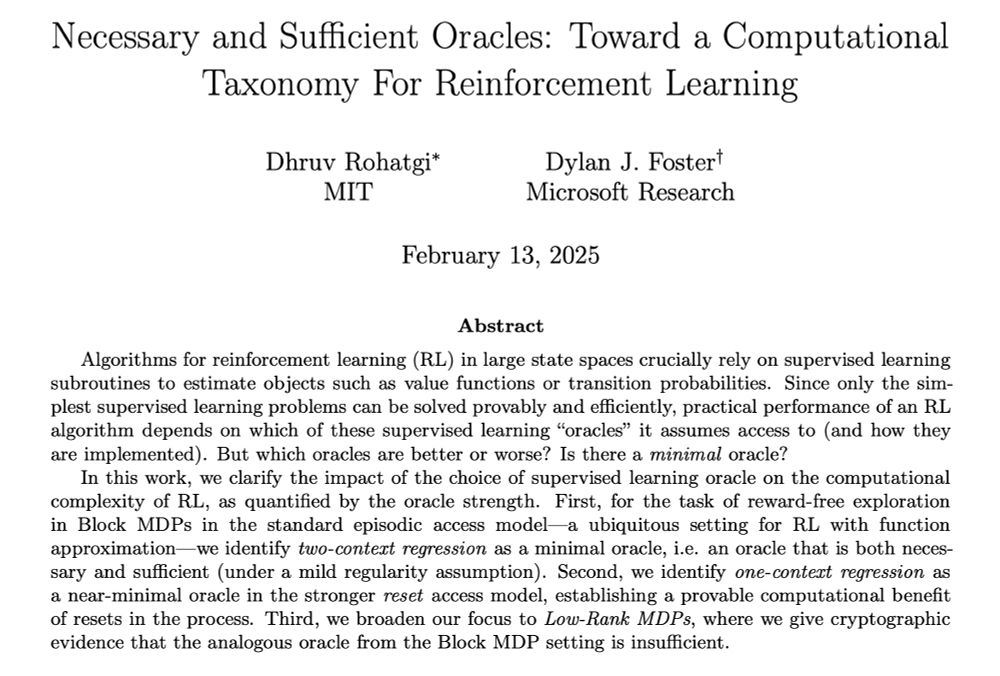

New paper led by my amazing intern Dhruv Rohatgi:

Necessary and Sufficient Oracles: Toward a Computational Taxonomy for Reinforcement Learning

arxiv.org/abs/2502.08632

1/

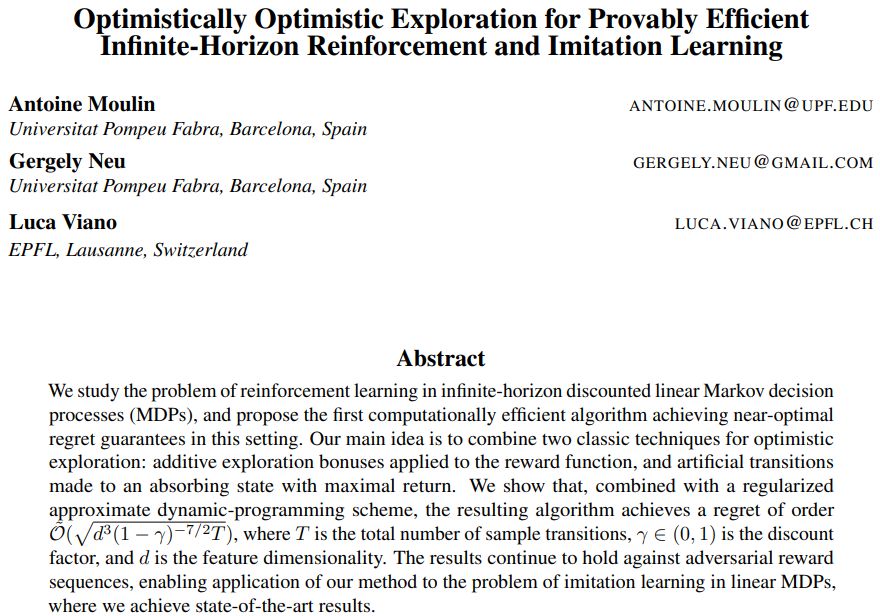

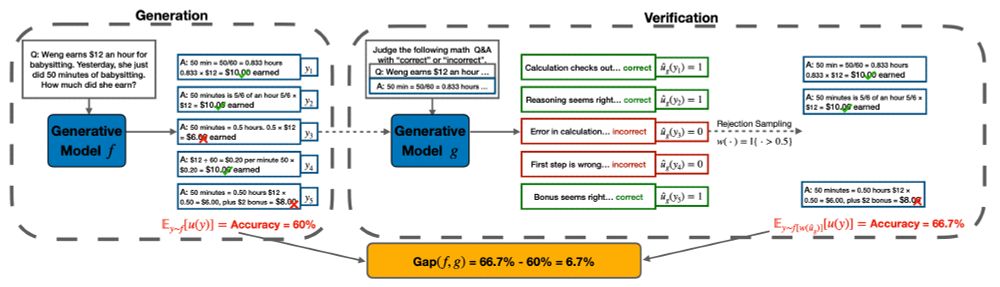

Across LLM families, tasks and mechanisms

This ability scales with pretraining, prefers CoT and non-QA tasks, and more in 🧵

alphaxiv.org/abs/2412.02674

@yus167.bsky.social @shamkakade.bsky.social

📈🤖

#NLP #ML

Happy to meet old and new friends and talk about all aspects of RL: data, environment structure, and reward! 😀

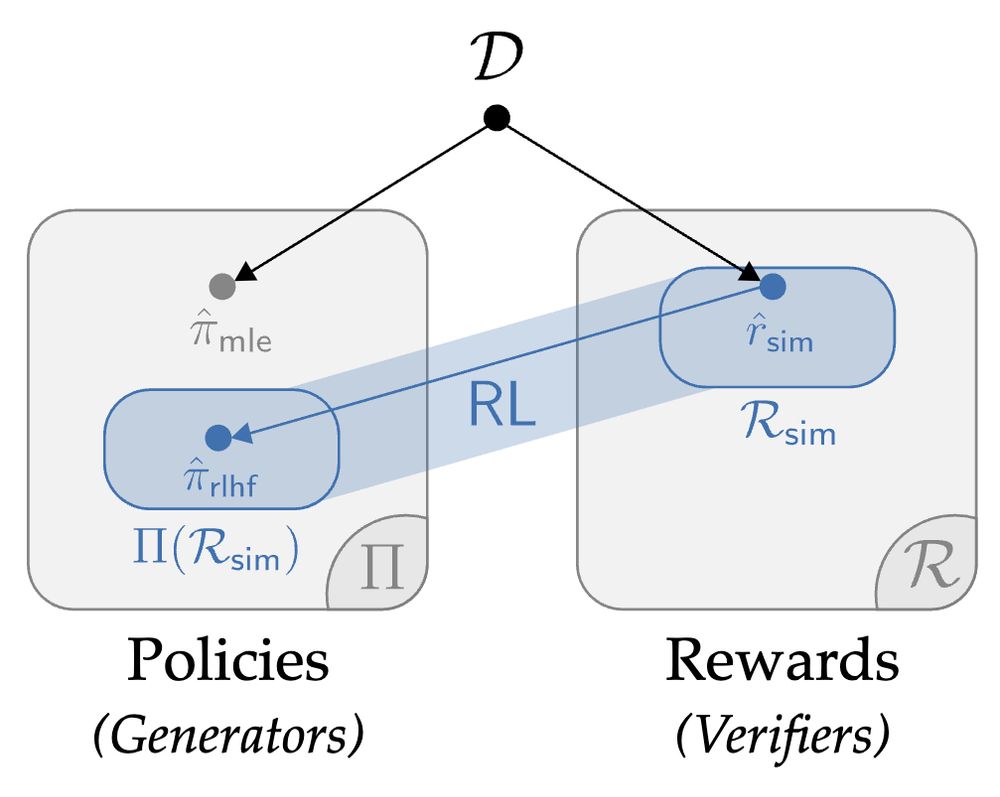

In the Wed 11am-2pm poster session I will present HyPO, the best of both worlds of offline and online RLHF: neurips.cc/virtual/2024...

Mind the Gap: Examining the Self-Improvement Capabilities of Large Language Models

https://arxiv.org/abs/2412.02674

go.bsky.app/QLTVEph

#AcademicSky

Logarithmic Neyman Regret for Adaptive Estimation of the Average Treatment Effect

https://arxiv.org/abs/2411.14341

I am a final-year PhD student from CMU Robotics. I work on humanoid control, perception, and behavior in both simulation and real life, using mostly RL:

🏃🏻PHC: zhengyiluo.com/PHC

💫PULSE: zhengyiluo.com/PULSE

🔩Omnigrasp: zhengyiluo.com/Omnigrasp

🤖OmniH2O: omni.human2humanoid.com

My research focuses on interactive AI, involving:

🤖 reinforcement learning,

🧠 foundation models, and

👩‍💻 human-centered AI.

Also a founding co-organizer of the MineRL competitions 🖤 Follow me for ML updates!
