bsky.app/profile/yus1...

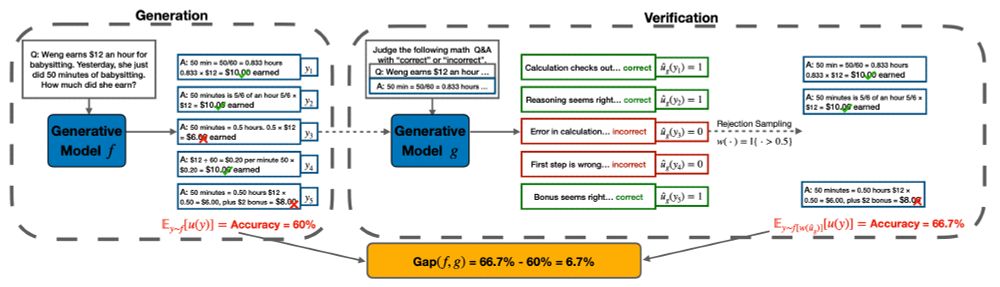

1. Model generates many candidate responses.

2. Model filters/reweights responses based on its verifications.

3. Distill the reweighted responses into a new model.

(2/9)
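The three steps above can be sketched as a toy loop. This is a minimal illustration and not the authors' implementation. It assumes the "model" is just a categorical distribution over a few answer strings, that `verify` is a hypothetical stand-in for the model's own verification, and that "distillation" is weighted maximum likelihood rather than fine-tuning a network. All names here are made up for the example.

```python
import random
from collections import Counter

def generate(model, n, rng):
    """Step 1: sample n candidate responses from the model's distribution."""
    responses = list(model)
    weights = [model[r] for r in responses]
    return rng.choices(responses, weights=weights, k=n)

def verify(response):
    """Step 2 (hypothetical verifier): return a weight for one response."""
    # Toy task "what is 2 + 2?". In the setting described above, the model
    # itself would produce this score; here it is hard-coded for illustration.
    return 1.0 if response == "4" else 0.1

def distill(candidates, scores):
    """Step 3: fit a new categorical model to the reweighted candidates."""
    mass = Counter()
    for response, score in zip(candidates, scores):
        mass[response] += score  # each sample counts in proportion to its score
    total = sum(mass.values())
    return {response: m / total for response, m in mass.items()}

def self_improve_round(model, n=1000, seed=0):
    """Run one generate -> verify/reweight -> distill round."""
    rng = random.Random(seed)
    candidates = generate(model, n, rng)
    scores = [verify(r) for r in candidates]
    return distill(candidates, scores)

base_model = {"3": 0.4, "4": 0.3, "5": 0.3}
new_model = self_improve_round(base_model)
```

In this toy setup the new model puts more probability on the answer the verifier prefers than the base model did. How much it improves depends entirely on how reliable the verifier is.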
