t.co/jdJrzRegL0

#ML #AI

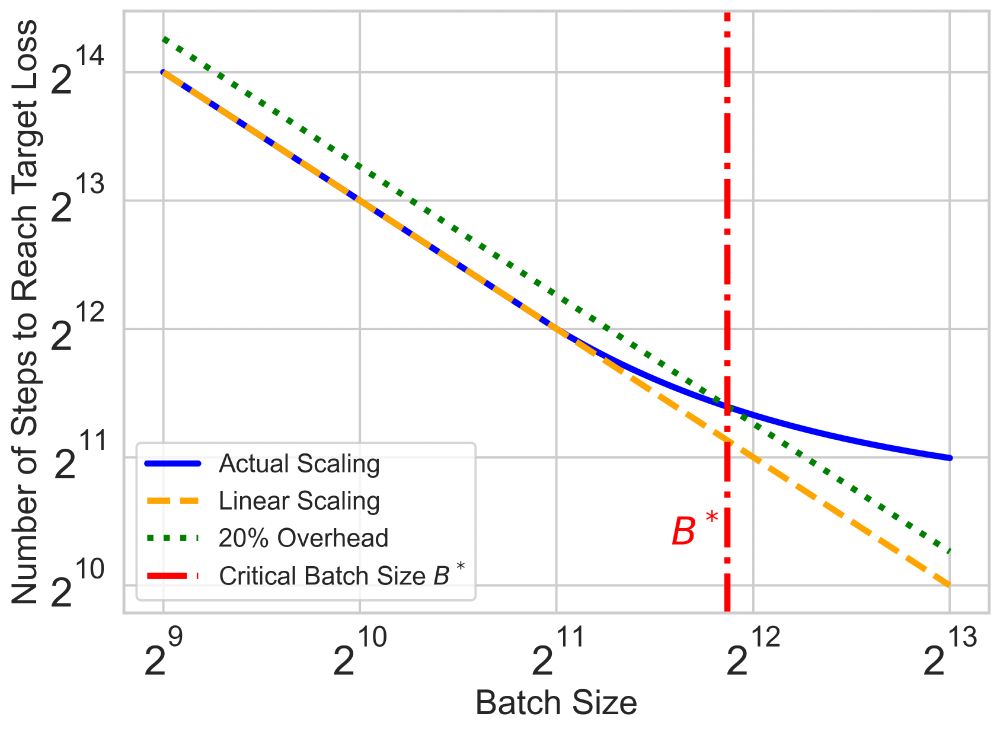

We propose a methodology to approach these questions by showing that we can predict the performance across datasets and losses with simple shifted power law fits.
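To give a rough sense of what a shifted power law fit involves, here is a minimal Python sketch. It assumes the form L(x) = E + A·x^(-α), where x is some scale variable and E is the shift (an irreducible offset). The post does not state the exact parametrization, which quantities are related, or how the fits are done. The function names and the fitting approach here (a grid search over α with closed-form least squares for E and A) are therefore hypothetical illustration choices and not the authors' method.

```python
# Hypothetical sketch: fit L(x) = E + A * x**(-alpha) with the standard library only.
# For a fixed alpha the model is linear in (E, A), so we solve that part in closed
# form and grid-search alpha, keeping the alpha with the smallest squared error.

def shifted_power_law(x, E, A, alpha):
    """Offset E plus a power law in x with exponent -alpha (assumed form)."""
    return E + A * x ** (-alpha)

def _linear_fit(z, y):
    """Ordinary least squares for y = E + A * z; returns (E, A)."""
    n = len(z)
    mean_z = sum(z) / n
    mean_y = sum(y) / n
    s_zz = sum((zi - mean_z) ** 2 for zi in z)
    s_zy = sum((zi - mean_z) * (yi - mean_y) for zi, yi in zip(z, y))
    A = s_zy / s_zz
    E = mean_y - A * mean_z
    return E, A

def fit_shifted_power_law(xs, ys, alphas=None):
    """Return (E, A, alpha) minimizing the sum of squared residuals over a grid of alphas."""
    if alphas is None:
        alphas = [i / 100 for i in range(1, 201)]  # alpha in 0.01 .. 2.00
    best = None
    for alpha in alphas:
        z = [x ** (-alpha) for x in xs]
        E, A = _linear_fit(z, ys)
        sse = sum((y - shifted_power_law(x, E, A, alpha)) ** 2 for x, y in zip(xs, ys))
        if best is None or sse < best[0]:
            best = (sse, E, A, alpha)
    _, E, A, alpha = best
    return E, A, alpha

# Usage on synthetic, noise-free data generated with E=2.0, A=3.0, alpha=0.5.
xs = [1, 2, 4, 8, 16, 32, 64, 128]
ys = [shifted_power_law(x, 2.0, 3.0, 0.5) for x in xs]
E, A, alpha = fit_shifted_power_law(xs, ys)
prediction = shifted_power_law(1024, E, A, alpha)  # extrapolate beyond the fitted range
print(E, A, alpha, prediction)
```

Splitting the fit this way avoids needing an initial guess for E and A. With real, noisy measurements a finer α grid or a proper nonlinear optimizer would usually be preferable.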