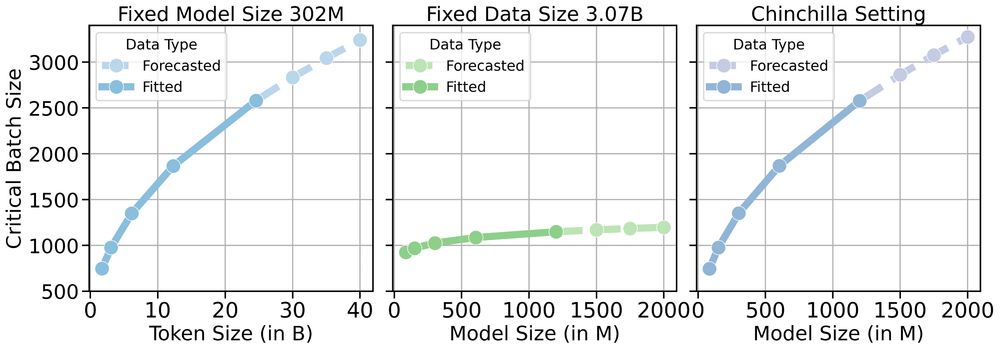

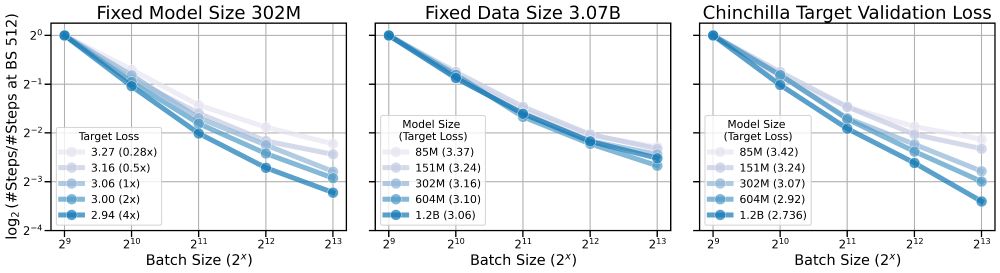

📈 Critical batch size (CBS) increases as dataset size grows

🤏 CBS depends only weakly on model size

Data size, not model size, drives parallel efficiency for large-scale pre-training.