We're a research / engineering team working together in industries like health and logistics to ship ML tools that drastically improve productivity. If you're interested in ML and RL work that matters, come join us 😀

* The first RLVR run uses allenai/RLVR-GSM-MATH-IF-Mixed-Constraints

* The final RLVR run uses allenai/RLVR-MATH for targeted MATH improvement

Short 🧵

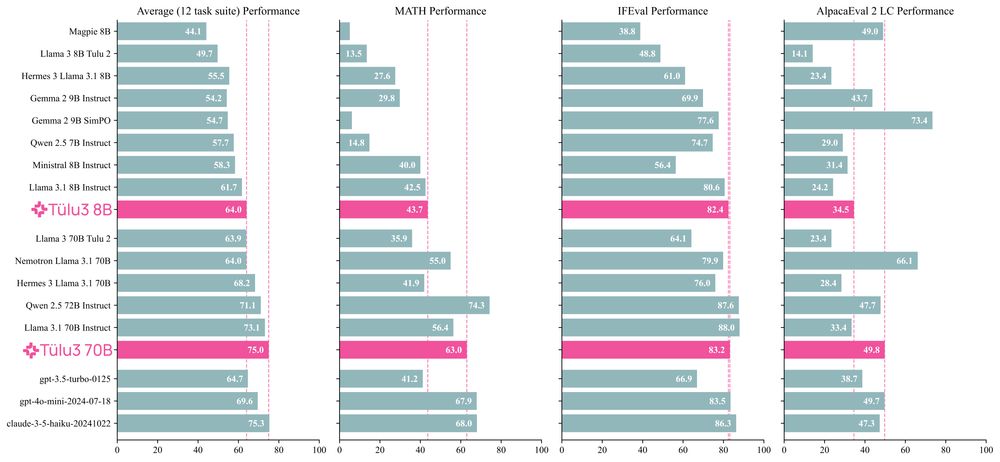

We are happy to "quietly" release our latest GRPO-trained Tulu 3.1 model, which is considerably better on MATH and GSM8K!

We also trained a new OLMoE-1B-7B-0125, this time using the Tulu 3 recipe. Very exciting that RLVR improved GSM8K by almost 10 points for OLMoE 🔥

A quick 🧵

When directly minimizing the KL loss, kl3 just appears much more numerically stable. And the ≥0 guarantee here is also really nice (kl1 can go negative).
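
To make the kl1 vs. kl3 comparison concrete, here is a minimal sketch. It assumes the names follow the k1/k3 per-sample estimators from John Schulman's "Approximating KL Divergence" note (the post itself does not define them), and the function names `kl1` and `kl3` are purely illustrative, not taken from any training codebase. Here `logq` is the log-prob under the sampling policy and `logp` the log-prob under the reference.

```python
import math


def kl1(logp: float, logq: float) -> float:
    """Naive per-sample estimate of KL(q || p) for x ~ q: log q(x) - log p(x).

    Unbiased, but any single sample can be negative.
    """
    return logq - logp


def kl3(logp: float, logq: float) -> float:
    """Per-sample estimate (r - 1) - log r, with r = p(x) / q(x) and x ~ q.

    Also unbiased, and never negative because log r <= r - 1.
    """
    log_r = logp - logq
    # expm1(log_r) computes r - 1 accurately even when r is close to 1.
    return math.expm1(log_r) - log_r


# Hypothetical sample: the reference assigns the token more mass than the policy.
logq = math.log(0.2)  # policy log-prob (assumed value)
logp = math.log(0.4)  # reference log-prob (assumed value)
print("kl1:", kl1(logp, logq))  # negative for this sample
print("kl3:", kl3(logp, logq))  # non-negative
```

Under these assumptions, a loss built from kl3 has a per-sample floor at zero, which is one plausible reason it behaves better when minimized directly.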

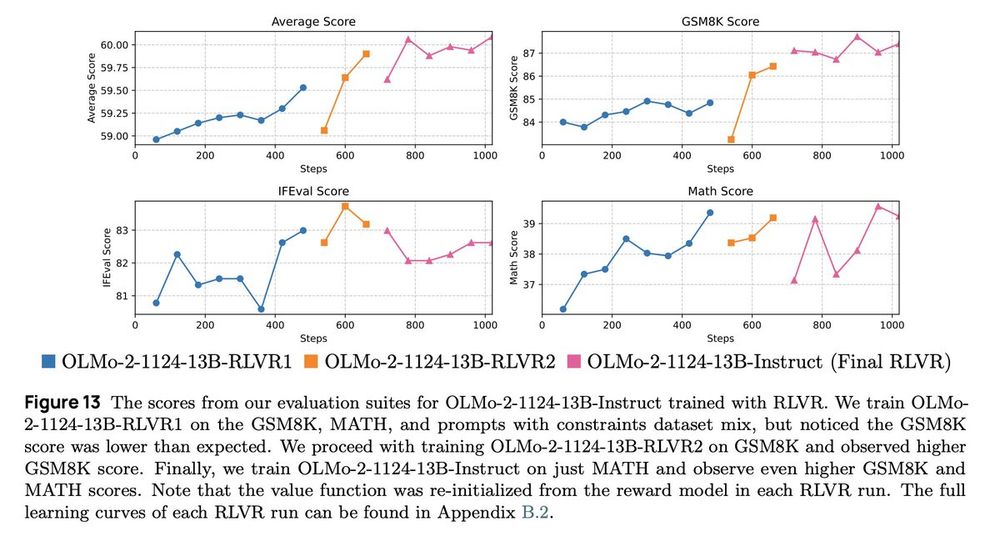

This time, we applied RLVR iteratively! Our initial RLVR checkpoint on the RLVR dataset mix showed a low GSM8K score, so we did another RLVR run on GSM8K only and another on MATH only 😆.

And it works! A thread 🧵 1/N

SmolLM from HuggingFace trained with part of the Tülu 3 recipe we released a month ago :D.

Cool numerical explorations of post-training stuff. Nothing crazy.

SultanR/SmolTulu-1.7b-Instruct

https://buff.ly/41Epauv

I would love to chat about LLM post-training research, the faculty job market, and anything in between - ping me if you'd like to meet up!

You can also find me at the following:

Here are the gains/losses of allenai/OLMo-2-1124-13B-Instruct (the RLVR checkpoint) over allenai/OLMo-2-1124-13B-DPO. More to share soon!

Using these, we got >6x speed-ups compared to the original CleanRL implementations.

github.com/pytorch-labs...

Costa Huang for CleanRL (I couldn't manage to @ him...)

bsky.app/profile/vwxy...

Thread.
