As always, we uploaded all the intermediate RL checkpoints

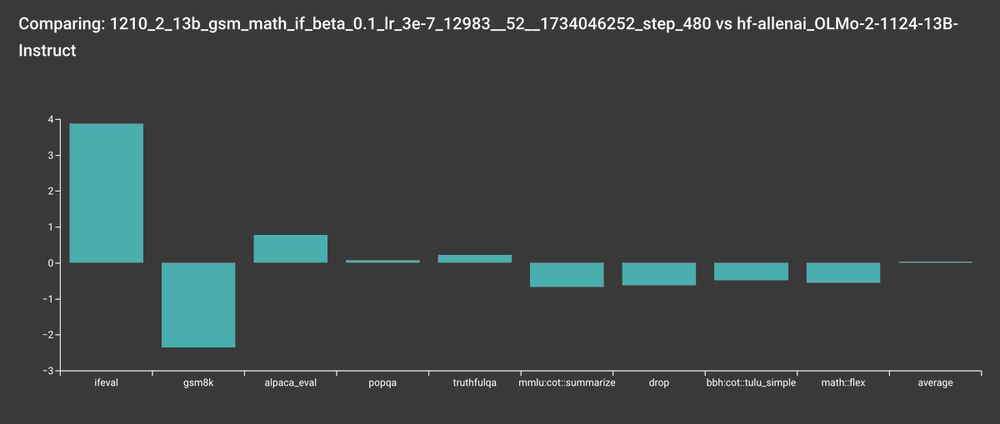

* The first RLVR run uses allenai/RLVR-GSM-MATH-IF-Mixed-Constraints

* The final RLVR run uses allenai/RLVR-MATH for targeted MATH improvement

Short 🧵

See our updated collection here: huggingface.co/collections/...

The PPO run's MATH score is more consistent with the Llama-3.1-Tulu-3-8B model, but GRPO got higher scores.

We are happy to "quietly" release our latest GRPO-trained Tulu 3.1 model, which is considerably better on MATH and GSM8K!

huggingface.co/collections/...

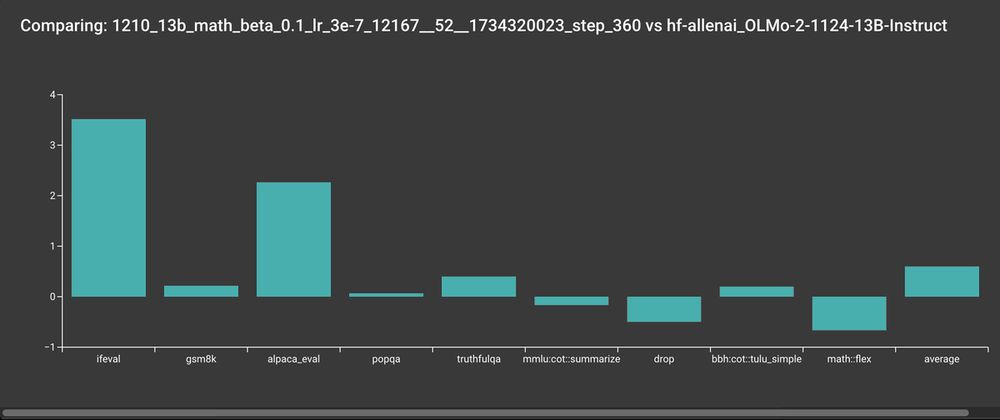

Huge gains on GSM8K, DROP, MATH, and AlpacaEval.

We also trained a new OLMoE-1B-7B-0125, this time using the Tulu 3 recipe. Very exciting that RLVR improved GSM8K by almost 10 points for OLMoE 🔥

A quick 🧵

Here is an example of the PPO training curve (using kl1). As you can see, kl3 > kl2 > kl in scale.

When directly minimizing the KL loss, kl3 just appears much more numerically stable. And the ≥ 0 guarantee here is also really nice (kl1 can go negative on individual samples).
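
For readers who want to see the difference concretely, here is a minimal sketch of the three per-token estimators. It assumes that kl1/kl2/kl3 correspond to the k1/k2/k3 estimators from John Schulman's "Approximating KL Divergence" note; the function name `kl_estimators` and the sample values are made up for illustration and are not taken from our training code.

```python
import math


def kl_estimators(logprob_new, logprob_ref):
    """Per-token KL estimates between the current and the reference policy.

    Assumption: kl1/kl2/kl3 are the k1/k2/k3 estimators from Schulman's note.
    Both arguments are log-probabilities of the same sampled token.
    """
    d = logprob_new - logprob_ref  # log(pi_new / pi_ref)
    kl1 = d                        # unbiased, but can be negative for a single sample
    kl2 = d * d / 2.0              # never negative, biased
    kl3 = math.expm1(-d) + d       # (r - 1) - log(r) with r = pi_ref / pi_new; never negative
    return kl1, kl2, kl3


# Hypothetical log-probs: the token is less likely, equally likely, and more likely
# under the current policy than under the reference policy.
for lp_new, lp_ref in [(-1.5, -1.0), (-1.0, -1.0), (-0.5, -1.0)]:
    print(kl_estimators(lp_new, lp_ref))
```

In this sketch kl1 flips sign with the log-ratio, while kl2 and kl3 stay at or above zero and hit zero only when the two policies agree on the token, which is the property that makes kl3 pleasant to minimize directly.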

arxiv.org/abs/2501.00656

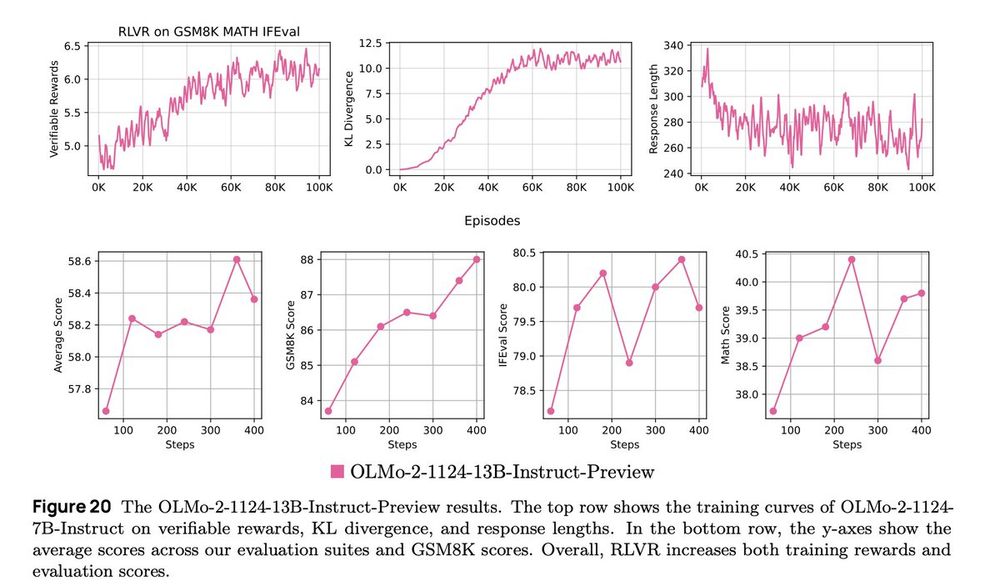

Our final RLVR checkpoint does look pretty good 😊

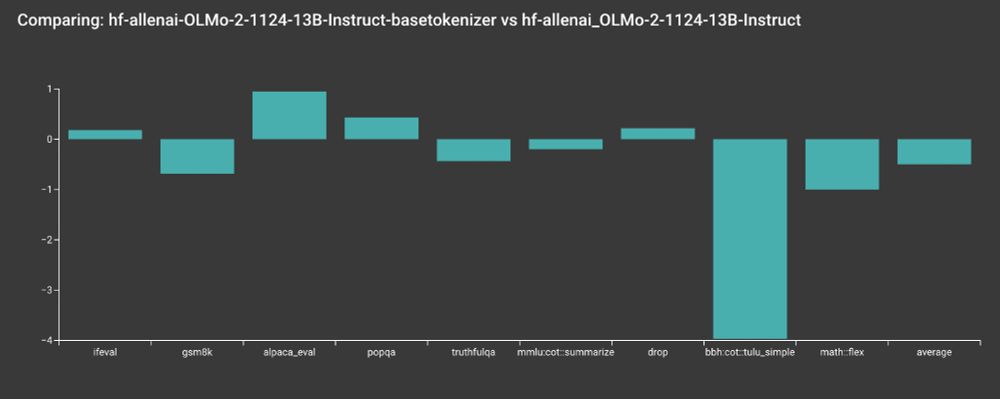

Our initial reproduction attempt showed regressions on SFT / DPO / RLVR.

So, we decided to re-train the models using the correct tokenizer.
