--> https://valeoai.github.io/ <--

We trained a 1.2B parameter model on 1,800+ hours of raw driving videos.

No labels. No maps. Just pure observation.

And it works! 🤯

🧵👇 [1/10]

Check it out 👌

We’ll present 5 papers about:

💡 self-supervised & representation learning

🌍 3D occupancy & multi-sensor perception

🧩 open-vocabulary segmentation

🧠 multimodal LLMs & explainability

valeoai.github.io/posts/iccv-2...

Today, Corentin Sautier is defending his PhD on "Learning Actionable LiDAR Representations without Annotations".

Good luck! 🚀

His thesis «Learning Actionable LiDAR Representations w/o Annotations» covers the papers BEVContrast (learning self-sup LiDAR features), SLidR, ScaLR (distillation), UNIT and Alpine (solving tasks w/o labels).

All hands and hearts up in the room.

Honored to welcome @gabrielacsurka.bsky.social today to speak about the amazing work at @naverlabseurope.bsky.social towards 3D Foundation Models

Today, @bjoernmichele.bsky.social is defending his PhD on "Domain Adaptation for 3D Data"

Best of luck! 🚀

Andrei Bursuc @abursuc.bsky.social

Anh-Quan Cao @anhquancao.bsky.social

Renaud Marlet

Eloi Zablocki @eloizablocki.bsky.social

@iccv.bsky.social

iccv.thecvf.com/Conferences/...

🤖 🚗

We're excited to present our latest research and connect with the community.

#CoRL2025

The project kick-off is today!

ELLIOT is a €25M #HorizonEurope project launching July 2025 to build open, trustworthy Multimodal Generalist Foundation Models.

30 partners, 12 countries, EU values.

🔗 Press release: apigateway.agilitypr.com/distribution...

The European Commission decided to extend the duration of our Lighthouse on Secure and Safe AI. We will now run for an additional 12 months until August 2026.

Find more details in the official press release:

elsa-ai.eu/official-ext...

Congratulations to the network!

With a non-linear path, MOCA has been accepted at #TMLR and presented in the TMLR poster session at #iclr2025

MOCA ☕ - Predicting Masked Online Codebook Assignments w/ @spyrosgidaris.bsky.social, O. Simeoni, A. Vobecky, @matthieucord.bsky.social, N. Komodakis, @ptrkprz.bsky.social #TMLR #ICLR2025

Grab a ☕ & brace for a story & a 🧵

We leverage meta-learning-like pseudo-tasks w/ pseudo-labels.

Kudos @ssirko.bsky.social 👇

#iccv2025

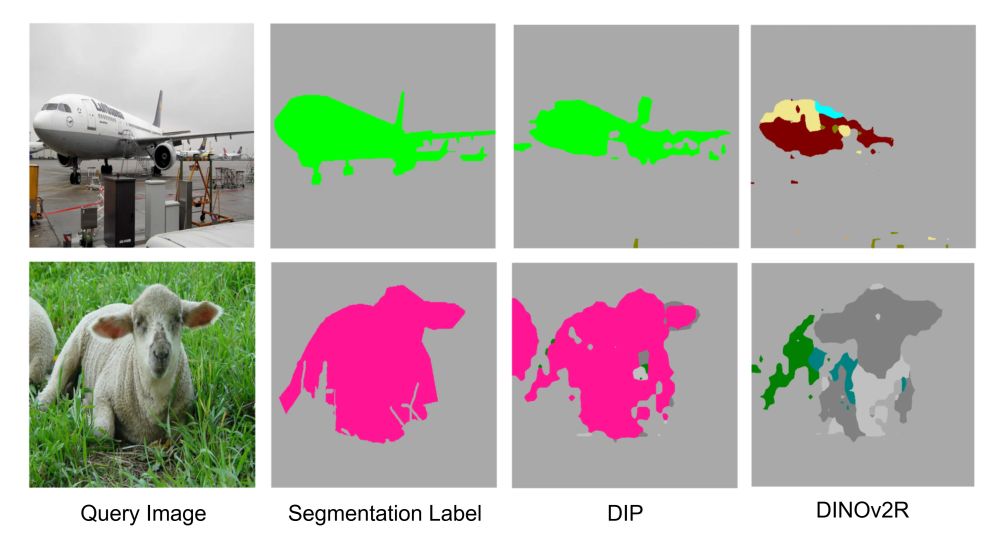

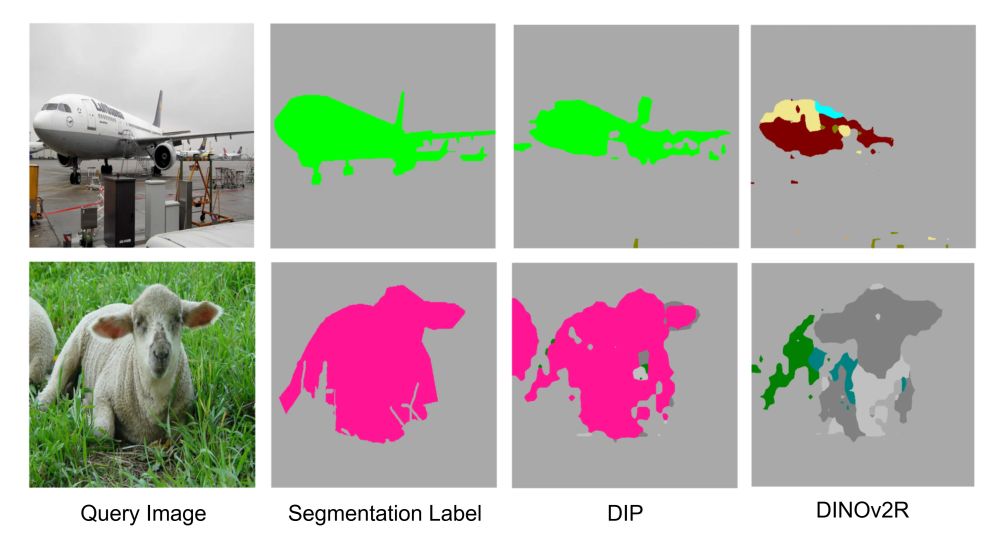

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

Check out DIP, an effective post-training strategy by @ssirko.bsky.social @spyrosgidaris.bsky.social

@vobeckya.bsky.social @abursuc.bsky.social and Nicolas Thome 👇

#iccv2025

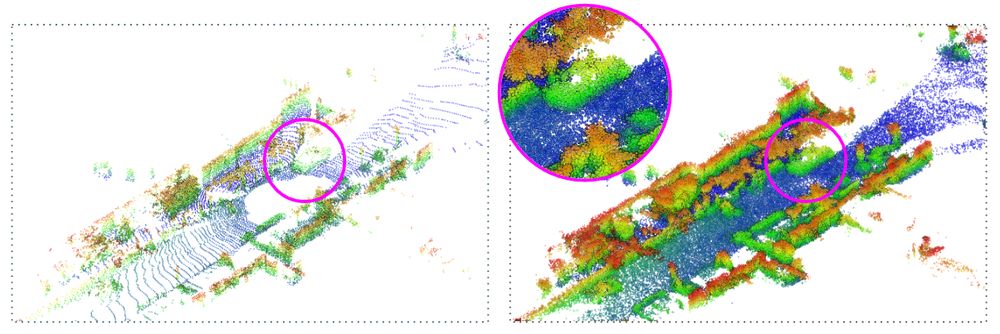

tl;dr: LiDPM - a point diffusion strategy for scene completion from outdoor LiDAR point clouds.

Check out the paper and code below if you can't make it for the poster.

Project page: astra-vision.github.io/LiDPM/

w/ @gillespuy.bsky.social, @alexandreboulch.bsky.social, Renaud Marlet, Raoul de Charette

Also, see our poster at 3pm in the Caravaggio room and AMA 😉

Paper: arxiv.org/abs/2506.11136

Project Page: jafar-upsampler.github.io

Github: github.com/PaulCouairon...

Great talks, great posters, and great to connect with the French & European vision community.

Kudos to the organizers, hoping that it returns next year! 🤞

#CVPR2025 @cvprconference.bsky.social

🔗 afrif.irisa.fr?page_id=54

Find out more below 🧵

valeoai.github.io/posts/2025-0...

This is also an excellent occasion to fit all team members in a photo 📸

Check out our blog post to know more about our 7 papers: valeoai.github.io/posts/2024-1...

🧵👇
