--> https://valeoai.github.io/ <--

Check it out 👌

tl;dr: a new method for understanding and controlling how MLLMs adapt during fine-tuning

by: P. Khayatan, M. Shukor, J. Parekh, A. Dapogny, @matthieucord.bsky.social

📄: arxiv.org/abs/2501.03012

tl;dr: a simple trick to boost open-vocabulary semantic segmentation by identifying class expert prompt templates

by: Y. Benigmim, M. Fahes, @tuanhungvu.bsky.social, @abursuc.bsky.social, R. de Charette

📄: arxiv.org/abs/2504.10487

tl;dr: self-supervised learning of temporally consistent representations from video w/ motion cues

by: M. Salehi, S. Venkataramanan, I. Simion, E. Gavves, @cgmsnoek.bsky.social, Y. Asano

📄: arxiv.org/abs/2506.08694

tl;dr: a module for 3D occupancy learning that enforces 2D-3D consistency through differentiable Gaussian rendering

by: L. Chambon, @eloizablocki.bsky.social, @alexandreboulch.bsky.social, M. Chen, M. Cord

📄: arxiv.org/abs/2502.05040

We’ll present 5 papers about:

💡 self-supervised & representation learning

🌍 3D occupancy & multi-sensor perception

🧩 open-vocabulary segmentation

🧠 multimodal LLMs & explainability

valeoai.github.io/posts/iccv-2...

All hands and hearts up in the room.

Honored to welcome @gabrielacsurka.bsky.social today to speak about the amazing work of @naverlabseurope.bsky.social towards 3D Foundation Models

Today, @bjoernmichele.bsky.social is defending his PhD on "Domain Adaptation for 3D Data"

Best of luck! 🚀

📄 Paper: arxiv.org/abs/2412.06491

by Y. Xu, @yuanyinnn.bsky.social, @eloizablocki.bsky.social, @tuanhungvu.bsky.social, @alexandreboulch.bsky.social, M. Cord

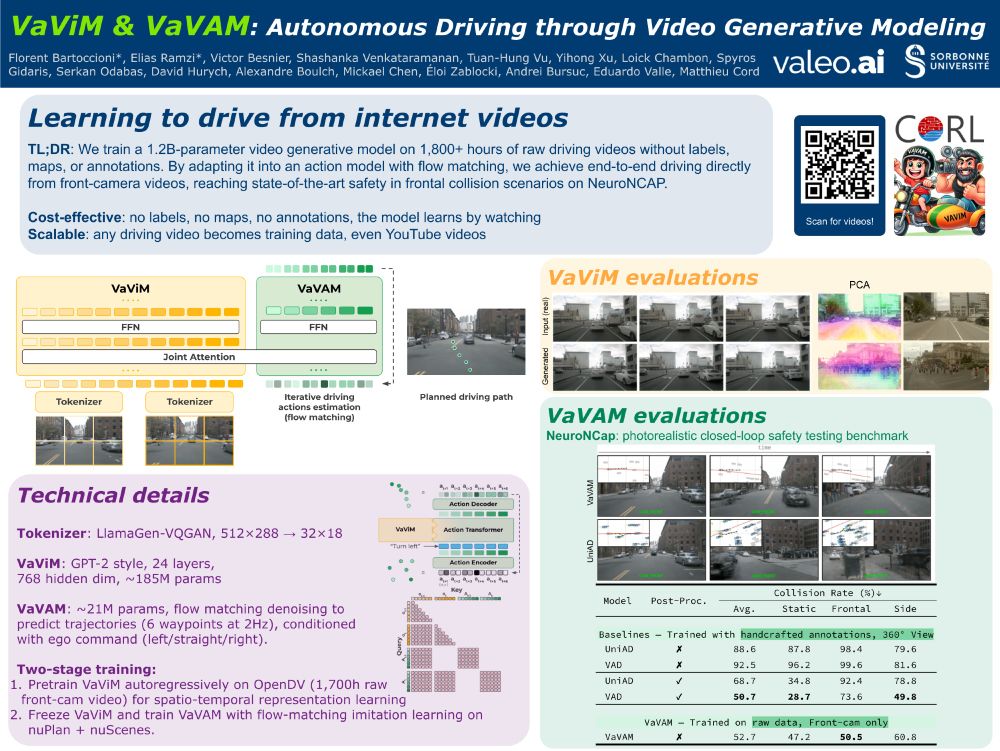

🔗 Project page: valeoai.github.io/vavim-vavam/

📄 Paper: arxiv.org/abs/2502.15672

💻 Code: github.com/valeoai/Vide...

by F. Bartoccioni, E. Ramzi, et al.

🤖 🚗

We're excited to present our latest research and connect with the community.

#CoRL2025

Great talks, great posters, and great to connect with the French & European vision community.

Kudos to the organizers, hoping that it returns next year! 🤞

#CVPR2025 @cvprconference.bsky.social

by S. Gidaris, A. Bursuc, O. Simeoni, A. Vobecky, N. Komodakis, M. Cord, P. Pérez

Unify mask-and-predict & contrastive SSL objectives -> better performance w/ 3x faster training

by L. Le Boudec, E. de Bézenac, @louisserrano.bsky.social, R. D. Regueiro-Espino, @yuanyinnn.bsky.social, P. Gallinari

A physics-informed neural PDE solver capable of solving a distribution of PDE instances

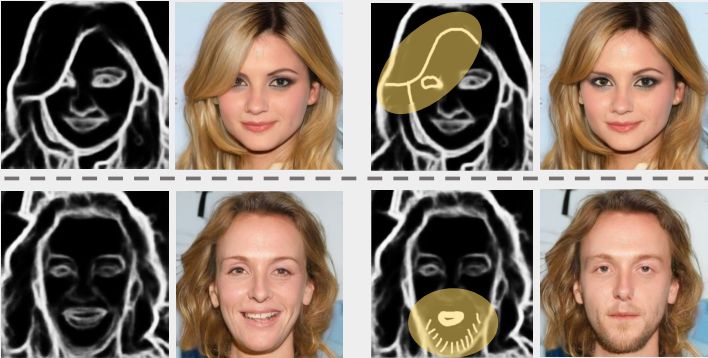

by E. Abdelrahman, L. Zhao, @vtaohu.bsky.social, @matthieucord.bsky.social, @ptrkprz.bsky.social, M. Elhoseiny

A diffusion framework to generate high-quality RGB images more efficiently than Stable Diffusion

Boost VLM perf by tuning an LLM to reason on its outputs! It's black-box🔒 & efficient⚡ (< 7h on 1 GPU)

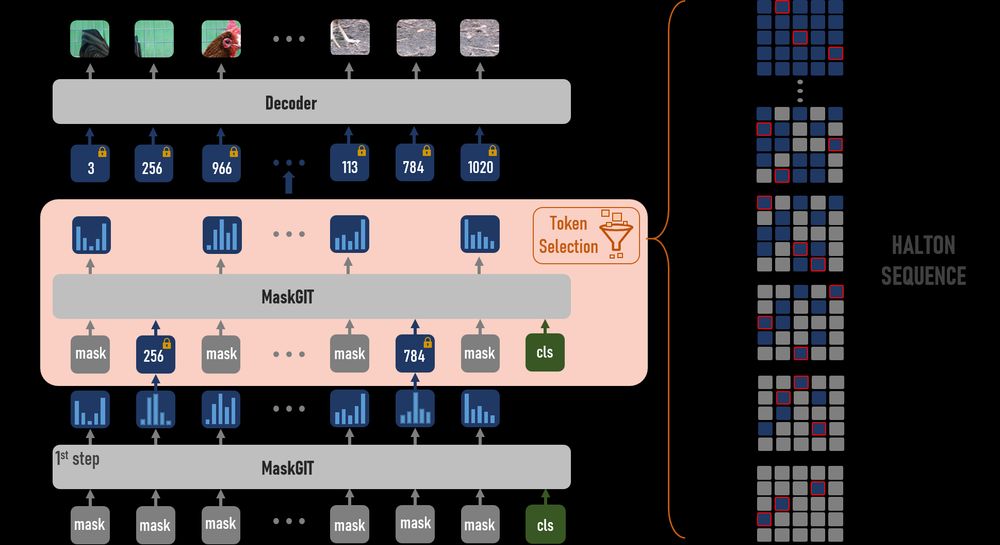

by V. Besnier, @mickaelchen.bsky.social, D. Hurych, E. Valle, @matthieucord.bsky.social

⭐️Halton Scheduler⭐️ fixes the original MaskGIT’s sampling flaws by using a low-discrepancy sequence to distribute token selection uniformly across the image🚀
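
To make "low-discrepancy token selection" concrete, here is a minimal sketch of the general idea, not the authors' implementation: points of a 2D Halton sequence (bases 2 and 3) are snapped onto a token grid, already-visited cells are skipped, and the resulting permutation decides which tokens get unmasked first. The 8x8 grid, the choice of bases, the skip-duplicates rule and the equal-chunk schedule in the hypothetical helper `split_into_steps` are all assumptions made for illustration.

```python
def radical_inverse(index: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-`base` digits of `index` around the radix point."""
    result, scale = 0.0, 1.0
    while index > 0:
        scale /= base
        result += scale * (index % base)
        index //= base
    return result


def halton_token_order(height: int, width: int) -> list[tuple[int, int]]:
    """Order the cells of a height x width token grid by a 2D Halton sequence.

    Each Halton point (x, y) in [0, 1)^2 is snapped to the grid cell it falls in.
    Cells already visited are skipped, so the result is a permutation of all cells
    whose prefixes stay spread out over the whole grid.
    """
    order: list[tuple[int, int]] = []
    seen: set[tuple[int, int]] = set()
    i = 1  # start at 1 to skip the degenerate point (0, 0)
    while len(order) < height * width:
        x, y = radical_inverse(i, 2), radical_inverse(i, 3)
        cell = (int(y * height), int(x * width))  # (row, col)
        if cell not in seen:
            seen.add(cell)
            order.append(cell)
        i += 1
    return order


def split_into_steps(order: list[tuple[int, int]], num_steps: int) -> list[list[tuple[int, int]]]:
    """Hypothetical schedule: unmask roughly equal-sized chunks of the Halton order at each step."""
    n = len(order)
    bounds = [round(k * n / num_steps) for k in range(num_steps + 1)]
    return [order[bounds[k]:bounds[k + 1]] for k in range(num_steps)]


order = halton_token_order(8, 8)
steps = split_into_steps(order, 8)
```

Because consecutive Halton points land far apart, each chunk in `steps` covers the image evenly instead of clustering, which is the property the post highlights. A real sampler would also vary the chunk sizes over the decoding steps.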

Find out more below 🧵

valeoai.github.io/posts/2025-0...

More to come! 🚀

Retweet if you’re excited, and follow @valeoai.bsky.social for updates! 💚

🙏 Thanks for reading!

[10/10]

🔄 Validated through closed-loop testing using NeuroNCAP

Our model achieves:

🏆 SOTA on the NeuroNCAP safety benchmark in the frontal scenario

🤔 But scaling is inconsistent: see more in the paper and project page

[7/10]

VaVAM’s action module draws from π0: a flow matching approach for action prediction

Kudos to the π0 team! (and to @remicadene.bsky.social)

[6/10]
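
For readers new to flow matching, here is a minimal sketch of generic conditional flow matching with a straight-line path. It is not VaVAM's or π0's code, and their time convention, noise schedule and network conditioning may differ. The names `flow_matching_example`, `euler_sample` and `oracle_velocity` are hypothetical, and `oracle_velocity` is a closed-form stand-in for a trained network on a toy dataset containing a single action.

```python
import random


def flow_matching_example(action, t, rng):
    """Build one training pair for conditional flow matching on a straight path.

    Assumed path: x_t = (1 - t) * noise + t * action, so the regression
    target for the velocity network is the constant (action - noise).
    """
    noise = [rng.gauss(0.0, 1.0) for _ in action]
    x_t = [(1 - t) * n + t * a for n, a in zip(noise, action)]
    target_velocity = [a - n for n, a in zip(noise, action)]
    return x_t, target_velocity


def euler_sample(velocity_fn, dim, num_steps, rng):
    """Generate an action by integrating dx/dt = v(x, t) from t = 0 (noise) to t = 1."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        v = velocity_fn(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Toy stand-in for a trained network: if every training action equals `target`,
# the exact marginal velocity on the straight path is (target - x) / (1 - t).
target = [0.3, -1.2]  # hypothetical 2D action vector


def oracle_velocity(x, t):
    return [(a - xi) / (1.0 - t) for a, xi in zip(target, x)]


rng = random.Random(0)
x_t, u = flow_matching_example(target, 0.25, rng)
sample = euler_sample(oracle_velocity, dim=2, num_steps=10, rng=rng)
```

During training, a network would regress `target_velocity` from `x_t`, `t` and the observation context. At inference, `euler_sample` turns Gaussian noise into an action in a few integration steps, which is what makes flow matching attractive for continuous action prediction.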

Each color represents a different learned concept:

🚶 Pedestrians

🏢 Buildings & structures

🚸 Crosswalks

Pure emergent semantic grouping!

Future work will study this in more detail 🕵️

[5/10]

We computed the scaling law and optimal frontier for you 😉

Spoiler: we need even more driving data!

⇨ Bigger models + more data = better generation

⇨ Better driving? Let's see...

All details in our report:

🔗 arxiv.org/abs/2502.15672

[4/10]
