http://snavely.io

Today I want to share two new works on this topic:

Eliciting higher alignment: arxiv.org/abs/2510.02425

Unpaired learning of unified reps: arxiv.org/abs/2510.08492

1/9

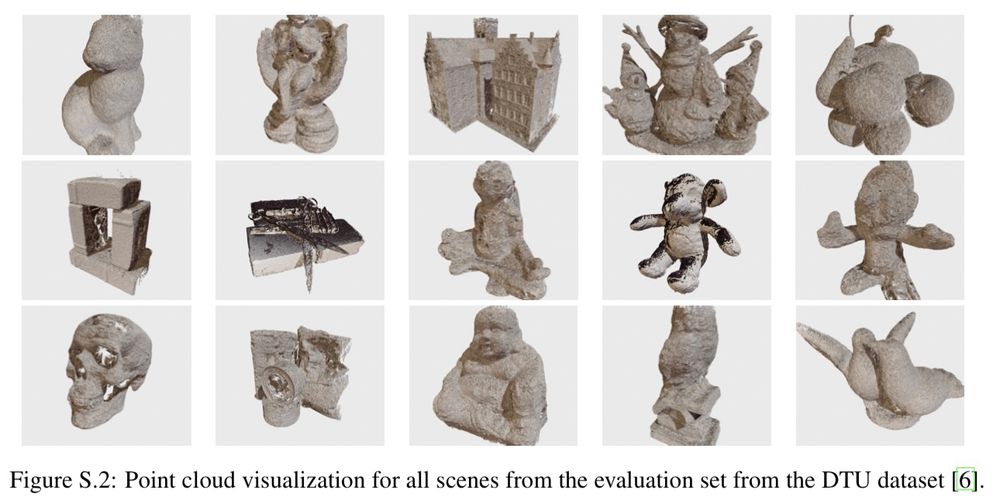

TTT3R offers a simple state update rule to enhance length generalization for #CUT3R — No fine-tuning required!

🔗Page: rover-xingyu.github.io/TTT3R

We rebuilt @taylorswift13’s "22" live at the 2013 Billboard Music Awards - in 3D!

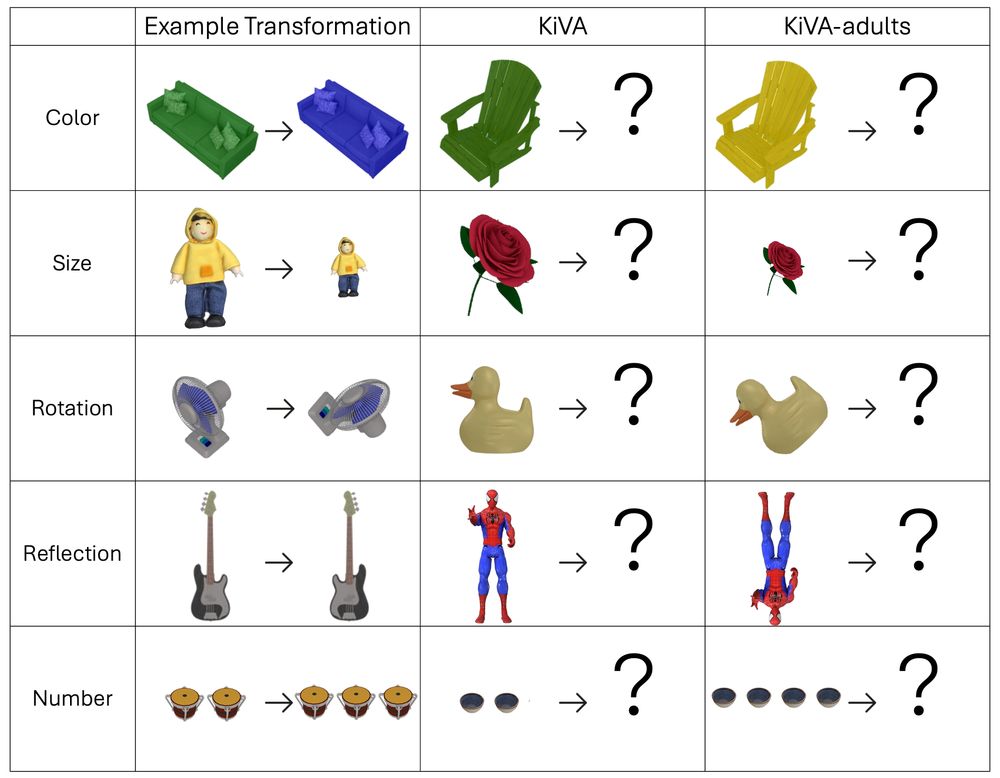

Put it to the test with the KiVA Challenge: a new benchmark for abstract visual reasoning, grounded in real developmental data from children and adults.

🏆 Prizes:

🥇$1K to the top model

🥈🥉$500

📅 Deadline: 10/7/25

🔗 kiva-challenge.github.io

@iccv.bsky.social

Think again!

Our Kid-Inspired Visual Analogies benchmark reveals where young children still win: ey242.github.io/kiva.github....

Catch our #ICLR2025 poster today to see where models still fall short!

Thurs. April 24

3-5:30 pm

Halls 3 + 2B #312

Chris Rockwell, @jtung.bsky.social, Tsung-Yi Lin, Ming-Yu Liu, David F. Fouhey, Chen-Hsuan Lin

tl;dr: a large-scale dataset of dynamic Internet videos annotated with camera poses

arxiv.org/abs/2504.17788

Our key insight: large-scale data is crucial for robust panoramic synthesis across diverse scenes.

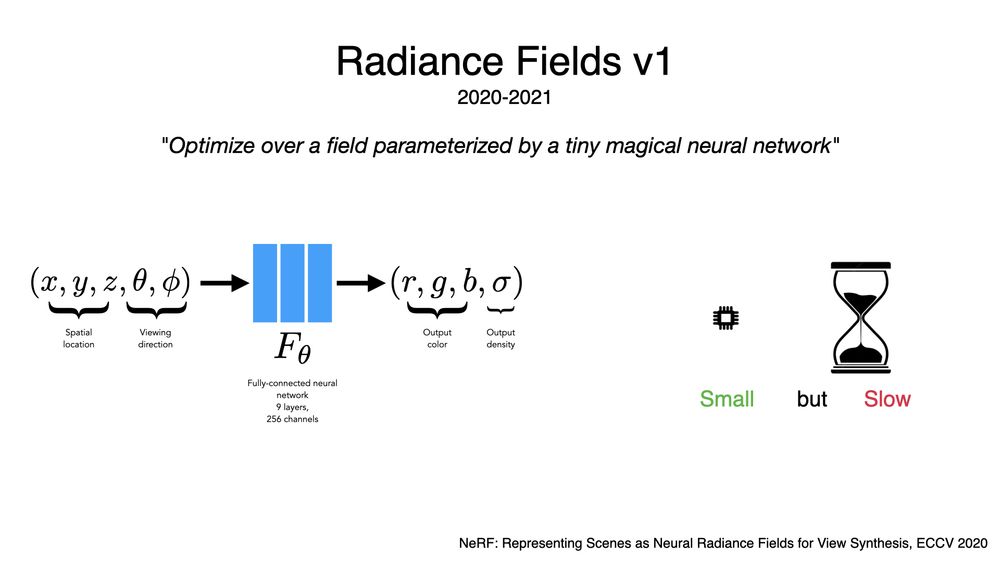

Radiance fields have had 3 distinct generations. First was NeRF: just positional encoding (posenc) and a tiny MLP. It was slow to train but worked really well, and it was unusually compact: the NeRF was smaller than the images.
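For readers who haven't seen the "posenc" step, here is a minimal sketch, assuming the sinusoidal encoding described in the original NeRF paper: each input coordinate x is mapped to sin(2^k·π·x) and cos(2^k·π·x) for k = 0 … L−1, with L = 10 for 3D positions and L = 4 for viewing directions. The function name `positional_encoding` and the plain-list output are illustrative choices, not code from the NeRF release. The encoded vector is what gets fed into the small MLP.

```python
import math


def positional_encoding(p, num_freqs=10):
    """NeRF-style sinusoidal encoding of a coordinate vector p (illustrative sketch).

    For each frequency index k in [0, num_freqs) and each coordinate x in p,
    appends sin(2^k * pi * x) and cos(2^k * pi * x). The output length is
    2 * num_freqs * len(p).
    """
    out = []
    for k in range(num_freqs):
        freq = (2.0 ** k) * math.pi  # frequencies double at each level
        for x in p:
            out.append(math.sin(freq * x))
            out.append(math.cos(freq * x))
    return out


if __name__ == "__main__":
    xyz = [0.1, -0.4, 0.7]
    print(len(positional_encoding(xyz)))               # 60: 3 coords x 2 x 10 freqs
    print(len(positional_encoding(xyz, num_freqs=4)))  # 24: the direction-sized encoding
```

The high-frequency sinusoids are what let a small MLP represent fine detail; without them, the network tends to produce overly smooth reconstructions.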

Check it out below 👇

🔗 Code: github.com/haian-jin/LVSM

📄 Paper: arxiv.org/abs/2410.17242

🌐 Project Page: haian-jin.github.io/projects/LVSM/

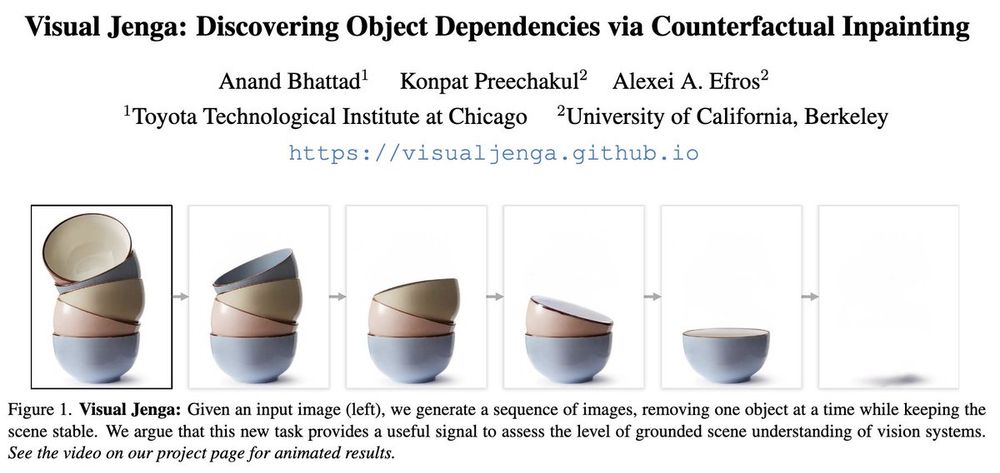

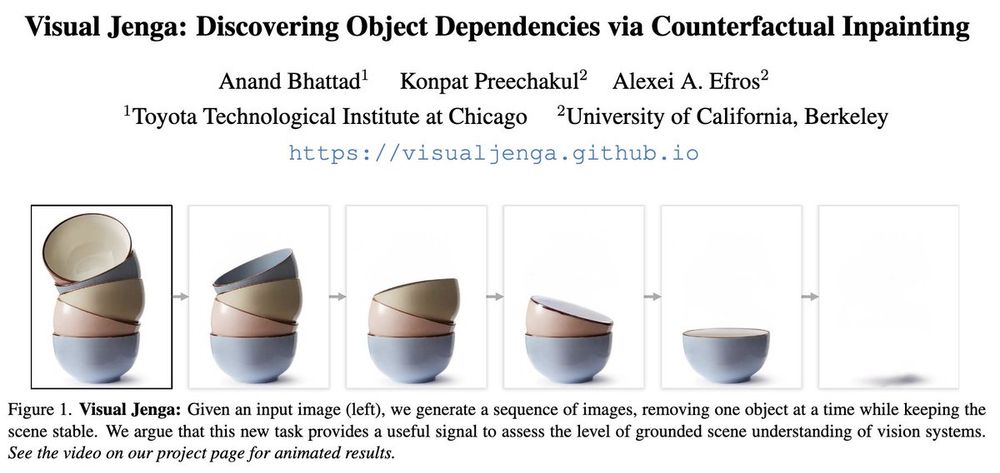

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

“This work feels like a marked evolution for me personally,” said Ọnụọha.

@snavely.bsky.social

Information and form: forms.gle/uDR2Q74drC4V...

Huge congrats to everyone involved and the community 🎉

(And I believe it's pronounced "cuter".)

An online 3D reasoning framework for many 3D tasks directly from just RGB. For static or dynamic scenes. Video or image collections, all in one!

Project Page: cut3r.github.io

Code and Model: github.com/CUT3R/CUT3R

I'm taking this to mean that LLMs are at play and will therefore influence the language so that the two words eventually come to mean the same thing!

www.settlingthescorepodcast.com/66-the-empir...

@scoresettlers.bsky.social

In the 1960s, did they call the 1880s "the Eighties"?