shwnmnl.github.io

We explore how our unique subjective experiences of the world affect mental health using a combination of psychometrics, NLP and genAI.

🔗 Read it here: doi.org/10.1111/pcn....

🧵👇

🧵of my highlights

the outputs shouldn’t replace your own thoughts, but help to refine them

open.substack.com/pub/shifting...

So understanding is "standing among", as in a mind that figuratively shares space with the concepts in question.

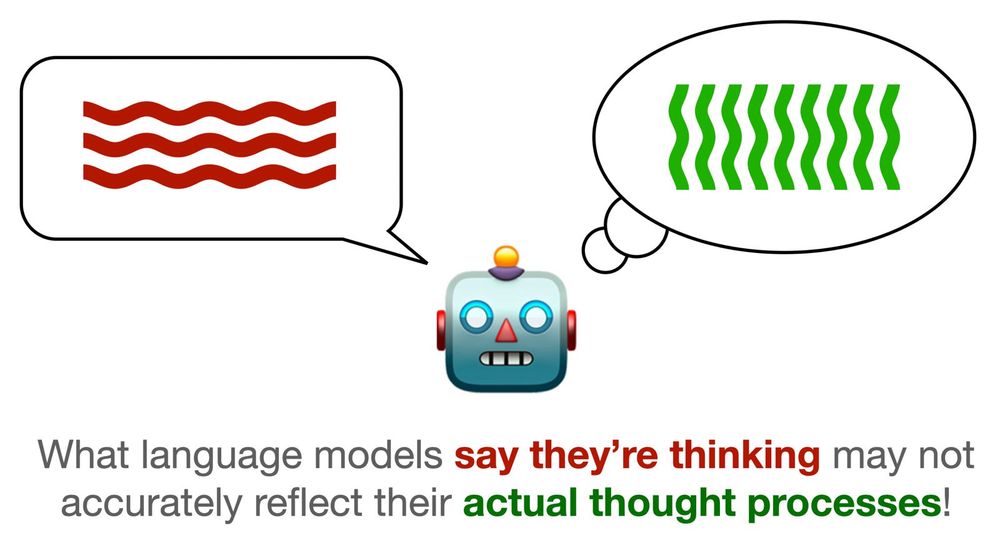

I really like this paper as a survey of the current literature on what chain-of-thought (CoT) is, but more importantly on what it's not.

It also serves as a cautionary tale about the (apparently quite common) misuse of CoT as an interpretability method.

doi.org/10.31234/osf...

#psychology

I focused on comparing fMRI techniques on a single subject, using fear as a case study.

school-brainhack.github.io/project/many...

This applies to everyday use and even more so to academic or scientific contexts.

As a self-described AI power user, here’s my two cents in a quick (6-minute) video demonstration.

youtu.be/JVPonpG6hkM

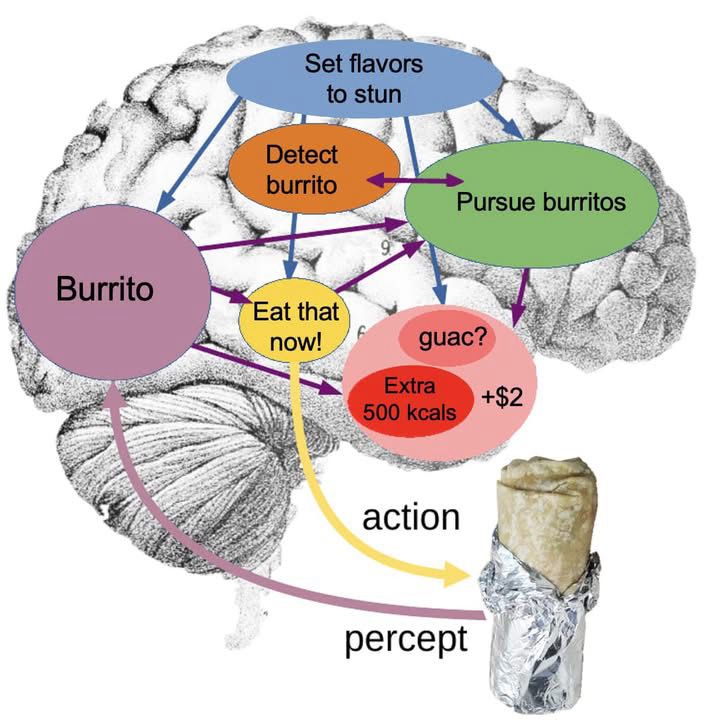

www.thetransmitter.org/brain-inspir...

(looking at a paper): "Ugh, can you maybe give me an 'abstract'? Still too long... maybe just 'highlights'...? Maybe a 'public interest statement'...?"

to be explanatory and useful, any claim ultimately has to cash out in terms consistent with our individual and collective subjective experiences

🧵

1/n

Research conducted by @shwnmnl.bsky.social, @vincenttd.bsky.social, Jean Gagnon and Frédéric Gosselin.

#MentalHealth #Psychology