https://alexrame.github.io/

Now integrating thinking capabilities, 2.5 Pro Experimental is our most performant Gemini model yet. It’s #1 on the LM Arena leaderboard. 🥇

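1. Two different clip hyperparams (a lower and a higher clip bound), so positive clipping can uplift more unexpected, low-probability tokens

2. Dynamic sampling: remove samples with flat rewards from the batch (if all responses to a prompt get the same reward, the advantage is zero)

3. Per-token loss

4. Handling overly long generations in the loss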

dapo-sia.github.io
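The four tricks above can be sketched in plain Python. This is a minimal, hypothetical illustration, not DAPO's code: it assumes GRPO-style group-normalized advantages and per-token importance ratios that are already computed, and the clip values `EPS_LOW = 0.2` / `EPS_HIGH = 0.28` are chosen for illustration. The names `groups`, `"rewards"`, `"ratios"`, `max_len` and `cache_len` are all hypothetical, and the linear soft penalty (added to the reward) is one assumed way of handling overly long generations.

```python
from statistics import mean, pstdev

# Clip bounds assumed for illustration: the upper bound is looser than the lower one,
# so tokens with a positive advantage can have their probability raised further.
EPS_LOW = 0.2
EPS_HIGH = 0.28


def clipped_token_objective(ratio, advantage, eps_low=EPS_LOW, eps_high=EPS_HIGH):
    """PPO-style clipped surrogate for one token, with decoupled lower/upper clip bounds."""
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)


def group_advantages(rewards, eps=1e-6):
    """GRPO-style advantage: each response's reward standardized within its group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def dynamic_sampling(groups):
    """Drop groups where every response got the same reward (zero advantage, no gradient)."""
    return [g for g in groups if len(set(g["rewards"])) > 1]


def soft_overlong_penalty(length, max_len, cache_len):
    """Penalty added to the reward: 0, then linear down to -1, then -1 past max_len."""
    if length <= max_len - cache_len:
        return 0.0
    if length <= max_len:
        return (max_len - cache_len - length) / cache_len
    return -1.0


def token_level_loss(groups):
    """Average the clipped objective over all tokens in the batch, not per response,
    so each response weighs in proportion to its length."""
    total, n_tokens = 0.0, 0
    for g in groups:
        advantages = group_advantages(g["rewards"])
        for token_ratios, adv in zip(g["ratios"], advantages):
            for ratio in token_ratios:
                total += clipped_token_objective(ratio, adv)
                n_tokens += 1
    if n_tokens == 0:
        return 0.0
    return -total / n_tokens  # negated: the optimizer minimizes the loss


# Usage: two prompts; the first has flat rewards and is filtered out.
batch = dynamic_sampling([
    {"rewards": [1.0, 1.0], "ratios": [[1.0], [1.0]]},
    {"rewards": [1.0, 0.0], "ratios": [[1.0, 1.0], [1.0]]},
])
loss = token_level_loss(batch)
```

With all ratios at 1.0, averaging per response would give a loss of 0 here, while the per-token average weights the 2-token response twice as much as the 1-token one.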

Report: storage.googleapis.com/deepmind-med...

On HF, under CC-BY licence: huggingface.co/kyutai/heliu...

OpenAI's Reinforcement Finetuning and RL for the masses

The cherry on Yann LeCun’s cake has finally been realized.

💡Our NeurIPS 2024 paper proposes 𝐌𝐚𝐍𝐨, a training-free and SOTA approach!

📑 arxiv.org/pdf/2405.18979

🖥️https://github.com/Renchunzi-Xie/MaNo

1/🧵(A surprise at the end!)

With Colin Raffel, UofT works on decentralizing, democratizing, and derisking large-scale AI. Wanna work on model m(o)erging, collaborative/decentralized learning, identifying & mitigating risks, etc.? Apply (deadline is Monday!)

web.cs.toronto.edu/graduate/how...

🤖📈

👉 Who else should be included?

Comment below or DM me to be added

go.bsky.app/dfvcLZ

Recently, @primeintellect.bsky.social announced that they had finished their 10B-parameter distributed training run, spread across the world.

What is it exactly?

🧵

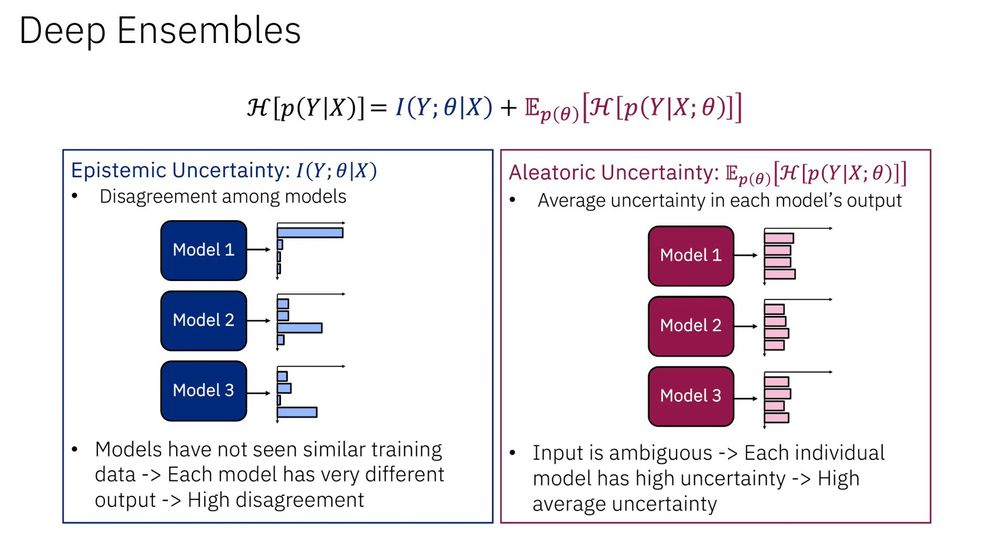

tl;dr: generate multiple clarifications of the input text with an external LLM, then forward each one through the model:

> disagreement between the outputs -> data uncertainty

> average uncertainty within each output -> model uncertainty
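A minimal sketch of the decomposition, assuming the external LLM has already produced the clarifications and that, for each one, we have the model's output distribution over a shared, finite answer set. Measuring "disagreement" as mutual information (entropy of the averaged prediction minus the average entropy) and "average uncertainty" as mean entropy is an assumption of this sketch; `decompose_uncertainty` is a hypothetical helper.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)


def decompose_uncertainty(dists):
    """Split total uncertainty across clarifications into data and model parts.

    dists: one output distribution per clarification, all over the same answer set.
    Returns (total, data_uncertainty, model_uncertainty).
    """
    k = len(dists)
    avg = [sum(d[j] for d in dists) / k for j in range(len(dists[0]))]
    total = entropy(avg)                            # uncertainty of the ensembled prediction
    model_unc = sum(entropy(d) for d in dists) / k  # avg uncertainty within each output
    data_unc = total - model_unc                    # disagreement between outputs (mutual information)
    return total, data_unc, model_unc


# Confident but conflicting answers per clarification: the ambiguity lies in the input.
ambiguous_input = decompose_uncertainty([[1.0, 0.0], [0.0, 1.0]])
# The same flat answer for every clarification: the model itself is unsure.
unsure_model = decompose_uncertainty([[0.5, 0.5], [0.5, 0.5]])
```

Because entropy is concave, the data term is never negative, so the two parts always add up to the total.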

@mathurinmassias.bsky.social has a good list of advice: mathurinm.github.io/cnrs_inria_a...

Official 🔗 www.ins2i.cnrs.fr/en/cnrsinfo/...

Don't wait!

1. Get the max prob

2. Compute the min prob as a threshold $\tau \in [0, 1]$ times that max prob: $p_{\min} = \tau \, p_{\max}$

3. Keep only the tokens whose probs are above that min prob

4. Sample from that pool, according to the renormalized probs

More robust to changes in temperature!
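The four steps above map onto a short Python sketch. It works on a plain list of logits; the `softmax` helper, the names `min_p_filter` and `min_p_sample`, and applying temperature before the filter are assumptions of this sketch (implementations differ on where temperature goes). The cutoff uses `>=` so the top token always survives, even with a threshold of 1.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities at a given temperature."""
    m = max(logits)
    exps = [math.exp((x - m) / temperature) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def min_p_filter(probs, threshold):
    """Keep tokens whose prob is at least threshold * max prob, then renormalize.

    Returns {token_index: renormalized_prob}.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    p_max = max(probs)                                        # 1. max prob
    p_min = threshold * p_max                                 # 2. min prob, relative to the max
    pool = {i: p for i, p in enumerate(probs) if p >= p_min}  # 3. tokens above the cutoff
    total = sum(pool.values())
    return {i: p / total for i, p in pool.items()}            # 4. renormalized probs


def min_p_sample(logits, threshold, temperature=1.0, rng=random):
    """Sample one token index with min-p filtering applied after temperature scaling."""
    pool = min_p_filter(softmax(logits, temperature), threshold)
    tokens = list(pool)
    return rng.choices(tokens, weights=[pool[t] for t in tokens], k=1)[0]


# Usage: because the cutoff is relative to the top prob, the pool size adapts
# to how peaked the distribution is at each temperature.
logits = [2.0, 1.5, 0.0, -1.0]
pools = {t: sorted(min_p_filter(softmax(logits, t), threshold=0.1)) for t in (0.5, 1.0, 2.0)}
```

In logit space, a token is kept when its logit is at least the top logit plus $T \ln \tau$, so the cutoff moves with the temperature $T$ instead of being a fixed probability mass as in top-p.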

We've been adding over a million users per day for the last few days. To celebrate, here are 20 fun facts about Bluesky:

1/