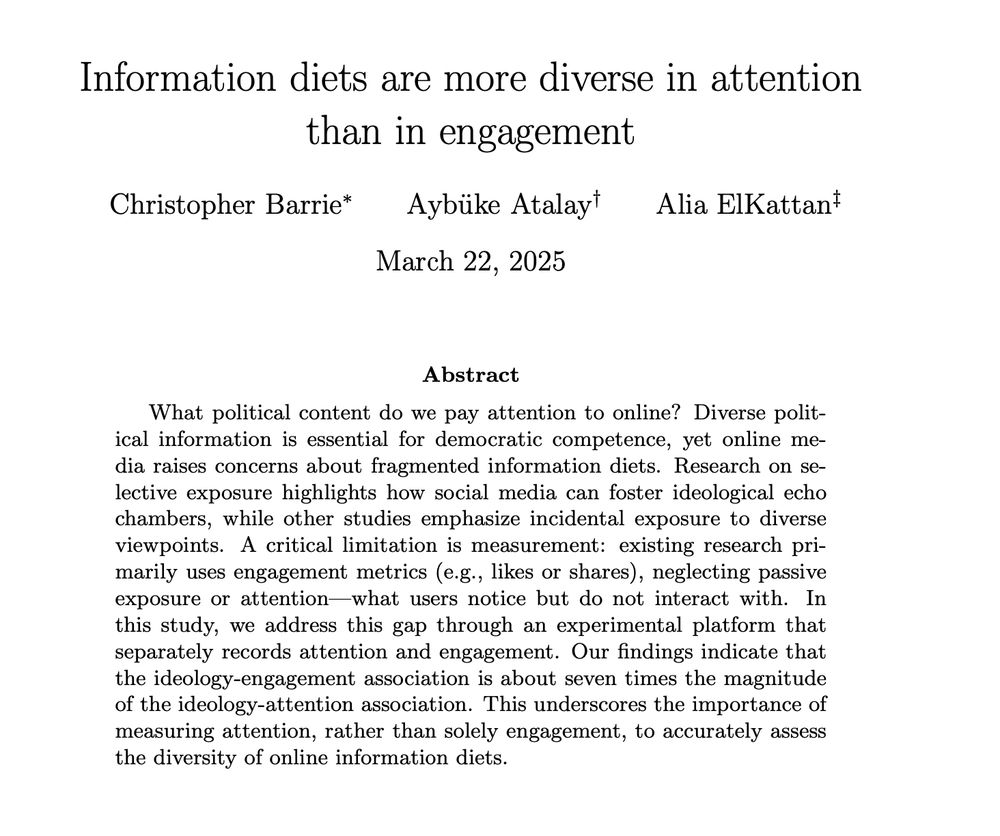

Ever wondered what content people actually pay *attention* to online? Our new research reveals that you likely pay attention to far more varied political content than your likes and shares suggest.

Beyond Demographics: Fine-tuning Large Language Models to Predict Individuals' Subjective Text Perceptions

https://arxiv.org/abs/2502.20897

Uncertainty-aware abstention in medical diagnosis based on medical texts

https://arxiv.org/abs/2502.18050

Language Models' Factuality Depends on the Language of Inquiry

https://arxiv.org/abs/2502.17955

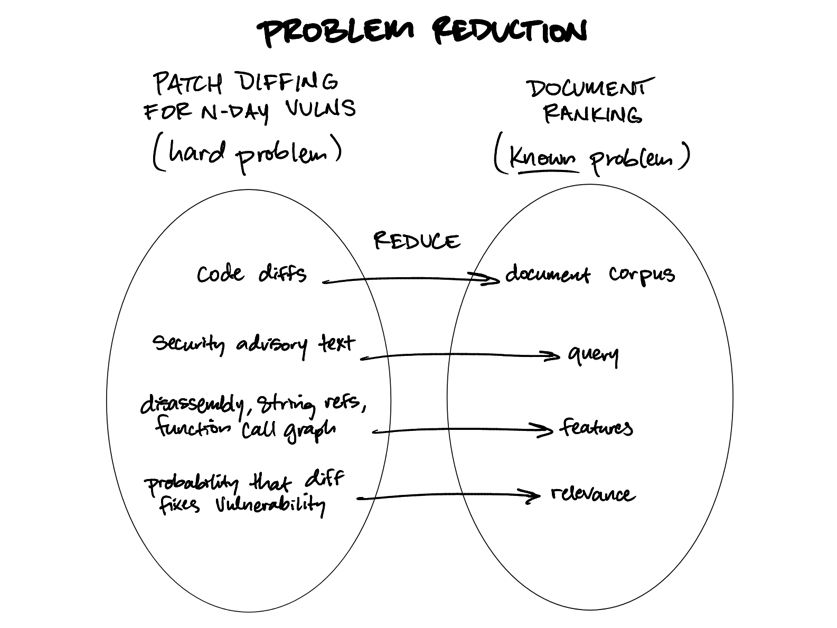

noperator.dev/posts/docume...

First of all, information salience is a fuzzy concept. So how can we even measure it? (1/6)

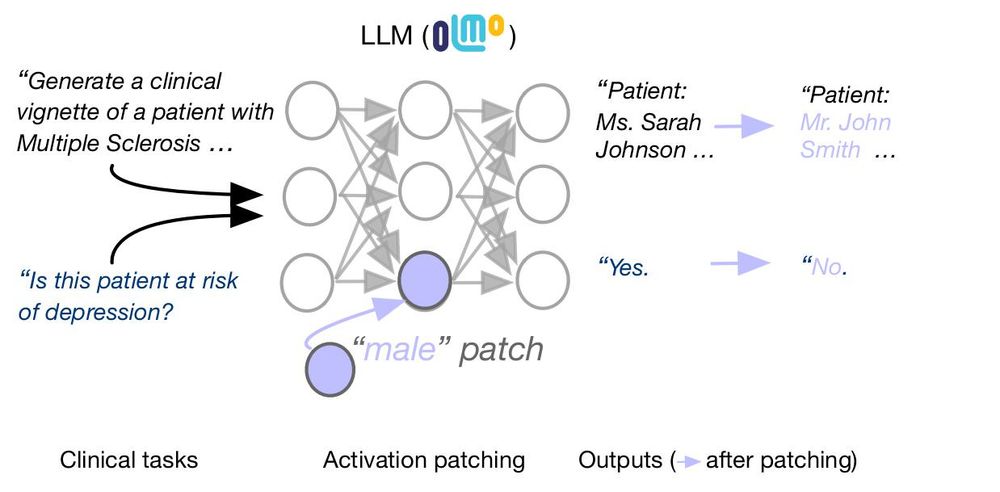

Work w/ @arnabsensharma.bsky.social, @silvioamir.bsky.social, @davidbau.bsky.social, @byron.bsky.social

arxiv.org/abs/2502.13319

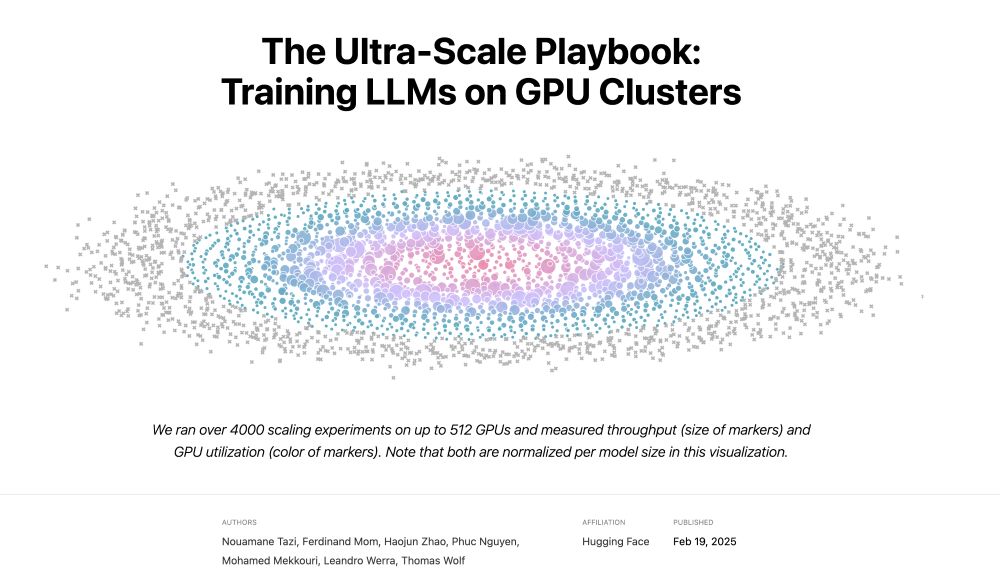

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how/why to overlap compute & comms, with theory, motivation, interactive plots and 4000+ experiments!

LLMs can hallucinate - but did you know they can do so with high certainty even when they know the correct answer? 🤯

We find those hallucinations in our latest work with @itay-itzhak.bsky.social, @fbarez.bsky.social, @gabistanovsky.bsky.social and Yonatan Belinkov

NN-CIFT slashes data valuation costs by 99% using tiny neural nets (205k params, just 0.0027% of 8B LLMs) while maintaining top-tier performance!

This is a significant update that tests *a lot* more data, suggests post-processing techniques, outlines how to compare across models, and tests with new models...

https://buff.ly/3ErakOl

#Trisolarans #aliens #xenolinguistics #ThreeBodyProblem #linguistics #language #SciFi #review

@aaclmeeting.bsky.social

Programming languages [takes a big joint hit]: "What if there were 5 kinds of nothingness?"

Instruction finetuning (IFT/SFT): imprinting features or shape in responses

Preference finetuning (RLHF/DPO/etc): style

Reinforcement finetuning (RFT/RLVR/etc): learning new behaviors

We show: fact checking w/ crowd workers is more efficient when using LLM summaries, and quality doesn't suffer.

arxiv.org/abs/2501.18265

Current pipelines use activating inputs, which is costly and ignores how features causally affect model outputs!

We propose efficient output-centric methods that better predict the steering effect of a feature.

New preprint led by @yoav.ml 🧵1/

https://go.nature.com/42tH8Ai

"My Answer is C" by Wang et al. highlights that first-token evaluation does not accurately reflect LLM behavior in user interactions, urging against sole reliance on this method.

I've been looking at #abusive behavior online, as well as sharing of personal experiences with violence, incl. psychological #trauma.

Excited to push this research forward and connect with others 🌐
