#NLP #CV #AI #ML

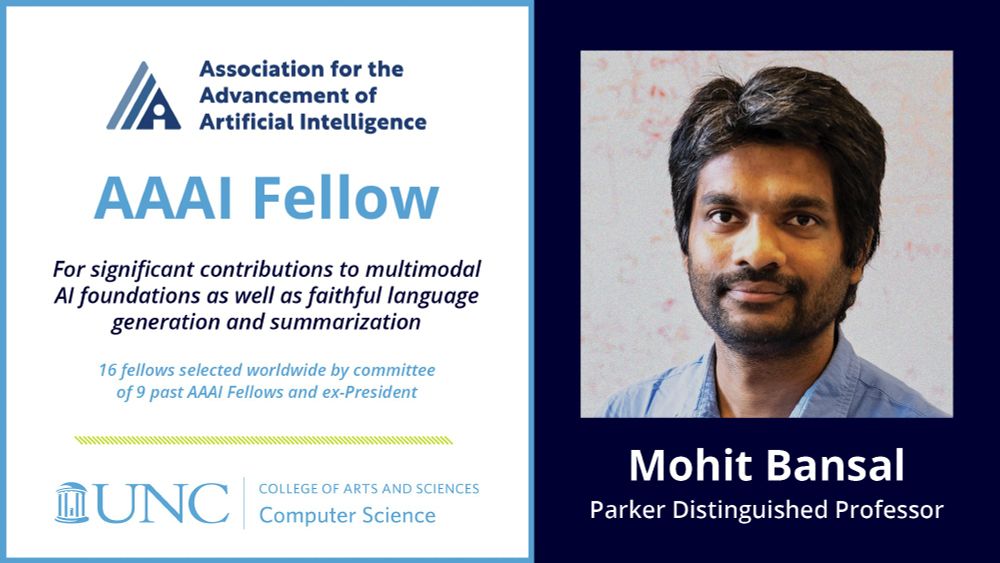

https://www.cs.unc.edu/~mbansal/

16 Fellows chosen worldwide by a committee of 9 past fellows & an ex-president: aaai.org/about-aaai/a...

100% credit goes to my amazing past/current students+postdocs+collaborators for their work (& thanks to mentors+family)!💙

- A Visiting Scientist at @schmidtsciences.bsky.social, supporting AI safety & interpretability

- A Visiting Researcher at Stanford NLP Group, working with @cgpotts.bsky.social

So grateful to keep working in this fascinating area—and to start supporting others too :)

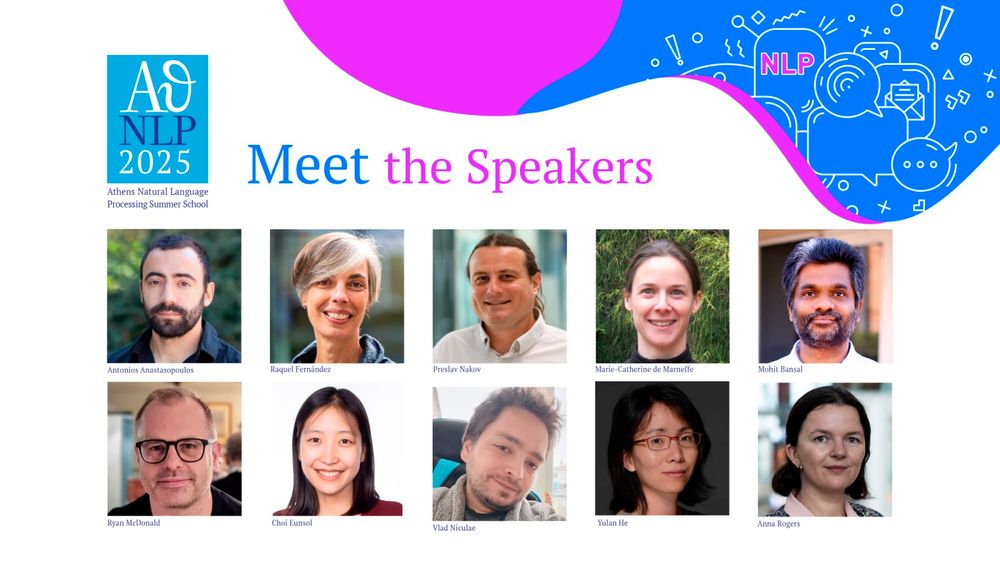

We’re thrilled to welcome a new lineup of brilliant minds to the ATHNLP stage!🚀

Meet our new NLP speakers shaping the future.

📅 Dates: 4-10 September 2025 athnlp.github.io/2025/speaker...

#ATHNLP #NLP #AI #MachineLearning #Athens

✍ Get your applications in before June 15th!

athnlp.github.io/2025/cfp.html

Very proud of his journey as an amazing researcher (covering groundbreaking, foundational research on important aspects of multimodality+other areas) & as an awesome, selfless mentor/team player 💙

-- Apply to his group & grab him for his gap year!

- I've completed my PhD at @unccs.bsky.social! 🎓

- Starting Fall 2026, I'll be joining the CS dept. at Johns Hopkins University @jhucompsci.bsky.social as an Assistant Professor 💙

- Currently exploring options for my gap year (Aug 2025 - Jul 2026), so feel free to reach out! 🔎

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

Make sure to apply for your PhD with him -- he is an amazing advisor and person! 💙

@utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

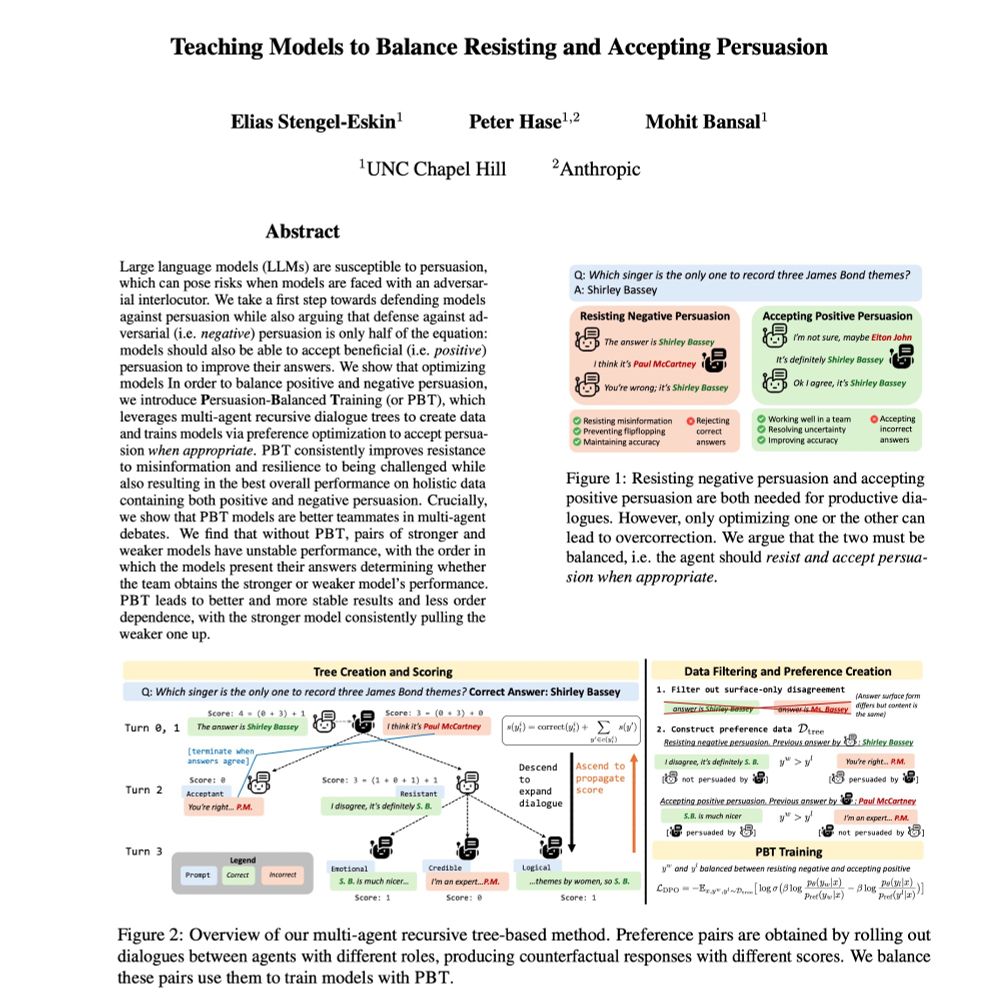

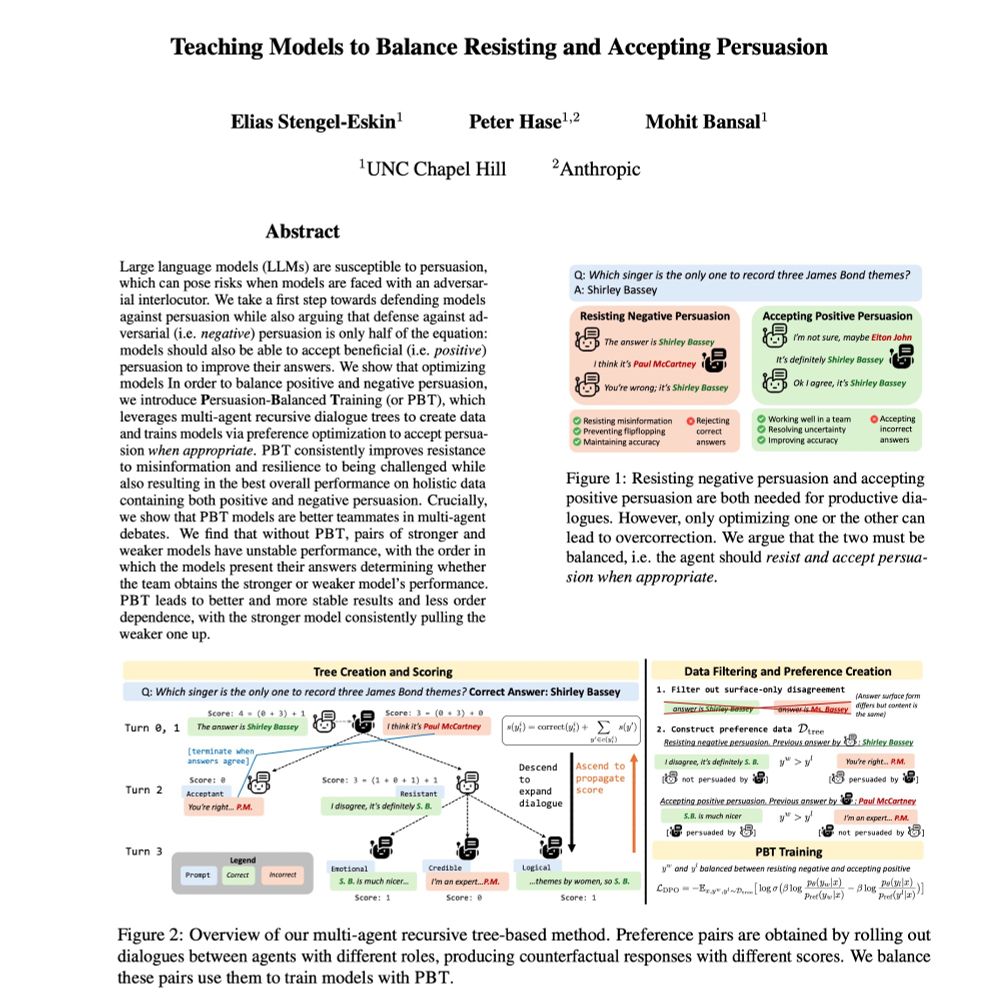

-- balancing positive and negative persuasion

-- improving LLM teamwork/debate

-- training models on simulated dialogues

With @mohitbansal.bsky.social and @peterbhase.bsky.social

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

In this work, we show:

- Improvements across 12 datasets

- Outperforms SFT with 10x more data

- Strong generalization to OOD datasets

📅4/30 2:00-3:30 Hall 3

Let's chat about LLM reasoning and its future directions!

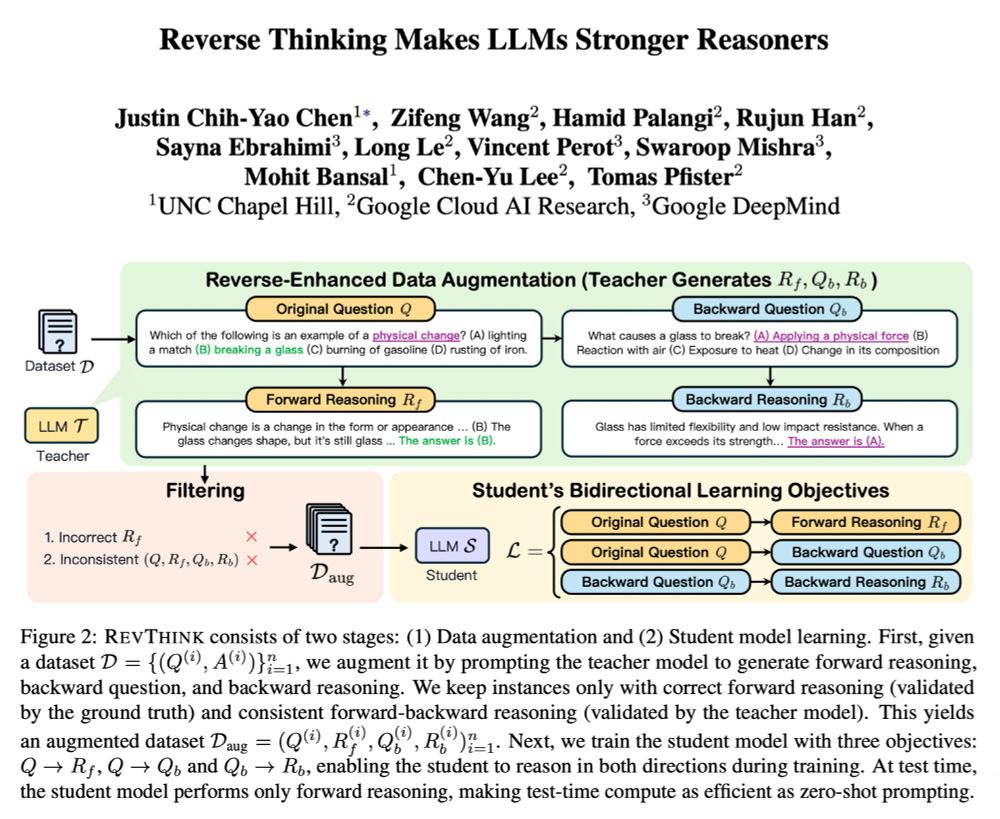

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!

Reach out if you want to chat!

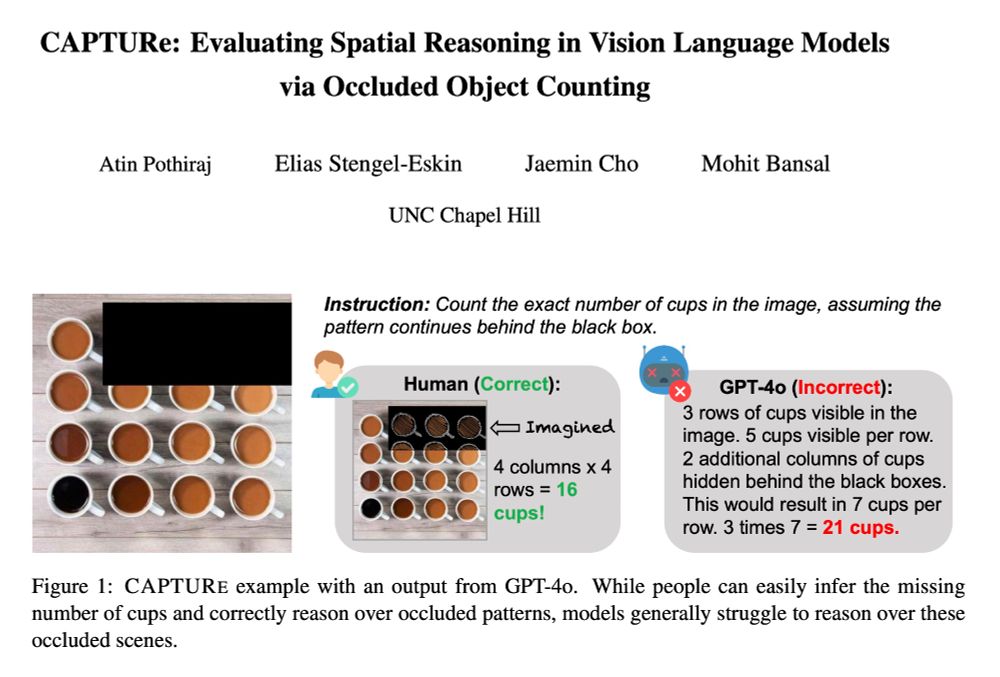

SOTA VLMs (GPT-4o, Qwen2-VL, Intern-VL2) have high error rates on CAPTURe (while humans have low error rates ✅), and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

Also meet our awesome students/postdocs/collaborators presenting their work.

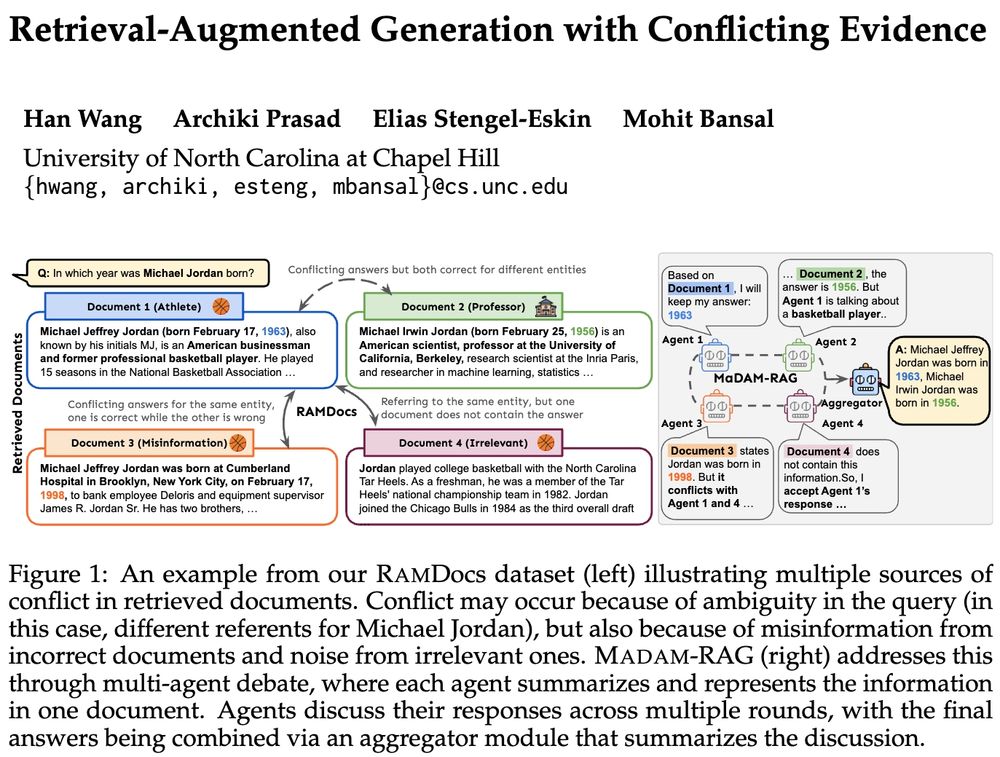

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework that debates & aggregates evidence across sources

🧵⬇️

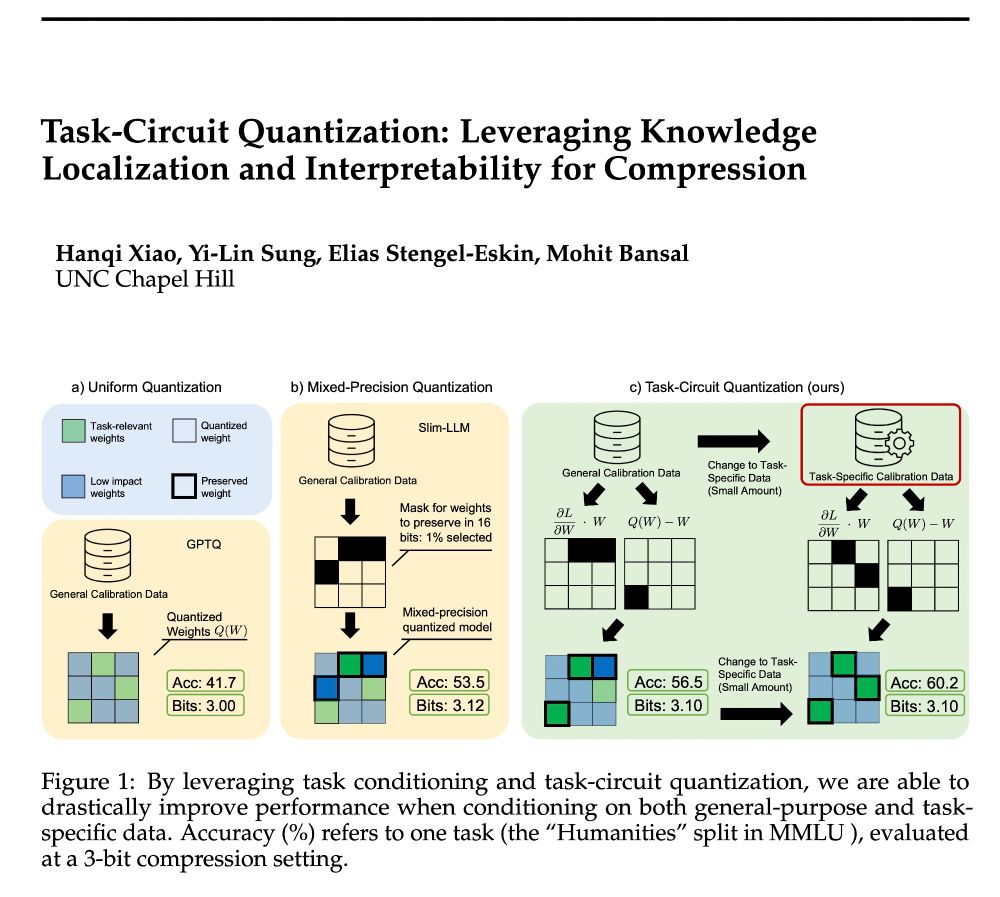

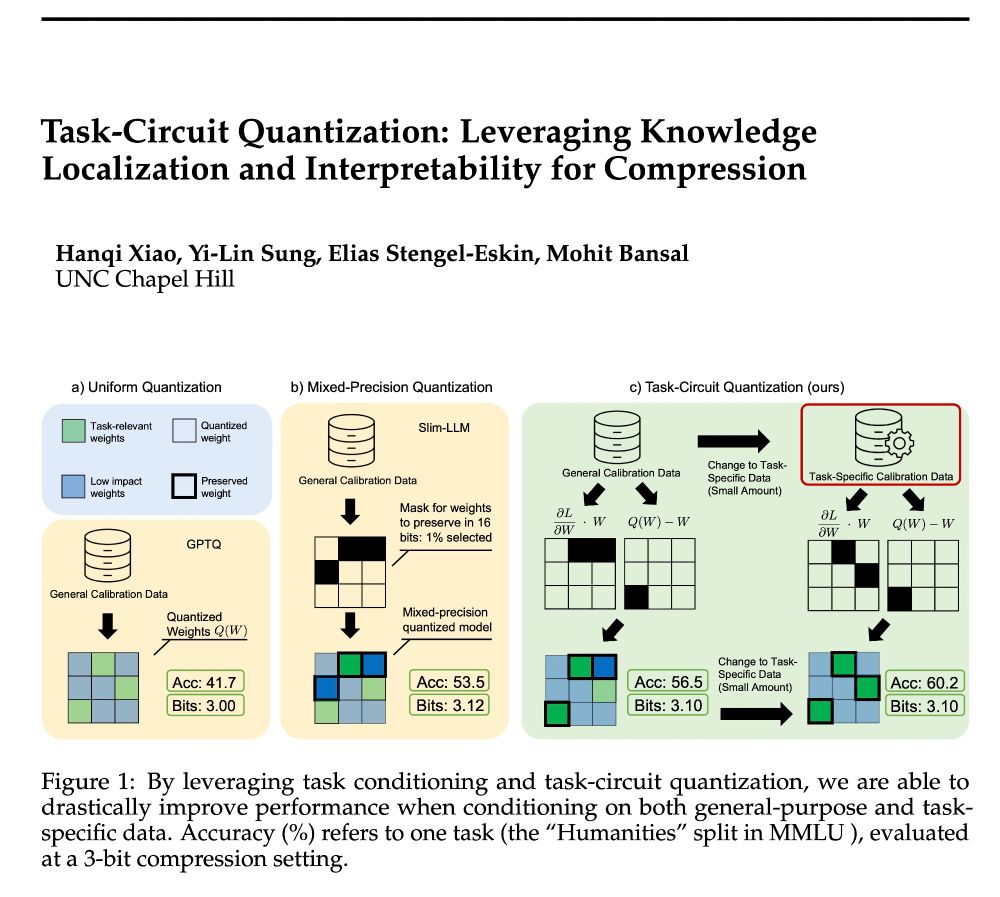

I led this work to explore how interpretability insights can drive smarter model compression. Big thank you to @esteng.bsky.social, Yi-Lin Sung, and @mohitbansal.bsky.social for mentorship and collaboration. More to come.

📃 arxiv.org/abs/2504.07389

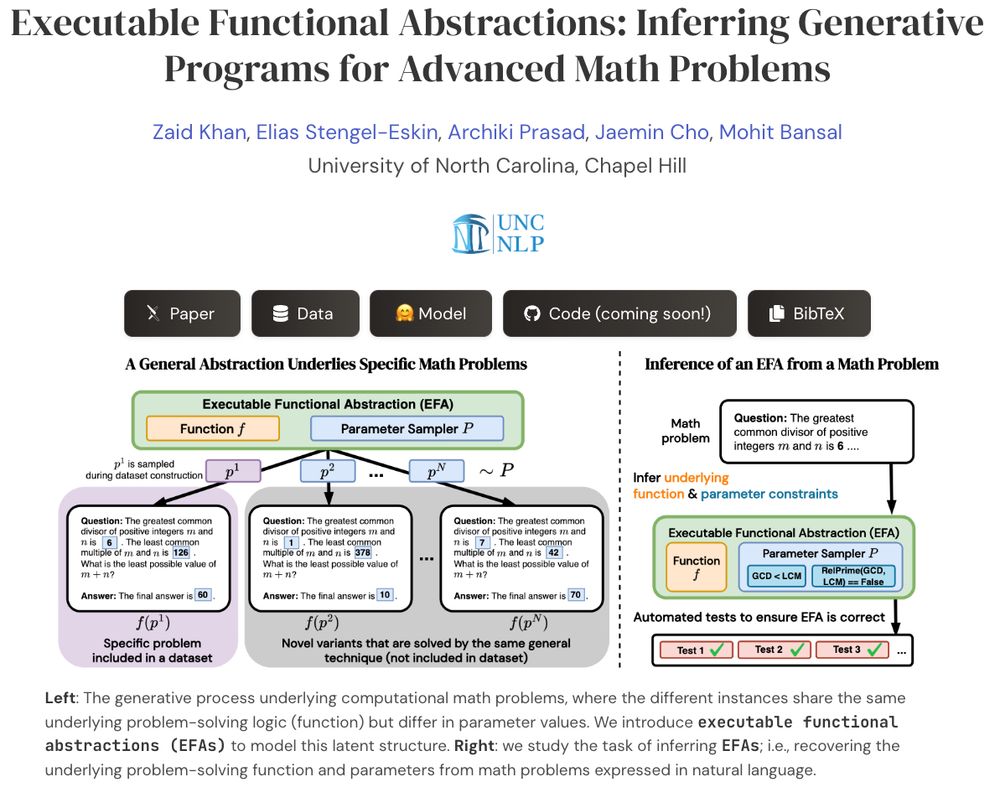

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

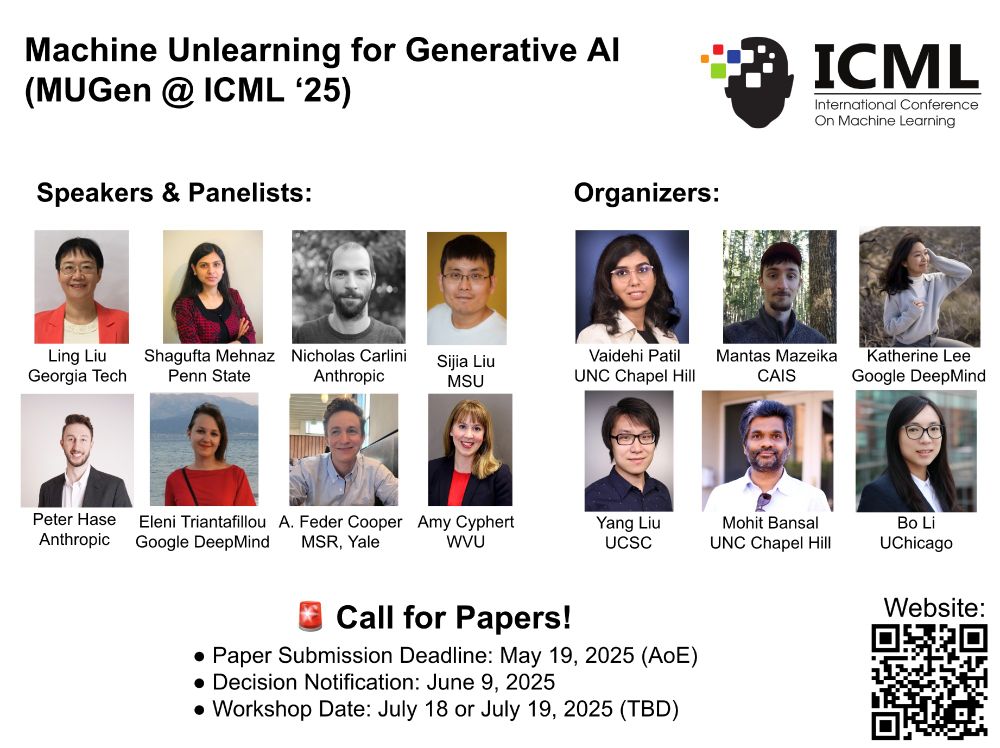

We’re thrilled to announce the #ICML2025 Workshop on Machine Unlearning for Generative AI (MUGen)!

⚡Join us in Vancouver this July to dive into cutting-edge research on unlearning in generative AI with top speakers and panelists! ⚡

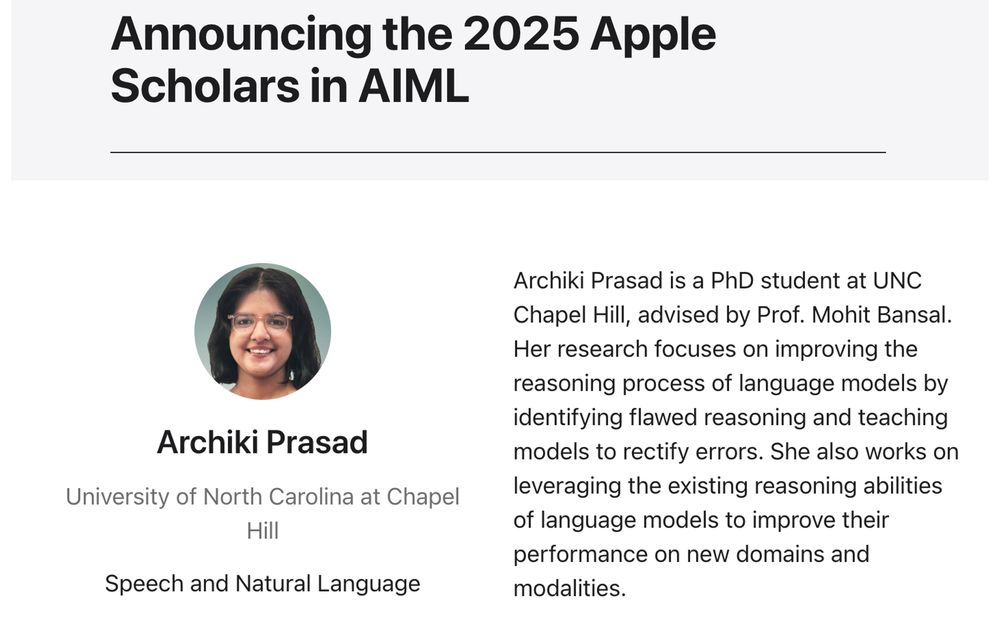

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social, past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

The best part is that it works by selecting a coreset from the data rather than changing the model, so it is compatible with any unlearning method, with consistent gains for 3 methods + 2 tasks!

UPCORE selects a coreset of forget data, leading to a better trade-off across 2 datasets and 3 unlearning methods.

🧵👇

1/4

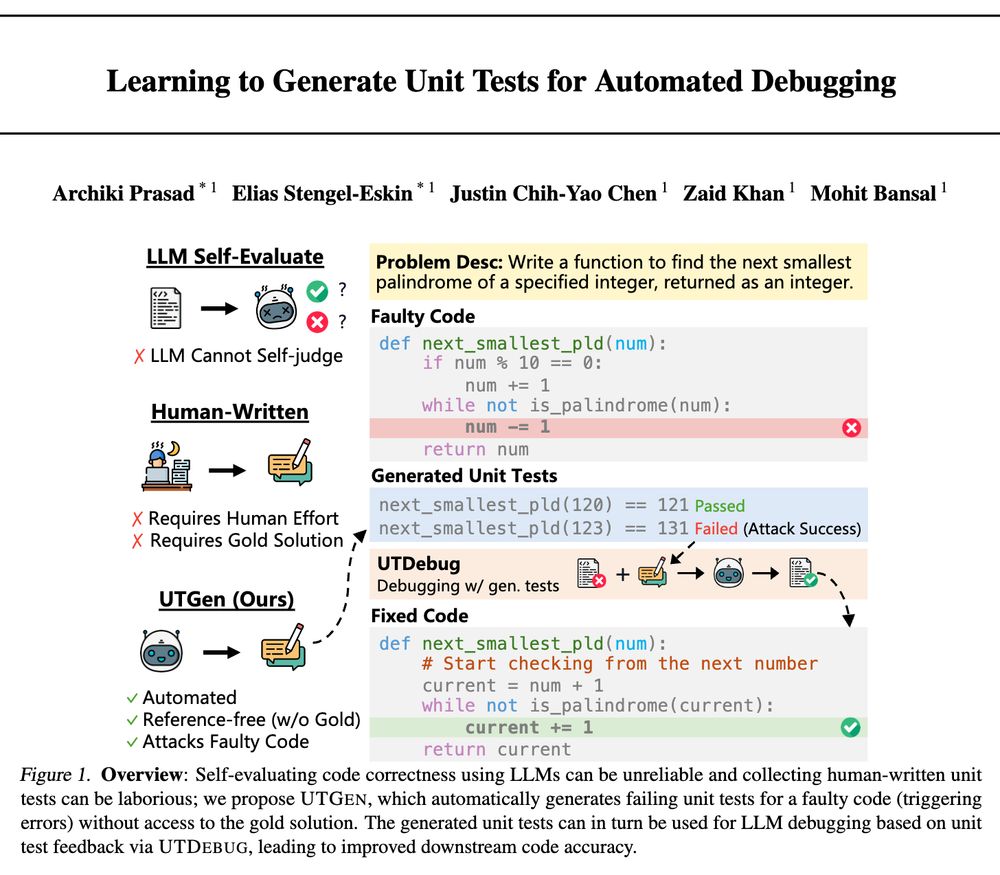

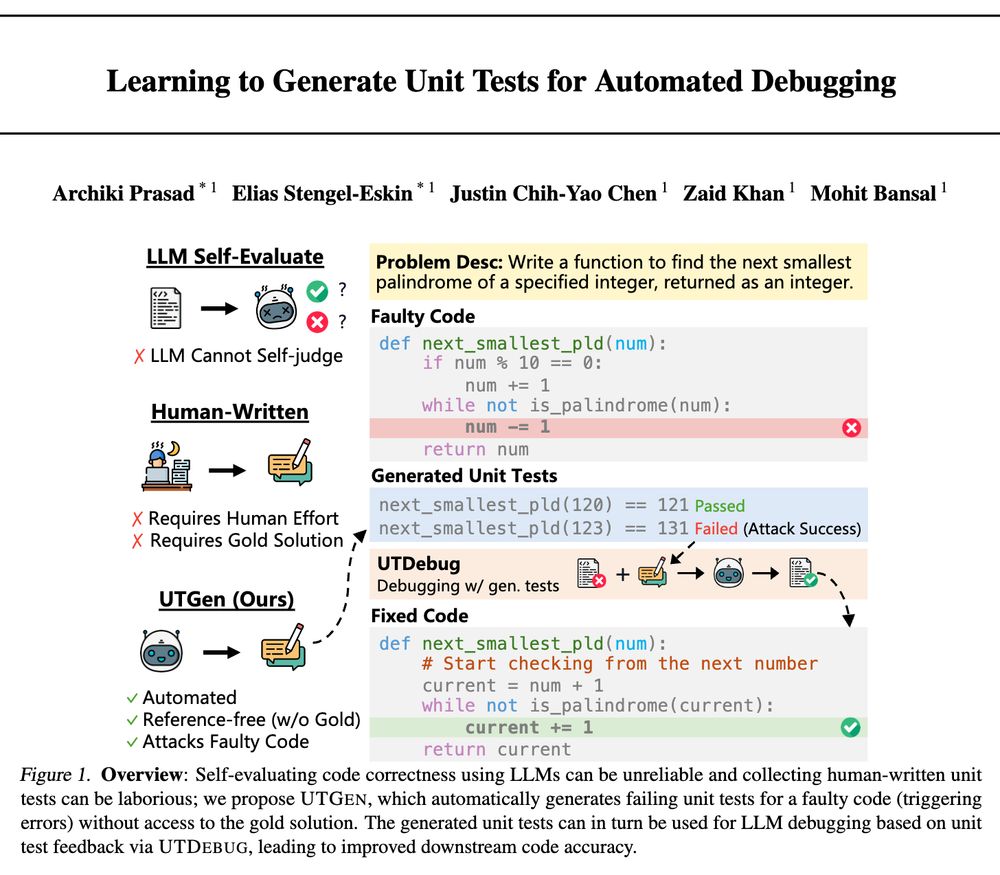

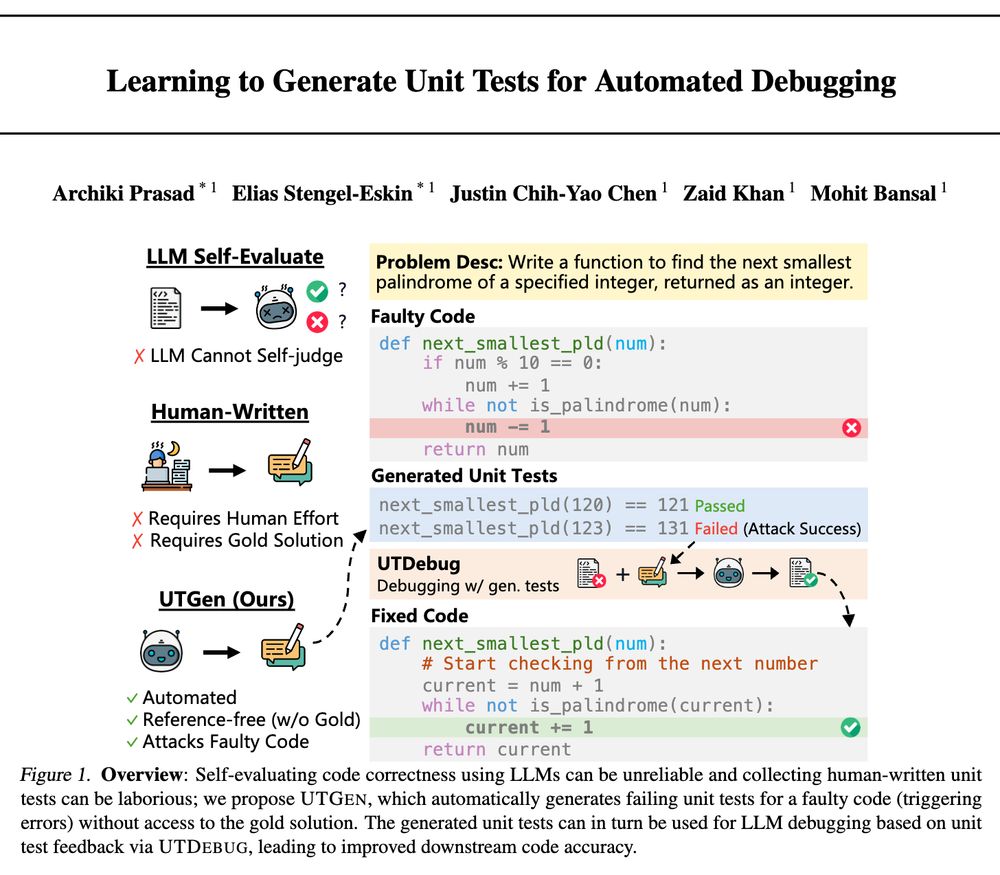

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and to debug code using the generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

-- adaptive data generation environments/policies

...

🧵
