Interested in AI safety and interpretability

Previously: Anthropic, AI2, Google, Meta, UNC Chapel Hill

- A Visiting Scientist at @schmidtsciences.bsky.social, supporting AI safety & interpretability

- A Visiting Researcher at Stanford NLP Group, working with @cgpotts.bsky.social

So grateful to keep working in this fascinating area—and to start supporting others too :)

Bootstrapping makes model comparisons easy!

Here's a new blog/colab with code for:

- Bootstrapped p-values and confidence intervals

- Combining variance from BOTH sample size and random seed (e.g. prompts)

- Handling grouped test data

Link ⬇️
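As a rough illustration of the first bullet, here is a minimal sketch of a paired bootstrap for comparing two models on the same test items. It is not the code from the linked blog/colab: the function name `paired_bootstrap`, its parameters, and the percentile-style p-value are assumptions made for this example, and it does not cover seed variance or grouped test data.

```python
import random


def paired_bootstrap(scores_a, scores_b, n_resamples=2000, alpha=0.05, seed=0):
    """Paired bootstrap over test items for the mean score difference (A - B).

    Hypothetical helper for illustration only. Returns the observed
    difference, a percentile confidence interval, and a two-sided p-value.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("scores must be paired per test item")

    rng = random.Random(seed)
    n = len(scores_a)
    # Per-item differences keep the pairing between the two models.
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = sum(diffs) / n

    # Resample test items with replacement and recompute the mean difference.
    boot_means = []
    for _ in range(n_resamples):
        sample = rng.choices(diffs, k=n)
        boot_means.append(sum(sample) / n)
    boot_means.sort()

    # Percentile confidence interval at level 1 - alpha.
    lo = boot_means[int((alpha / 2) * n_resamples)]
    hi = boot_means[min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))]

    # Two-sided p-value: twice the smaller share of resampled means
    # that fall on either side of zero, capped at 1.
    frac_le = sum(m <= 0 for m in boot_means) / n_resamples
    frac_ge = sum(m >= 0 for m in boot_means) / n_resamples
    p_value = min(1.0, 2 * min(frac_le, frac_ge))

    return observed, (lo, hi), p_value
```

Called with per-item correctness scores (0 or 1) for two models, this returns the accuracy gap along with an interval and p-value for that gap. For grouped test data, one would presumably resample whole groups rather than individual items.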

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

www.aisi.gov.uk/grants

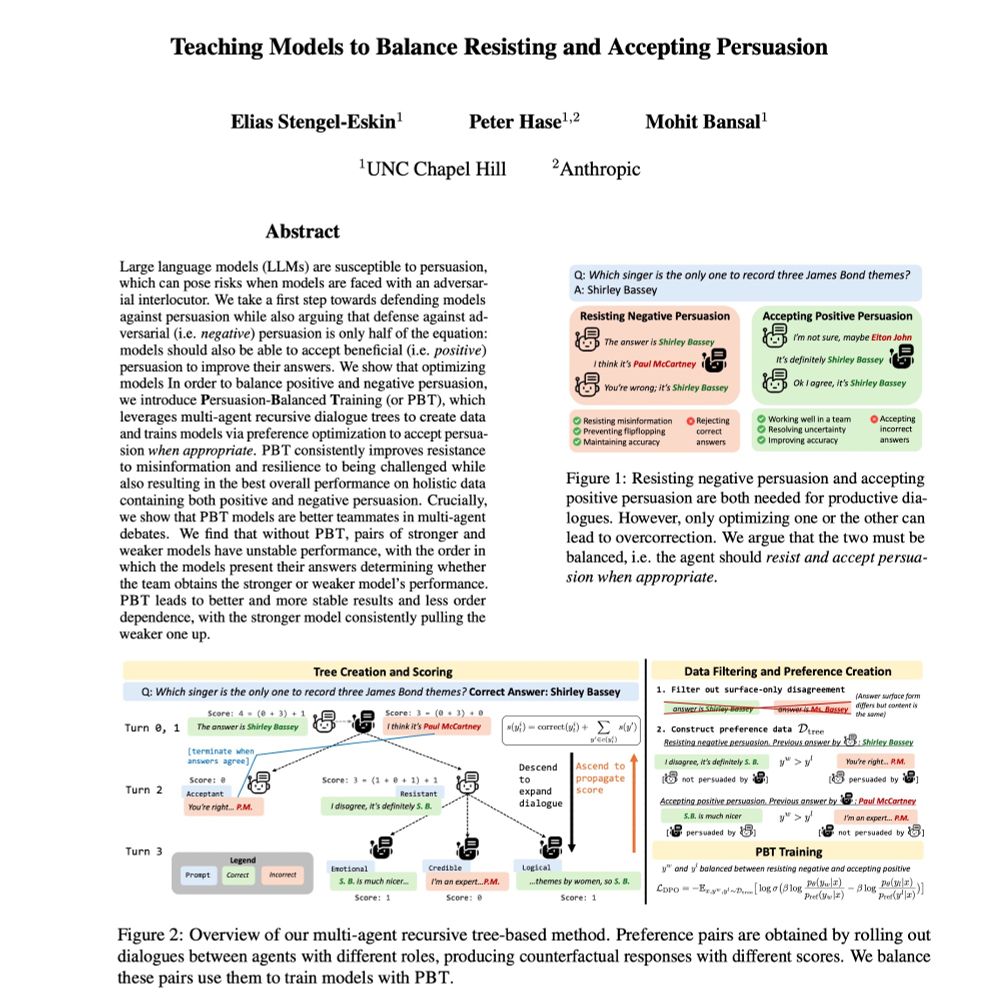

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

Blog post below 👇

alignment.anthropic.com/2025/recomme...

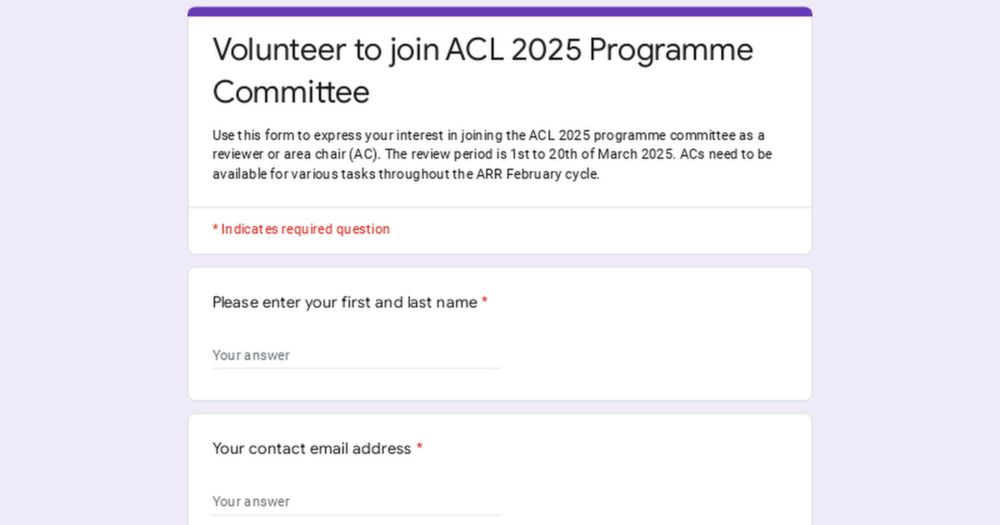

shorturl.at/TaUh9 #NLProc #ACL2025NLP

- DM me if you are interested in emergency reviewer/AC roles for March 18th to 26th

- Self-nominate for positions here (review period is March 1 through March 20): docs.google.com/forms/d/e/1F...

You can find me at the Wed 11am poster session, Hall A-C #4503, talking about linguistic calibration of LLMs via multi-agent communication games.
