They were thought to rely only on a positional one—but when many entities appear, that system breaks down.

Our new paper shows what these pointers are and how they interact 👇

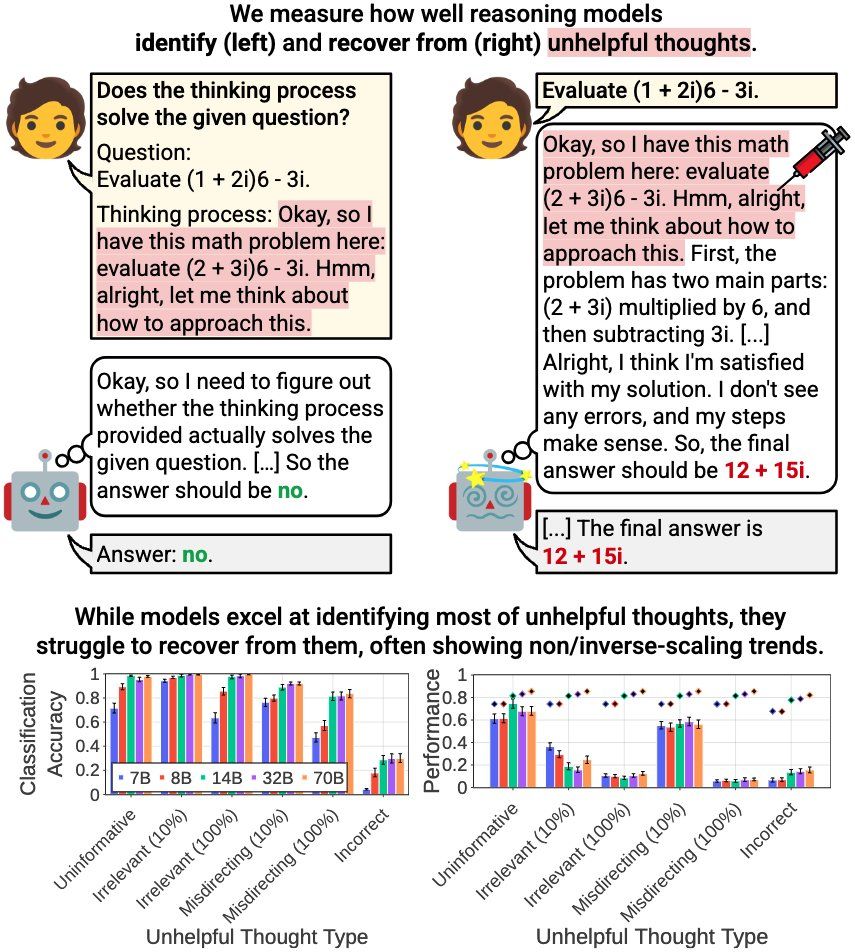

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

Most methods are shallow, coarse, or overreach, adversely affecting related or general knowledge.

We introduce🪝𝐏𝐈𝐒𝐂𝐄𝐒 — a general framework for Precise In-parameter Concept EraSure. 🧵 1/

Also, we are organizing a workshop at #ICML2025 which is inspired by some of the questions discussed in the paper: actionable-interpretability.github.io

Website: actionable-interpretability.github.io

Deadline: May 9

> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!

Please sign up and share the form below 👇

forms.gle/3a52jbDNB9bd...

Our new paper shows how monitoring and intervention can prevent agents from going rogue, boosting performance by up to 20%. We're also releasing a new multi-agent environment 🕵️♂️

We find that (1) it's possible to detect rogue agents early on

(2) interventions can boost system performance by up to 20%!

Thread with details and paper link below!

Current pipelines use activating inputs, which is costly and ignores how features causally affect model outputs!

We propose efficient output-centric methods that better predict the steering effect of a feature.

New preprint led by @yoav.ml 🧵1/

🚨 New Paper Alert: Open Problem in Machine Unlearning for AI Safety 🚨

Can AI truly "forget"? While unlearning promises data removal, controlling emergent capabilities is an inherent challenge. Here's why it matters: 👇

Paper: arxiv.org/pdf/2501.04952

1/8

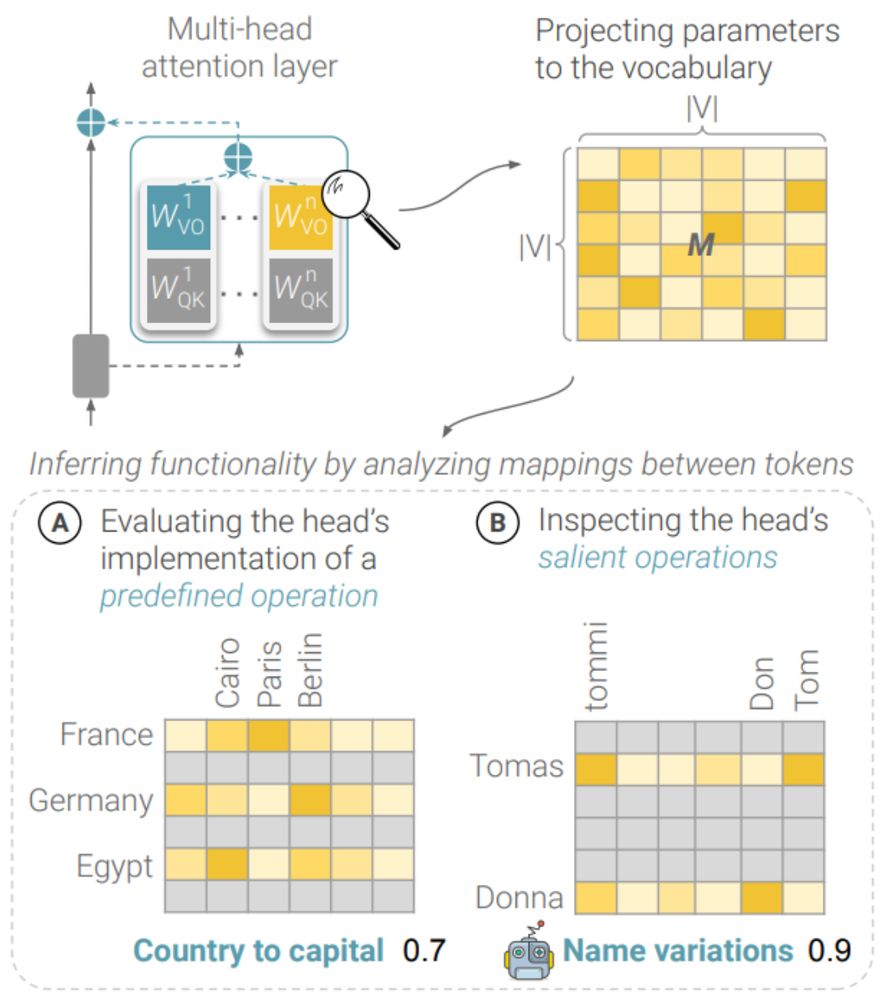

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)

shorturl.at/TaUh9 #NLProc #ACL2025NLP

Nominations and self-nominations go here 👇

docs.google.com/forms/d/e/1F...

youtu.be/2AthqCX3h8U

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes: they can recall and compose facts not seen together in training, rather than guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N

Here are 2 reasons this may be hard. 🧵

women in nlp: bsky.app/starter-pack...

nlp #1: bsky.app/starter-pack...

nlp #2: bsky.app/starter-pack...

ml/data/tech: bsky.app/starter-pack...

robotics & ai: bsky.app/starter-pack...

Looking for an emergency reviewer 🚨🚨

The review is for an ARR submission about tool usage in LLMs and should be submitted within the next 30 hours.

If you have reviewed for ARR/*CL conferences before and are interested, please DM me 🙏 #NLProc #NLP